Summary

Metaphysical debates about consciousness, including whether phenomenal qualia exist at all, are mostly fought in the epistemic realm: Which view is more likely true? But given the difficulty of the issue, as evidenced by disagreement among very smart thinkers, we may wish to supplement epistemic arguments with prudential ones—appealing to what the consequences for altruism would be if different views were true. If we're certain we only care about qualia over and above physical function, then we should act as if such qualia exist, since if they don't, nothing matters. On the flip side, if we mainly care about function, we can pretend qualia don't exist, whether they do or not. Both pro-qualia and anti-qualia views also imply some limits on the powers of human knowledge and, by implication, on our abilities to make a positive difference in the world.


Introduction

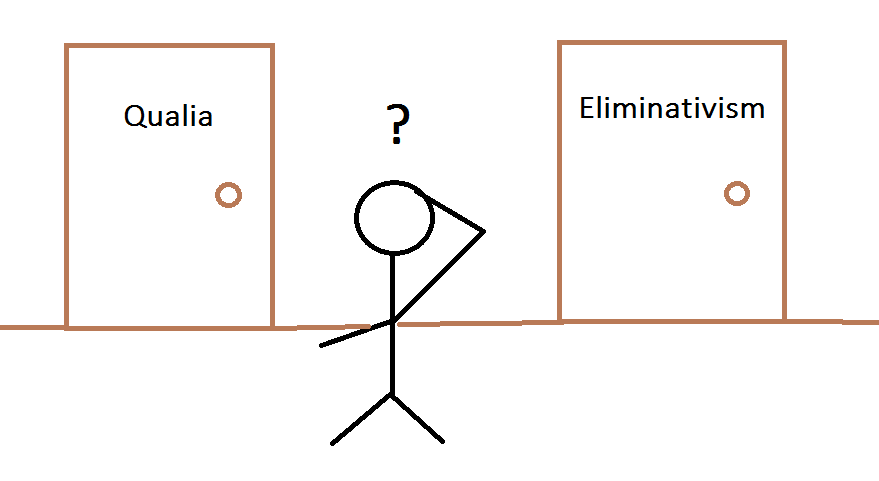

The hard problem of consciousness is a vexing topic, even if one suspects, as I do, that there is ultimately no explanatory gap between physical processes and consciousness (type-A physicalism). I'm not certain of my stance because the topic bumps up against human cognitive limitations, and many smart people disagree with qualia eliminativism.

and many smart people disagree with qualia eliminativism.

One reason it's important for altruists to understand phenomenal consciousness is that, if it did exist in the way many philosophers characterize it, it would overhaul our metaphysical assumptions and might lead to radical changes in other areas. But the more direct reason it matters (the reason I've pondered the topic so much) is that its resolution seems crucial in deciding which organisms or other physical systems matter morally and to what degrees. If we want to reduce conscious suffering, we need to know which entities are conscious.

But why do we care about consciousness in the first place? Depending on the answer, we may be able to prudentially favor one or another position on consciousness even without epistemically working out the truth of the matter.

Prudential argument for qualia

Suppose you're convinced that moral value derives from phenomenal experience as philosophers describe it—something over and above mere physical processing. In other words, philosophical zombies lack any intrinsic moral importance.

If eliminativism is true, then all organisms are zombies. Since phenomenal consciousness doesn't exist, nothing in the universe matters. Assuming we can't influence other possible worlds where phenomenal consciousness does exist, then nothing we do matters. Hence, in our expected-value calculations, the branch of possibilities where qualia don't exist evaluates to zero no matter which action we take, so only the branch where qualia exist can distinguish better actions from worse ones. This means we should act as if qualia do exist.
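The expected-value step can be written out explicitly. This is a sketch using notation introduced here for illustration, not the author's: let q be our probability that qualia exist, and for each action a let U_Q(a) be its value if qualia exist and U_Z(a) its value if they don't. The wager's premise is that U_Z(a) = 0 for every action:

```latex
\mathbb{E}[U(a)] = q\,U_Q(a) + (1-q)\,U_Z(a) = q\,U_Q(a) + (1-q)\cdot 0 = q\,U_Q(a)
\qquad\Longrightarrow\qquad
\arg\max_a \mathbb{E}[U(a)] = \arg\max_a U_Q(a) \quad \text{for any } q > 0.
```

Because q is the same positive constant for every action, it scales all expected values equally and doesn't change which action ranks highest, however small q is. That is why the wager recommends acting as if qualia exist even for someone who thinks they probably don't.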

If modal realism is true

I mentioned that this argument depends on our not being able to influence other possible worlds, but even that condition can be weakened. Suppose modal realism is true (all possible worlds exist), or even just that at least one zombie world and one phenomenally conscious world exist. Because the zombie world and conscious world are physically identical, any choices the zombies make are logically mirrored in the conscious world and vice versa. So even if we are zombies, if non-zombie twins exist in other worlds, we can "control" their actions by our actions. But non-zombies should, if they want to be successful, act as if they're not zombies. Therefore, we zombies should act as if we're not zombies to achieve the right mirrored actions in the non-zombie world. Hence, we should act as though we're conscious.

Actually, the preceding paragraph uses slightly misleading terminology. When two systems are logically correlated, it's not the case that one "controls" the other. Rather, they both run in synchrony. In such a case, it may be better to identify "myself" as being the set of all my physically identical copies jointly (thanks to a friend for this point). In this case, "I" would be both conscious and not conscious at the same time, in the sense that some parts of my distributed physical instances would be zombies and some wouldn't.

Similar arguments

The above "qualia wager" is similar to the moral-realism wager: If you only care about morally real truths, then if moral realism is false, nothing you do matters relative to your value system, so you should assume moral realism is true.

A divine-command theorist might also invoke an argument of this type on behalf of theism: I only care about God's commands, so if God doesn't exist, nothing matters, so I should assume God does exist.

But values should be robust

The qualia wager seems fishy to me because, generally, moral values should adapt to ontological crises. Humanity has had many ontological crises so far, and it seems reasonably likely to me that our current understanding of the universe retains many egregious confusions. Values that lock onto fixed ontological assumptions will probably turn out to be vacuous in hindsight, as the example of divine-command theory shows. Likewise, I think the moral-realism wager fails because what we care about still matters to us whether or not moral truths are ontologically fundamental.

So the qualia wager seems too narrow-minded. What if it did turn out that we're all zombies? Would that really make everything unimportant? Life certainly doesn't seem unimportant, so maybe we were making a mistake to only value real phenomenal experience to begin with.

Eliezer Yudkowsky quotes the following as what he calls the "Litany of Gendlin":

What is true is already so.

Owning up to it doesn't make it worse.

Not being open about it doesn't make it go away.

And because it's true, it is what is there to be interacted with.

Anything untrue isn't there to be lived.

People can stand what is true,

for they are already enduring it.

Even given eliminativism, there is something we care about—the thing that we poetically call "the feeling of what it's like". So when we learn that this thing is best seen as functional processing, it seems natural to redirect our caring towards functional processing.

An example might be someone who thought she cared about following God's commands as described by Jesus. Then when she learns that God doesn't exist, she can still care about loving her neighbor as herself and so on, even without seeing anything metaphysically special about such principles.

Prudential argument for eliminativism

In light of the above, a prudential wager might run as follows: Whether or not qualia exist, we would still care about ourselves and others. Therefore, maybe what we actually care about is something other than qualia. If so, whether qualia exist or not is irrelevant, so we can act as though qualia don't exist.

It's important to note that the eliminativist argument is only suggestive. Even if we would care about zombies if eliminativism is true, we could still care about qualia and not zombies if eliminativism is false, because what we care about is allowed to adjust to the actual facts on the ground. For instance, even if it turns out that people outside my field of vision don't actually exist, I can still care about them if they do exist and only stop caring if I become certain they don't. So it's consistent to give lexical concern to qualia and, only if those don't exist, then fall back to caring about zombies.

That said, an eliminativist can also probe why we think we care so much about qualia. Why not actually care about all the physical processes going on, including mental states representing hopes, beliefs, fears, and so on? Zombies care dearly about themselves; why does that not matter?

Daniel Dennett, Consciousness Explained (p. 450):

But why should it matter, you may want to ask, that a creature's desires are thwarted if they aren't conscious desires? I reply: Why would it matter more if they were conscious—especially if consciousness were a property, as some think, that forever eludes investigation? Why should a "zombie's" crushed hopes matter less than a conscious person's crushed hopes? There is a trick with mirrors here that should be exposed and discarded. Consciousness, you say, is what matters, but then you cling to doctrines about consciousness that systematically prevent us from getting any purchase on why it matters.

Bryce Huebner notes that our intuitions about whether zombies matter may depend on how much we interact with them:

I do not want to deny that we have initial intuitions about the capacities of various entities to be in various mental states. However, when we are faced with an entity that is unfamiliar, these intuitions are typically submitted to feedback from our social environments that allow us to evaluate the normative acceptability of our ascriptions. If I judge that an entity is not capable of feeling pain, for example, but then I come to interact with that entity and it displays a lot of pain behavior, I am likely to revise my judgment.

Huebner points to the empathy that viewers develop for the replicants of Blade Runner as an example of this. (That said, replicants in our world may not be actual zombies. Even philosophers who use zombie arguments often contend that zombies are only possible in some worlds, not in our world.)

How much do qualia matter on various non-reductionist views?

Suppose the correct theory of consciousness is a kind of epiphenomenal property dualism in which physical brain processing occurs just as eliminativists say it does, but there's also a non-physical process that converts the most salient of those physical processes into phenomenal experiences, by applying some "consciousness gloss" to physics. This seems to me the most elegant formulation of epiphenomenalism—more elegant than a parallelist hypothesis that creates a whole separate realm of phenomenal experiences that evolve in synchrony with physics for some unspecified reason.

In this case, we might ask: Why does the consciousness-glossing operation matter? It seems like most of the work is being done by physics itself. Shouldn't physics then deserve the bulk of our moral sympathies? On an epiphenomenalist account, our evolved "caring" impulses should be directed toward physical processes, since only physics affects Darwinian survival—unless it's postulated that our evolved capacity for reason allowed us to deduce the existence of qualia and thereby realize that we actually want to care about those instead.

An epiphenomenalist might also reply that it's our conscious experiences of caring that matter, not our physical caring algorithms, and those conscious experiences of caring are directed at qualia. But are they? If consciousness is only a gloss over physics, then our conscious experiences are directed at whatever our physical brains direct them at. In order for consciousness to have an independent life of its own, there would need to be additional laws specifying the dynamics of how phenomenal experience behaves when it acts independently of physics.

Other non-reductive theories of consciousness that postulate different ways in which consciousness arises might get a different moral evaluation. For instance, a theory in which qualia are more complex than in the gloss-on-physics account sketched above might elicit more intuitive caring about qualia, because more complex qualia would be doing more independent "work". On the other hand, theories where qualia play a more active role are also much less plausible on account of Occam's razor.

My take

I'm not sure how much I buy the eliminativist argument that zombies matter just as much as conscious beings if qualia do exist. My moral views are heavily shaped by emotions, and I do have a strong emotional sense that only qualia matter. I could examine how such an emotion evolved, why it's arbitrary-seeming, and so on, but I can do that for all of my moral views, and at the end of the day, my raw emotions tend to get a lot of weight. (Otherwise I would float toward moral nihilism.)

So I'm not fully convinced by the eliminativist moral argument, though it does incline me to care to some degree about zombies even if qualia exist.

Mostly I care about whatever the best interpretation is of what I'm pointing at when I talk about "my conscious experiences" and "others' conscious experiences". I expect the closest referent for these gestures will turn out to be physical operations of various kinds, but if qualia do exist and my gestures really do point at them, then I would care largely about qualia.

Arguments based on how much humans can know

The preceding arguments focused on what would follow if we cared only about qualia (the qualia wager) or only about physical function (the eliminativist wager), deriving conclusions based on what entities would matter to us. But another relevant prudential consideration is how much impact we can have toward our goals. This is in turn influenced by how much knowledge we can reliably accumulate about metaphysics.

Pro-qualia argument: Less cognitive closure

Eliminativism relies on the hypothesis that when humans intuit the existence of an explanatory gap for consciousness, they're making a mistake: Actually there is no gap, but we persist in thinking there is one due to the limitations of our minds.

But if we make metaphysical mistakes about consciousness, presumably we do so about other big questions as well. If we make enough metaphysical errors, or if we make even one big metaphysical error, "then all our best efforts might be for naught—or less" (to quote Nick Bostrom).

So we should prudentially assume that our intuitions have a good grasp on metaphysics, which includes our intuition that there's an explanatory gap.

Pro-eliminativist arguments: Less mystery, better grasp on other minds

In response, an eliminativist can make an opposite claim: If the explanatory gap does exist, then neuroscience and philosophy seem to be butting up against a great mystery. In contrast, if the explanatory gap is just an illusion, then this suggests a better track record for the march of science and human understanding.

Moreover, suppose consciousness is a "real thing". Then we can never know for certain which entities have it (the problem of other minds). In contrast, if eliminativism is true, then we can in principle understand all there is to know about physical systems, and the only question that remains is a moral one ("how much do we care about this system?") rather than an epistemological one ("is this system really conscious?").

Note that these arguments are only compelling to qualiaphiles who would care at least somewhat about zombies if eliminativism turned out to be true.

What would I do differently if qualia existed?

Most of my more philosophical writings on consciousness assume eliminativism about qualia, since I think eliminativism is the most likely answer to the hard problem. But I'm not confident of this stance, and I should evaluate the sensitivity of my beliefs to this question. How would my views and policy recommendations change if consciousness were a "real", fundamental property?

Maybe not too much. Most of my arguments for why a certain class of agents or processes deserves moral consideration are phrased as "We should care a little bit about simple computations", but similar sorts of conclusions would follow from arguing that "Simple computations have a small probability of actually being conscious". For a given entity e, the eliminativist's degree of caring, d(e), may not always match the qualia-realist's probability of sentience, p(e), but the two quantities presumably mirror each other enough that the same broad sorts of conclusions would follow either way. Of course, there might be some divergences, such as if I care a lot about some feature of a mind that a qualia-realist would claim has no effect on the probability of its sentience.

My views might shift somewhat on the question of suffering in fundamental physics if I were a qualia realist. Currently I only give a bounded amount of my total moral concern to quarks and leptons because I feel emotional sympathy for bigger creatures and would feel it wrong to totally ignore clear cases of animal suffering in an effort to maximally avert electron suffering. I'm okay with this kind of stance because I know that the qualia of electrons aren't "real" anyway. If instead I thought electrons really might be able to suffer in an objective sense, I might feel more compelled to "shut up and multiply" when trading their feelings off against the feelings of bigger animals. That said, such a conclusion would not be inevitable. Even if quark qualia were real, maybe they would feel very unlike anything I'm familiar with, and therefore, maybe I would still feel comfortable giving them dramatically less weight than the qualia of animals and robots.

Also, if qualia are real, then it could be that eventually we'll become nearly certain of whether, say, atoms are sentient, and if atoms seem not to have qualia, the probability of their sentience would drop to essentially zero. In contrast, with an eliminativist approach, if I care to some tiny degree about atoms, that caring will remain in place indefinitely unless I change my mind due to moral progress (or moral devolution). Of course, if qualia are really non-physical, we may never know for certain what things have them, in which case a nonzero probability of atom sentience may persist even if qualia do exist. (Part of Ned Block's Harder Problem of Consciousness is the idea that it doesn't seem we can know for certain whether beings physically unlike ourselves are conscious: "phenomenal realism says that the issue is open in the sense of no rational ground for belief either way.")

Acknowledgements

Some ideas in this piece were inspired by a discussion with Jonathan Erhardt.