Summary

Neuroscientists tell us that many of the brain's functions can happen unconsciously. This leads us to ask: What brain operations are crucial for consciousness? Are these the only ones that matter morally? Attempts to define a clear, binary division between unconscious and conscious processing seem somewhat arbitrary given that the underlying neural mechanics are fairly continuous. Because consciousness doesn't magically appear at a single point but involves many steps occurring jointly, it may make sense to care about the series of steps as a whole, at least in some crucial window of time when broadcast information is most widespread and when downstream processes are first responding to it.

Note: This piece is not terribly original and echoes many ideas from Daniel Dennett. For example, Dennett says: "Consciousness is smeared spatially and temporally" across the brain.

Contents

- Summary

- Epigraph

- Introduction

- Example: Tack in your foot

- Two ways to draw a line

- No single "magic" point

- Consciousness is complex

- Holism?

- Caring is complex responses

- Do "unconscious" subcomponents matter?

- Illusion of atomicity

- What is pain?

- Why is pain so painful?

- The hard problem as ineffability

- Related discussions

- Appendix: Diagramming reportability

- Acknowledgments

Epigraph

"Consciousness is as consciousness does." --Güven Güzeldere, "Consciousness: What It Is, How to Study It, What to Learn from Its History" (1995)

Güzeldere presents this epigram as one of two approaches to consciousness, the other being "consciousness is as consciousness seems." But as Güzeldere notes, these two are not in opposition. I see them as the same elephant being felt by two blind men. Understanding consciousness as being "what consciousness does" is the heart of this essay.

Introduction

To most of us, it feels subjectively obvious that phenomenal consciousness has a clear cutoff. Our brain is definitely conscious now, but our toe is definitely not conscious, nor is a rock on the ground. Our attitudes toward other agents also reflect a binary distinction. We ask when a fetus becomes conscious, whether a given animal is conscious, and whether a person in a vegetative state is conscious.

Courses in psychology and cognitive science teach that even many parts of our own brains are not conscious, including habitual movements, implicit learning, implicit memory, and various other forms of automatic unconscious cognition.

The study of the neural correlates of consciousness identifies which brain processes seem essential for conscious awareness and which can operate "in the dark." Phenomena like blindsight illustrate that people can perform sophisticated behaviors, such as avoiding obstacles in their paths, without reporting awareness of what they're seeing. Researchers have claimed to distinguish consciousness from most other cognitive abilities, including attention.

In "On a confusion about a function of consciousness", Ned Block distinguishes "phenomenal consciousness", the (morally relevant) feeling of what a state is like, from "access consciousness", information available in one's mind that's ready to be used for speech or action. In reply, Michael A. Cohen and Daniel C. Dennett argue ("Consciousness cannot be separated from function") that a conception of phenomenal consciousness that's not accessible cannot be studied scientifically.

So is it sensible to adopt a view on which phenomenal consciousness has a definite boundary, in which some brain processes instantiate it and others do not? Of course, there's not an objectively correct answer to this question; rather, this is ultimately a semantic and moral issue. But it's still a crucial issue, because it has significant implications for what kinds of beings we regard as ethically important under a sentiocentric axiology.

Example: Tack in your foot

Consider the following sequence of events:

1. You hear about the country Eritrea and realize you don't know where it's located.

2. You decide you need to learn geography, so you buy a world map to hang in your bedroom.

3. You use tacks to hang up the map, and one of them happens to fall on the floor, unbeknownst to you.

4. Three days later, you accidentally step on the tack, and it lodges in your foot.

5. The mechanical damage of the tack in your skin tissue triggers nociceptors in your foot to fire.

6. The nociceptors relay the signal, via a chain of neurons, from your foot up toward your head.

7. Each neuron passes along information by first receiving inputs on its dendrites.

8. The neuron sums those signals at its axon hillock.

9. If the summed input is strong enough to cross a threshold, this starts a cascade of Na+-driven depolarization down the axon (an action potential).

10. Eventually the signal reaches the axon terminal, where neurotransmitters are released into the synaptic cleft.

11. The neurotransmitters diffuse across the cleft and bind to post-synaptic receptors (the number of these receptors is one factor determining the connection strength between the pre- and post-synaptic neurons).

12. This process of neuron-to-neuron transmission continues until signals reach your brain.

13. The signals are processed by sensory neural networks, which transform the lower-level stimuli into a higher-level assessment of "damage."

14. The high strength of the neuronal firing allows this damage assessment to propagate widely to neighboring brain regions and eventually throughout major parts of the brain. (This is the "global broadcast" step in global workspace theory.)

15. The damage assessment joins with parts of the cortex that maintain high-level representations, and you develop the realization that your foot hurts, by binding the damage assessment with neural representations of yourself, your foot, etc.

16. This message is sent to speech brain regions.

17. Your speech centers develop the sentence "Ouch, my foot!" which is sent to muscles in your mouth, allowing you to scream it.

18. In the meanwhile, the information about damage is being stored in memory.

19. Also in the meanwhile, the damage information is relayed to your basal ganglia in order to trigger reinforcement learning against the sequence of actions that led to your stepping on the tack.

20. Also in the meanwhile, your sensorimotor cortex and subcortical regions are planning motor commands to address the situation. They decide that you should pull the tack out with your hand, so they coordinate hierarchically organized muscle movements in your leg to lift up your foot and in your hand to pull out the tack. Locating the tack is done by a sensory integration of tactile stimuli and visual inspection.

21. Also in the meanwhile, your brain's fight-or-flight response is being activated, causing the adrenal medulla to produce norepinephrine and epinephrine, which flow out into your blood, triggering a number of downstream effects. Some of these effects you can feel via interoceptive neurons that relay the information back to the brain and create a cortical representation of your internal bodily state in your insula.

22. Also in the meanwhile, there are dozens of other neural and somatic responses triggered by the injury.

23. You get the tack out, throw it away, and proceed to nurse your hurting foot.

24. You decide never to use tacks again and check that you had a tetanus shot in the last 10 years.

(I think the neuroscience details here are mostly accurate, but it's possible I got a few points wrong, so don't use this to study for your exams!)
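Steps 7 through 12 can be caricatured as a chain of threshold units. The Python sketch below is a deliberately crude, hypothetical model (the function names, weights, and threshold are illustrative assumptions, not physiological values): each unit sums its weighted inputs, with negative weights standing in for inhibitory synapses, and emits an all-or-nothing spike only if the sum reaches the threshold.

```python
# Hypothetical threshold-unit caricature of steps 7-12 of the tack example.
# Weights and thresholds are illustrative, not physiological measurements.

def fires(inputs: list[float], weights: list[float], threshold: float = 1.0) -> bool:
    """Sum weighted inputs (steps 7-8) and report whether a spike starts (step 9).

    Positive weights stand in for excitatory synapses and negative weights for
    inhibitory ones; a weight's size loosely reflects connection strength.
    """
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    total = sum(x * w for x, w in zip(inputs, weights))
    return total >= threshold


def relay(chain_weights: list[float], signal: float, threshold: float = 1.0) -> int:
    """Pass a signal down a chain of one-input neurons (steps 10-12).

    A neuron that fires sends a fixed-size spike (1.0) to the next neuron,
    because action potentials are all-or-nothing. Returns how many neurons
    fired before the signal died out.
    """
    fired = 0
    for weight in chain_weights:
        if not fires([signal], [weight], threshold):
            break
        fired += 1
        signal = 1.0
    return fired


# Inhibition narrowly wins the tug of war: 0.8 + 0.6 - 0.5 < 1.0.
print(fires([1.0, 1.0, 1.0], [0.8, 0.6, -0.5]))  # False
# Weaker inhibition lets excitation win: 0.8 + 0.6 - 0.2 >= 1.0.
print(fires([1.0, 1.0, 1.0], [0.8, 0.6, -0.2]))  # True
# The third synapse is too weak, so only two neurons in the chain fire.
print(relay([1.5, 1.2, 0.9, 2.0], signal=1.0))   # 2
```

Real neurons integrate inputs over time and across the dendritic tree, which this sketch ignores; it only illustrates that each step is "signals leading to more signals."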

Now comes the million-dollar question: Where in this sequence did you become "conscious" of the pain in your foot? In other words, at what point did something morally unfortunate happen? Different theories of consciousness will give different answers. The global-workspace theory locates the beginning of conscious pain at step 14. Higher-order theory, which says that consciousness consists in thoughts about one's experiences, might say the crucial cutoff was step 15. Various viewpoints based on neural correlates of consciousness would place consciousness at whatever stage(s) activated the brain structure(s) that those theories consider important—e.g., recurrent cortical processing; this probably happens around steps 14-15 depending on the theory. A pure behaviorist or philosopher who insists that speech is crucial for sentience would locate the relevant juncture at step 17. An economist measuring revealed preferences might focus on steps 23-24, including your lack of future tack purchases at the office-supply store.

Most consciousness-focused views cluster within a few steps (14-15), though they disagree on the exact cutoff points. Debating exactly where within that cluster of steps consciousness emerges is arguably irrelevant for many real-world ethical questions involving normal adult humans, because a typical human goes through all of these steps during injury. Where these questions become crucial is in border cases—including humans in a fetal or vegetative state, as well as what those assessments imply about animals or machines that may have some but not all of the neural responses that a regular human has.

Two ways to draw a line

Scientists and philosophers typically define the cutoff for consciousness in one of two ways:

- Reportability: What brain processes, when removed, prevent the patient from indicating his experience of the event? Those processes are then assumed necessary for consciousness.

- Introspection and theoretical fiat: Researchers may decide that a given brain process just looks like the right kind of thing to be consciousness and may have properties that we can observe in our own consciousness, like being serially ordered, correlated with attention and memory, self-reflective, etc.

Reportability

Reportability has the virtue of allowing us to step outside our intuitions. For instance, few people predict based on untutored introspection that something like blindsight would be possible. In this way, digging further into the precise neural correlates of reportable consciousness has a lot to contribute to philosophy. That said, this approach is fundamentally biased to focus on what can be reported.

Typically reporting is done verbally, which works well enough for healthy adult humans. Patients with damage to speech abilities could report via gestures instead. Many locked-in patients can't speak or gesture but are able to report information via voluntary eye movements, so their communications can be included under the umbrella of reportability as well. Vegetative patients can't voluntarily move anything, but one in five may be able to communicate by responding to questions with deliberate thoughts whose patterns of brain activity can be viewed using fMRI.

We see that the limit of reportability can keep getting pushed further back. A next step might be to suggest that actively choosing what to think about in response to a question isn't essential, so long as, when we speak to the patient, her brain shows some kind of non-random fMRI response indicating that she registered the statement. But is it possible that certain verbal cues could reflexively trigger certain responses in a way that people intuitively consider "unconscious"? How do we know when we've become too liberal in assessing what counts as a report? And conversely, how can we detect reports from vegetative patients who have lost the ability to hear or read words but are still conscious in other domains?

While the reportability approach seems more "objective" and admirably aims to challenge our intuitions, it also lacks a basis for setting the definition of reportability at any particular place. See "Appendix: Diagramming reportability" for further discussion.

Introspection and theory

Theoretical definitions of consciousness allow for more elegance in our views. They can also help extend consciousness assessments beyond the normal adult humans or other primates on whom neural-correlation experiments are often done. But of course, they lose out on the potential for counterintuitive findings from empirical research. Obviously some blend of reportability studies with theory is the best way to go.

Still, even theoretical approaches often make seemingly arbitrary distinctions. For instance, in the global-workspace view, consciousness consists in a global broadcast throughout corticothalamic networks, triggering significant updates to cognition, action, memory, and other functions in various brain regions. But does it need to be a fully global broadcast? What if there were a strong coalition of neurons that allowed for substantial changes in its local area but didn't project throughout the brain? Shouldn't it be at least somewhat conscious, because it got attention and made updates to other brain regions? And if so, we can keep going lower. Small neural signals trigger small updates at least in their neighborhoods, even if they don't extend further. For that matter, reflex arcs that are processed in the spinal cord without reaching the brain still carry information and trigger neural updates. Even a single neuron represents a tug of war between excitatory and inhibitory inputs, and one of those two sides "wins" in a binary fashion, determining whether the neuron fires an action potential "broadcast" that has effects on downstream neurons. Why aren't these systems marginally conscious? Is there a particular kind of update that needs to be triggered by the broadcast before the broadcast "counts"? Presumably that definition too will involve ambiguity: we could potentially see, in so-called unconscious brain operations, simpler instances of whatever processes are deemed relevant for consciousness in the brain-wide broadcast.

Similar sorts of ambiguity in cutoff points arise for most other theoretical views on consciousness.
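The question of how "global" a broadcast must be can be illustrated with a toy model. In the hypothetical Python sketch below (the decay rate, threshold, and number of regions are arbitrary assumptions, not anatomy), a coalition's influence shrinks by a constant factor with each hop, and a region counts as "updated" if the influence reaching it crosses a threshold.

```python
# Hypothetical toy model of how far a neuronal coalition's "broadcast" reaches.
# Parameters (decay, threshold, number of regions) are arbitrary assumptions.

def regions_updated(strength: float, n_regions: int = 20,
                    decay: float = 0.7, update_threshold: float = 0.1) -> int:
    """Count regions, at hop distances 0..n_regions-1, that the coalition updates.

    Influence shrinks by a factor of `decay` per hop; a region is updated if
    the influence reaching it is at least `update_threshold`.
    """
    updated = 0
    for distance in range(n_regions):
        if strength * decay ** distance >= update_threshold:
            updated += 1
    return updated


for strength in (0.05, 0.2, 1.0, 10.0, 100.0):
    print(f"strength {strength:>6}: {regions_updated(strength)} of 20 regions")
```

With these parameters, reach climbs from 0 regions to 2, 7, 13, and finally all 20 as strength grows. Nothing discontinuous happens along the way: "fully global" is just the top of a staircase, and where to say a signal starts to "count" as a broadcast is a choice we impose on the model.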

No single "magic" point

Neural-correlation definitions of consciousness can eschew ambiguity about where consciousness begins and ends by declaring particular structures as the finish lines. But these finish lines seem arbitrary, are based on a disputable definition of reportability, and aren't easily extended to alternate nervous systems (not just insects and fish but even birds). Theoretical views on consciousness are more graceful in their application to many types of brains, but for the same reason, they also raise issues of unclarity about where consciousness begins and ends, because the nervous system does fundamentally similar things in many places. There's not a single point where it stops its normal function and does magic.

Dennett characterizes the idea of a "magic consciousness point" as the "Cartesian theater":

Cartesian materialism is the view that there is a crucial finish line or boundary somewhere in the brain, marking a place where the order of arrival equals the order of "presentation" in experience because what happens there is what you are conscious of. [...] Many theorists would insist that they have explicitly rejected such an obviously bad idea. But [...] the persuasive imagery of the Cartesian Theater keeps coming back to haunt us--laypeople and scientists alike--even after its ghostly dualism has been denounced and exorcized.

David Papineau echoes this point in "Confusions about Consciousness":

Much of the confusion about consciousness is generated by lack of clarity on the issue of dualism. The majority of scientists who are caught up in the current excitement about consciousness studies would probably deny that they are dualists, if the question were put to them explicitly. But at the same time I think that many of them are closet dualists. They strive to resist the temptations of dualist thinking, but as soon as their guard drops they slip back into the old dualist ways. The very language in which they normally pose the problem of consciousness gives the game away. "How can brain states 'give rise' to conscious feelings?" "How are conscious states 'generated' by neural activity?" The way these questions are phrased makes it clear that consciousness is being viewed as something extra to the material brain, even if the official doctrine is to deny this.

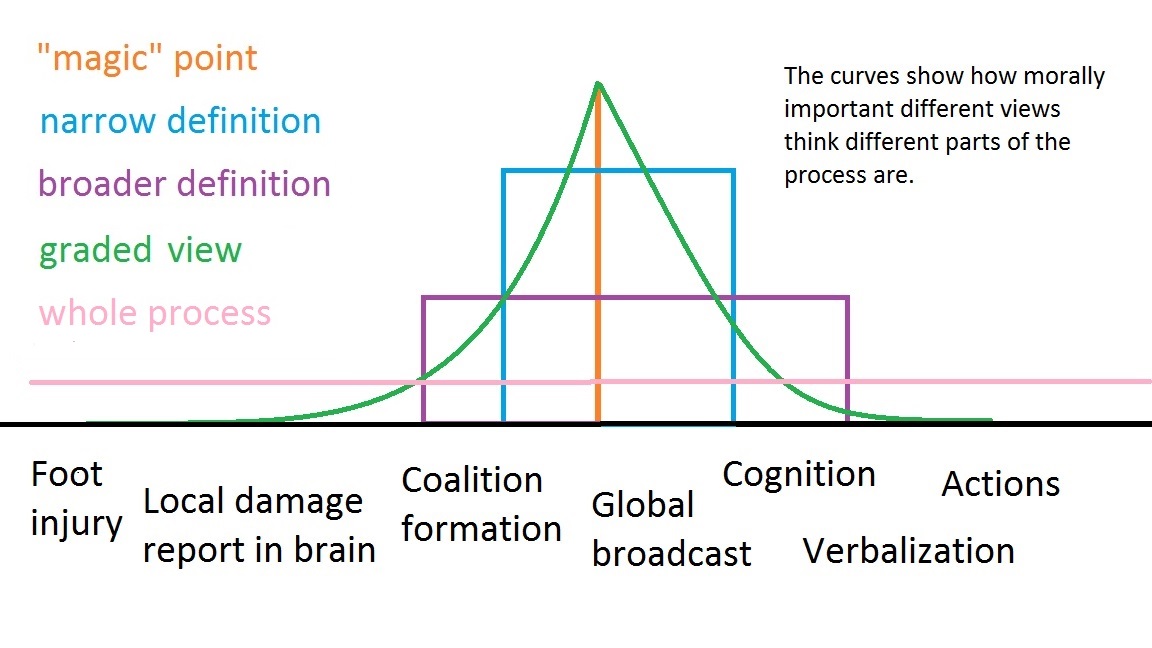

In response to this realization, we might instead adopt a viewpoint that considers many parts of the process important, at least clustered around the central computations that seem most crucial (probably steps 14-15 of the tack example). The following figure shows a range of possible viewpoints we could adopt regarding how morally relevant different parts of the injury-feeling process are.

The orange approach tries to define a single cutoff point before which everything is "unconscious" and after which everything is just morally irrelevant reporting of the "conscious" event. The blue and purple boxes give moral weight to larger segments of the process. The green curve makes the valuation more continuous, recognizing that there's no hard cutoff point where something "special" begins—after all, it's just signals leading to more signals and information being reorganized in various ways. Of course, if the curve doesn't drop fully to zero around the edges, this signs us up for caring at least a tiny bit about nociception in the foot.
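To make the contrast between these views concrete, here is a hypothetical Python sketch that encodes each one as a weighting function over the 24 steps of the tack example. The exact cutoff (step 14), the box ranges, the bump's width, and its floor value are assumptions for illustration, since the figure's precise shapes aren't given in the text; the pink view, discussed next, is modeled as equal weight on every step.

```python
import math

STEPS = range(1, 25)  # the 24 steps of the tack example


def orange(step: int) -> float:
    """Single cutoff: one step carries all the weight (assumed here: step 14)."""
    return 1.0 if step == 14 else 0.0


def box(lo: int, hi: int):
    """Return a flat weighting over steps lo..hi, like the blue and purple boxes."""
    def weight(step: int) -> float:
        return 1.0 if lo <= step <= hi else 0.0
    return weight


def green(step: int, center: float = 14.5, width: float = 3.0,
          floor: float = 0.01) -> float:
    """Smooth bump peaking at steps 14-15 that never drops fully to zero,
    so foot nociception (step 5) still gets a tiny weight."""
    return floor + (1.0 - floor) * math.exp(-((step - center) / width) ** 2)


def pink(step: int) -> float:
    """Every step in the whole sequence counts equally."""
    return 1.0


def share(weight, lo: int = 14, hi: int = 15) -> float:
    """Fraction of a view's total weight that falls on steps lo..hi."""
    total = sum(weight(s) for s in STEPS)
    return sum(weight(s) for s in STEPS if lo <= s <= hi) / total


VIEWS = {
    "orange": orange,
    "blue": box(13, 17),   # assumed range
    "purple": box(9, 22),  # assumed range
    "green": green,
    "pink": pink,
}

for name, weight in VIEWS.items():
    print(f"{name:>6}: {share(weight):.2f} of total weight on steps 14-15")
```

The share of weight on the central steps falls steadily as we move from the orange view toward the pink one, which is one way to see these views as points on a spectrum rather than rival all-or-nothing answers.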

The pink curve represents a view according to which the suffering process per se is less relevant than the overall sequence of events. This unintuitively suggests that just the tack breaking skin matters as much as the neural broadcasting of that information; probably few people would subscribe to such a view. That said, ethical views that value more than hedonic sensations in the brain could extend moral relevance to some operations outside the body. For instance, preference utilitarians might disvalue the process of violating someone's preferences even if the person with violated preferences never finds out.

Consciousness is complex

The absence of a single "consciousness point" underscores a broader observation about consciousness: It's really complicated! We have 85 billion neurons doing so many different processes that neuroscientists can publish 60,000 papers per year and still only scratch the surface of understanding how the whole thing works. 85 billion is 34 times the number of seconds in an 80-year human life. Like societies and ecosystems, brains are so complex that they resist simple models of function. We're accustomed to hearing theories like "process X is what consciousness is" for various kinds of X, but in fact, there may not be a single X describable in one or two English sentences that captures the full depth of what we want to refer to when we speak about our conscious minds. The intuition that consciousness can't be explained by mere physical algorithms playing out does get something right when it finds any single, unidimensional account of consciousness unsatisfactory. But when we put all 85 billion neurons together, the resulting neural collective is so sophisticated that I think these anti-materialist intuitions no longer apply. To rephrase Arthur C. Clarke's Third Law: "A sufficiently advanced physical brain ecosystem is indistinguishable from a magical dualist-like soul." There are whole little worlds inside our heads. It's no wonder our memories can be so extensive, our personalities so complex, and our inner lives so deep.
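The "34 times" comparison is easy to check. This short Python sketch assumes an average year of 365.25 days and uses the essay's round figure of 85 billion neurons.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # average year, counting leap days
lifetime_seconds = 80 * SECONDS_PER_YEAR   # an 80-year life: about 2.52 billion s
NEURONS = 85e9                             # the essay's round figure

ratio = NEURONS / lifetime_seconds
print(f"{lifetime_seconds:.3g} seconds; ratio = {ratio:.1f}")  # ratio = 33.7
```

The ratio comes out to about 33.7, which rounds to 34.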

Usually, when we see an object or hear a word, its 'meaning' seems simple and direct. So we usually expect to be able to describe things without having to construct and describe such complicated cognitive theories. This fictitious apparent simplicity of feelings is why, I think, most philosophers have been stuck for so long [...]. When a mental condition seems hard to describe, this could be because the subject simply is more complicated than you thought.

Appreciating the complexity of consciousness helps us see why trivial computer programs don't have much moral importance, even if they're gerrymandered in ways that superficially seem very important for utilitarians. For example, suppose we have a simple program that has a variable pain_level that can be set at various values. This variable produces extremely simple response behaviors, like the program printing "Ouch, make it stop!" every second until pain_level is decreased below 5. Now suppose we increase pain_level to an extremely large number. Is this very bad? No. For a functionalist like myself, pain is as pain does. The more sophisticated the behavioral/functional responses of a system to a negative stimulus, the more morally serious its pain is. This simple computer program, though superficially in great amounts of pain, has an extremely limited repertoire of responses and thus can rightly be said to have an extremely tiny (and basically morally trivial) degree of pain.
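The trivial program described above might look like the following minimal Python sketch. The names `respond`, `run`, `repertoire`, and `THRESHOLD` are hypothetical; only `pain_level`, the printed sentence, and the cutoff of 5 come from the text. What it makes concrete is that raising `pain_level` to an astronomical value adds nothing to the set of behaviors the program can produce.

```python
import time
from typing import Optional

THRESHOLD = 5  # from the text: the program stops complaining below 5


def respond(pain_level: float) -> Optional[str]:
    """The program's whole behavioral repertoire: one fixed sentence, or silence."""
    return "Ouch, make it stop!" if pain_level >= THRESHOLD else None


def run(pain_level: float, ticks: int = 3, delay: float = 1.0) -> list[str]:
    """Print the complaint once per tick (every `delay` seconds), as described.

    pain_level is held fixed here; in the text's scenario someone would lower
    it from outside, at which point the program falls silent.
    """
    outputs = []
    for _ in range(ticks):
        message = respond(pain_level)
        if message is None:
            break
        print(message)
        outputs.append(message)
        time.sleep(delay)
    return outputs


def repertoire(levels) -> set:
    """Every distinct behavior the program produces across the given levels."""
    return {respond(level) for level in levels}


# A modest and an astronomically large pain_level yield the same single behavior.
print(repertoire([5, 1_000, 10**100]))  # {'Ouch, make it stop!'}
```

However large the number stored in `pain_level`, the program's functional response is identical, which is why a functionalist would assign it only a trivial degree of pain.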

Degrees of understanding

Do chatbots understand language? This debate depends on how we define "understanding", but given common-sense conceptions of "understanding", I think present-day chatbots don't (appreciably) understand English. However, this isn't because of a fundamental difference between biological and artificial minds; rather, it's because humans have much more context, can see novel connections, and so on. To "understand" something involves:

- activating associated concepts

- recalling memories of the thing

- being able to combine relevant ideas in novel ways

- describing these thoughts clearly and coherently.

These are hard computational problems, and present-day chatbots don't really solve them. But this doesn't mean they're not soluble in principle. If the right algorithms were implemented, computers would indeed "understand" language.

In any case, there are many instances where people don't really understand what they talk about either. Perhaps someone has heard a few talking points about a highly technical issue without being able to say anything more on the topic. This person would be in a position similar to a chatbot's: they could give some rote replies but couldn't engage in novel discussion or associative thinking about the topic.

[Daniel Dennett] recalled a time, many years ago, when he found himself lecturing a group of physicists. He showed them a slide that read “E=mc²” and asked if anyone in the audience understood it. Almost all of the physicists raised their hands, but one man sitting in the front protested. “Most of the people in this room are experimentalists,” he said. “They think they understand this equation, but, really, they don’t. The only people who really understand it are the theoreticians.”

“Understanding, too, comes in degrees,” Dennett concluded[...].

Van Gulick (2006) echoes (p. 19) that "Organisms can be informed about or understand some feature of their world to many varying degrees." Van Gulick (2006) suggests (p. 20) that deep understanding is "a capacity to bring the information to bear in a more or less open-ended range of adaptive applications, including many that depend on combining it with other information in ways sensitive and appropriate to their respective contents. The wider the range of the possible applications and the richer the field of other contents within whose context the particular item’s significance is actively linked, the more the organism can be said to understand the meaning of the relevant information."

Holism?

If we zoom in on any single part of the process of stepping on a tack, those neural operations alone look trivial. Some neurons here receive an input signal, so they fire rapidly to turn on some neurons downstream. A group of neurons from one brain region form a connection with those from another brain region. And so on. By themselves, these firing and synchronizing neurons seem totally meaningless; they could represent anything, and changes in the structure of network-graph activation can be seen all over nature and computer science. These computations only matter because of the context in which they're embedded: the larger process of the organism receiving negative input signals, recognizing them, and triggering responses. We seem to care about the holistic picture of the situation, not just one individual network-graph transformation that it may contain.

How much holism is important? Presumably the entire sequence, from stepping on the tack through taking it out, doesn't need to occur for the experience to be morally relevant. Even the foot injury isn't needed; the event would be seemingly just as bad if the brain's "damage"-assessment network was stimulated directly. On the other hand, directly stimulating the "Ouch, my foot!" verbal response would not really matter, because that comes too late in the process. What about directly setting up a network configuration corresponding to thoughts of injury without requiring it to "form organically" via broadcasting? (Admittedly this would be hard to do in practice, but we can imagine it conceptually.) At some point we get into a tricky middle ground where holism intuitions about the process seem relevant.

Caring is complex responses

So far I've made general arguments about the arbitrariness of setting any single, hard cutoff for a process that's inherently multifaceted and perhaps holistic. But there's another way to argue that more than just a single point in the process matters. When you say, "I care about my conscious experience," what does that mean? What does it look like to "care about" something? Caring about something is the suite of responses that your brain and body have to it: all the various updates to thought, memory, action inclination, network organization, hormone levels, heart rate, etc. that occur as a result of that thing. These changes are brought about by the process of broadcasting information and triggering neural updates, so taken at face value, that whole process is what you care about.

Of course, this statement may be too literal. A person might say she really cares about her children's wellbeing, and information broadcast to her brain is just an intermediate step in that process. This is a fair response, and I agree that we can describe our goals at many levels of abstraction. But I wanted to highlight for those who do focus on brain processes that the collection of responses the brain makes can be seen as an integral part of the picture.

As I see it, feelings are not strange alien things. It is precisely those cognitive changes themselves that constitute what 'hurting' is [...]. The big mistake comes from looking for some single, simple, 'essence' of hurting, rather than recognizing that this is the word we use for complex rearrangement of our disposition of resources.

Subjectively it seems as though different periods and moments of my life have had distinct textures. Sometimes a smell, song, memory, or task will slide me back into "the feeling of being in elementary school" or "the feeling of working in my office at Microsoft" or "the feeling of being with a particular friend". Each of these textures appears almost to be a distinct emotion in its own right. Of course, one might say these are not unique emotions but only complex combinations of simpler emotions, memories, and thoughts—but that's precisely the point Minsky expressed in the preceding quote. What seems like one quale is actually a combination of many components. And presumably more basic emotions are also made of many smaller parts.

Do "unconscious" subcomponents matter?

The green curve in the above figure suggested giving some weight to lower-level nociceptive firings, even before they become more widely recognized. We could motivate this by appealing to the lack of a clear cutoff in the sequence of events where things suddenly start to matter. In addition, why should it matter that the neurons in the foot aren't yet "famous" to the rest of the brain? We could still poetically say they matter to themselves. And they're doing their darndest to take an appropriate action in the situation (namely, raising the alarm bells to higher layers). Of course, this imagery evokes unwarranted anthropomorphism. For instance, the neurons aren't activating a larger network of representations that binds various other features of the environment and internal sense of self in the way corticothalamic regions would. But how relevant is that added network connectivity? If we reduced the corticothalamic connectivity piece by piece, would the importance of the damage-signaling neurons ever drop to zero? Or would it gradually fade without becoming completely irrelevant? And if it fades gradually, does that mean the damage-signaling neurons still matter at least a little bit by themselves?

This might seem like idle discussion, but it has practical implications. One example concerns local anaesthesia, which works by preventing nociceptive neurons from sending their signals up to the brain. Let's imagine a scenario where the anaesthetic is applied between the site of injury and the brain, rather than directly at the site of injury. Obviously the anaesthesia prevents most of the potential morally relevant suffering from tissue damage, but is there any extent to which the nociceptive components alone still matter? If it helps pump the intuition, we could imagine the nociceptive neurons as powerless, voiceless entities that, if hooked up to the appropriate machinery, would scream in pain. But of course, they're not hooked up to such machinery, and maybe that additional machinery itself is what makes them matter at all, as we saw with the holism discussion previously.

This question of the status of lower subcomponents is relevant more generally when we assess non-human sentience, including that of very simple animals and machine agents, who may act more like peripheral ganglia than like a highly integrated central nervous system. Does their aversive reaction not matter because it's not hooked up to a more sophisticated processor? If so, what features does that processor need before the experience matters? If not, do lots of simple processes in nature that are about as complex as nociceptive firing also matter? (For the record, I think many insects have fairly impressive nervous systems and may deserve to be called somewhat conscious regardless of the question about subcomponents.)

Another question is whether we care about neuronal coalitions that form in the brain but don't "win the elections" to be globally broadcast. What if you would have been in pain due to the tack in your foot, but there's a loud "boom" sound behind you that takes away your attention for a second? Does the pain still matter a little bit when you're distracted, even if the "boom" sound wins the election?
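The difference between losing the election outright and still mattering a little can be sketched as two ways of turning coalition strengths into weights. This is a hypothetical Python illustration, not a model from the neuroscience literature: the coalition names and strengths are made up, and the softmax function is a swapped-in device for graded competition. Under hard winner-take-all the distracted pain gets exactly zero weight; under the graded rule it keeps a minority share.

```python
import math

# Hypothetical coalition strengths; the numbers are made up for illustration.
COALITIONS = {"boom": 3.0, "tack pain": 2.0}


def hard_winner(strengths: dict[str, float]) -> dict[str, float]:
    """Winner-take-all: only the strongest coalition gets any weight."""
    winner = max(strengths, key=strengths.get)
    return {name: 1.0 if name == winner else 0.0 for name in strengths}


def graded(strengths: dict[str, float], temperature: float = 1.0) -> dict[str, float]:
    """Softmax weighting: losing coalitions keep a smaller but nonzero share.

    Lower temperatures make the competition sharper; as temperature
    approaches zero, this approaches hard_winner.
    """
    exps = {name: math.exp(s / temperature) for name, s in strengths.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


print(hard_winner(COALITIONS))  # {'boom': 1.0, 'tack pain': 0.0}
print(graded(COALITIONS))       # 'tack pain' keeps about 0.27
```

Because the hard rule is just the low-temperature limit of the graded one, the choice between "the pain doesn't count at all" and "the pain counts a little" amounts to choosing how sharp a cutoff to impose.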

Illusion of atomicity

These ethical questions are hard because they're sort of like trying to fit a square peg into a round hole. Pleasure and pain are not atomic primitives in the world. Rather, they're general concepts that refer to a messy collection of algorithms that can vary on a vast set of dimensions. Collapsing these complex systems into a scalar number representing our valuation is a challenge, especially if we try to push beyond our intuitive, ego-centric assessments of value made by the powerful parts of our brains.

Ultimately nature is one big whole. Physical processes outside the body can trigger processes inside the body, again leading to impacts outside the body. Our customary perceptions of the world draw clear boundaries around the skin of animals, but in actuality the environment makes up a part of who we are, and we even offload some of our mind to external objects in the form of writing, conversation, and the Internet. Within the body, it's even more clear that there's not a hard separation between morally relevant, conscious operations and an irrelevant, unconscious remainder—even though I do think some operations matter much more than others.

Our brains have decent systems built in to construct a sense of our welfare level as something that we can aim to optimize (or at least satisfice). Presumably these cognitive systems are the basis for utilitarianism itself, because utilitarianism takes this selfish welfare-maximization framework and applies it altruistically. But our intuitive assessments of welfare of other minds—or even subcomponents of our own—may not be consistent with a closer understanding of the situation, and they need some refinement.

What is pain?

Consider the person who stepped on a tack; let's call him Bob. Bob's brain generated various internal signals, which resulted in his crying out "I'm in pain!" We could ask Bob: "What is pain?" Bob might reply: "I don't know how to describe it, but pain is this qualitative experience that I'm having now." Bob points to his head. What exactly is Bob pointing at?

When Bob declares himself to be in pain, he does so by generating a verbal statement. So is the verbal statement what Bob is pointing at when trying to locate his pain? Maybe that's part of the answer, but verbalization alone seems not to be the whole story. We could imagine writing a computer program that prints out "I'm in pain!", and this would not be all that Bob is trying to point at.

What about the pre-verbal signaling in Bob's brain? Before Bob generated his verbal output, there were preceding neurons firing to indicate that pain was present. Is the path of pain-signaling neurons that leads from Bob's foot up to Bob's verbal report what Bob is pointing at as his pain? If we only focus on a small set of these pain-signaling neurons, then once again we're looking too narrowly. We could imagine hooking together a set of a few computers that pass along a message to a final computer to print out "I'm in pain!", and again this doesn't seem to capture the depth of what pain is.

What if we don't just point to a linear path from nociceptors to Bob's verbal declaration? What if we also include an associative network of related verbal and non-verbal concepts that get activated along the way? This is indeed closer to an account of pain, but even this by itself may not be the full story. We could imagine a computer that contains a detailed associative network of concepts, in which some nodes like "tissue damage in foot" spread their activation around and ultimately trigger the "pain" node, which then generates a verbal report. If this associative network is sufficiently rich, I think we've made progress toward characterizing prototypical human pain, but even in this case, it seems like there's a lot missing, such as motivation, action, memories, and so on. Without context, the nodes in the associative network don't obviously contain particular meanings. For example, who is to say that the "pain" node in the network is pain rather than some other abstraction (like Node #24819) unless it actually triggers stress responses, avoidance behavior, and so on? (This idea is similar to the symbol grounding problem.)
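To make the worry concrete, here is a minimal Python sketch of such a network. It is a toy under stated assumptions: the node names, edge weights, step count, and report threshold are all hypothetical, chosen only for illustration. Activation spreads from "tissue damage in foot" through a node labeled "pain" to a "verbal report" node. Renaming that middle node to "Node #24819" leaves the behavior exactly the same, because nothing in the program depends on the label.

```python
from collections import defaultdict

# Hypothetical toy network (assumption): names and weights are illustrative,
# not drawn from neuroscience. Each node maps to (target, weight) pairs.
EDGES = {
    "tissue damage in foot": [("pain", 0.9), ("foot", 0.5)],
    "foot": [("walking", 0.4)],
    "pain": [("verbal report", 0.8), ("bad", 0.6)],
}


def spread(edges, source, steps=3):
    """Spread activation outward from `source` for a fixed number of steps."""
    total = defaultdict(float)
    total[source] = 1.0
    frontier = {source: 1.0}
    for _ in range(steps):
        nxt = defaultdict(float)
        for node, act in frontier.items():
            for target, weight in edges.get(node, []):
                nxt[target] += act * weight
        for node, act in nxt.items():
            total[node] += act  # accumulate activation received at this step
        frontier = nxt
    return dict(total)


def report(activation, threshold=0.5):
    """Emit a verbal report only if the report node is active enough."""
    if activation.get("verbal report", 0.0) >= threshold:
        return "I'm in pain!"
    return ""


def relabel(edges, old, new):
    """Rename one node everywhere; the network's dynamics are unchanged."""
    def rename(node):
        return new if node == old else node
    return {rename(src): [(rename(t), w) for t, w in outs]
            for src, outs in edges.items()}


original = spread(EDGES, "tissue damage in foot")
renamed = spread(relabel(EDGES, "pain", "Node #24819"), "tissue damage in foot")
print(report(original))  # I'm in pain!
print(report(renamed))   # I'm in pain! (the label "pain" did no work)
```

Whatever meaning the middle node has comes from what it is wired to and what it triggers, which is why the label alone can't settle whether it is pain.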

In addition to producing a verbal output and activating associations in his brain, Bob also reacts to the tack by being motivated to pull the tack out of his foot and rub his injured skin. Is this reaction what pain is? Again, it's not the whole story. We can imagine building a simple machine that pulls tacks out of its feet and rubs them.

We can go down the list of all the various things that Bob's brain and body do in response to the tack in Bob's foot. Each one of them, when viewed in isolation, doesn't seem to capture all of what pain is. Even global broadcasting of a non-verbal "this is painful" message throughout Bob's brain only acquires meaning because of the reactions that are triggered by receiving brain processes.

It doesn't work to characterize pain in terms of a single, simple component, such as transmission of pain signals up the spinal cord, stress responses, avoidance behavior, verbalization of pain, facial expressions, or even more "internal" functional processes within the central nervous system considered in isolation. On the other hand, each of these components adds context. These components are like brush strokes in a painting. A single brush stroke isn't a painting, but each brush stroke adds context to the final picture.

Prototypical human pain is a very complex process, but we could see shades of pain in simpler computational agents, who may possess some but not all of the components that comprise prototypical pain in humans. In a similar way, we can still see a painting even if it has fewer and less varied brush strokes than those that are present in Vincent van Gogh's The Starry Night. There's no clear boundary where "painting" ends and "just a few brush strokes" begins, and likewise there's no clear cutoff where "pain" ends and "simple, automatic avoidance behavior" begins.

I'm sometimes asked if it's possible to have pain without reinforcement learning; if you can't update your behavior in the future, what's the point of pain? I think "pain" designates a lot of components, and learning is just one of them. Pain involves cognitive representations that something "bad" is happening. These representations are distributed throughout the cognitive system and trigger various follow-on consequences, such as withdrawal, motivation to continue avoiding the harmful stimulus, full direction of attention to the problem, planning how to best respond to the state of emergency, saying "This hurts!", and so on. None of these responses necessarily involves long-term updates to synaptic weights ("learning"), yet I think these responses already constitute pain to some degree. I think learning to avoid bad situations is just one of the many possible follow-on consequences of broadcasting a pain signal in a cognitive system.

Analogy: What is a clock?

What I've described in the above section is not specific to pain, or even to mental states of any kind. These are generic ideas that apply to most instances of describing a concept. For example, suppose you visit a kindergarten classroom, and a student says "This room has a clock in it." You ask "What is a clock?" The student might reply: "I don't know how to describe it, but a clock is that round thing hanging up on the wall over there." The student points to the wall clock. What exactly is the student pointing at?

One answer is that a clock is a series of twelve numbers in a circle with two lines pointing at them. But this isn't the full story, because we could lay down twelve numbers in a circle and point two twigs at them without this helping us to tell time.

Another answer could be that a clock is something that rotates at a constant speed. But in this case, a wheel could be a clock. While a rotating wheel could help us measure the passage of time, such a wheel is not a prototypical example of a clock.

As with pain, we can see that a prototypical clock is a complex concept that involves many parts. Any single part in isolation is not a paradigm case of a clock (though isolated parts might have some degree of clock-ness to them, like a spinning wheel that could theoretically record the passage of time, or a picture of a clock drawn on paper). Clocks, like pain, can also come in different forms, such as digital clocks, atomic clocks, and so on.

Why is pain so painful?

Richard Dawkins mentions a common thought:

It is an interesting question, incidentally, why pain has to be so damned painful. Why not equip the brain with the equivalent of a little red flag, painlessly raised to warn, "Don't do that again"?

The answer is actually suggested by the analogy with a little red flag. When you see small warnings on your computer, do you always attend to them? And do they produce significant shifts in your behavior in the future? Typically, no. Little red flags are all too easy to ignore or close without being given much thought. And if you do have strong motivation to attend to the red flags—sacrificing other opportunities in order to do so—then there's at least some sense in which we can consider you to be having an intense experience. And since that experience is of something bad, we could call it "intense pain". "Pain commanding your attention" is (part of) what it means for pain to be strong.

In addition, stronger pain experiences produce bigger impacts on the brain, in terms of physiological arousal, reinforcement, memory associations, and so on. Insofar as these functions also partly constitute what "pain" is, pain is necessarily intense when there are big changes to these systems in response to a harmful stimulus. Big changes to behavior and brain states are part of a functionalist definition of intense pain.

If an artificial agent didn't need to respond to a harmful stimulus in the short run but only needed to update its learning and memory functions, maybe some of the badness of pain could be averted. But these updates might still count as somewhat painful, and the agent would still acquire "traumatic" memories that would produce suffering when remembered later on.

Minsky (2006, p. 75) makes a point similar to the one I've made in this section. He first poses a hypothetical question: "I agree that pain can lead to many kinds of changes in a person's mind, but that doesn't explain how suffering feels. Why can't all that machinery work without making people feel so bad?" Minsky then offers his answer: "It seems to me that when people talk about 'feeling bad' they are referring to the disruption of their other goals, and to the various conditions that result from this. Pain would not serve the functions for which it evolved if it allowed us to keep pursuing our usual goals while our bodies were being destroyed."

Perhaps there remains some mystery as to why attending to pain can't feel similar to, e.g., having an obsession with solving a problem without feeling negatively about one's current state. Obsessions can also disrupt one's current goals. Maybe the answer is that if one doesn't feel negatively about escaping pain, then there will be less motivation to avoid future potentially painful situations. When facing a possible future pain, one might say: "Ho hum, I guess another obsessive attempt to escape harm is coming up. This is fine." But would it be possible to make the in-the-moment harm-escaping process merely feel obsessive rather than painful, while still creating strong motivation to avoid future need-to-escape-from-harm situations?

The hard problem as ineffability

This essay has emphasized the importance of consciousness as doing. I think this viewpoint can help make sense of intuitions about the hard problem of consciousness and related puzzles.

In a lecture with Daniel Dennett, Daniel Hutto quotes a passage from Alice's Adventures in Wonderland, Ch. 3:

'What IS a Caucus-race?' said Alice; not that she wanted much to know, but the Dodo had paused as if it thought that SOMEBODY ought to speak, and no one else seemed inclined to say anything.

'Why,' said the Dodo, 'the best way to explain it is to do it.'

Hutto notes that we can see related ideas in Nike's "Just do it" slogan. Or, I would add, in the Confucius quote: "I hear and I forget. I see and I remember. I do and I understand." There are lots of intuitive, ineffable qualities of something that you gather when you experience it as compared with just reading about it. This makes sense, because our brains and bodies have many responses to their environments, not all of which can be simulated just by imagination.

Joe Cruz makes roughly the same point:

Words aren't the same as the sensations that you feel. No description of the sensations you feel are going to be adequate to it. So why should it be a surprise that my understanding of you in words is going to fall short of your understanding of you?

Now, we can ask: Do the computations of physics contain all phenomenal truths? Or are there phenomenal truths not fully deducible from physics (combined perhaps with indexical information about where one is within physics)? This is a question that David J. Chalmers and Frank Jackson pursue in "Conceptual Analysis and Reductive Explanation".

I think that what we have in mind when we talk about "phenomenal truths" is different from the kind of logical ideas that philosophers are accustomed to debating. Phenomenal experience involves multitudinous somatic and neural responses that are part of being an agent in an environment, most of which are not amenable to description in words. This is why I often talk about consciousness as a kind of poetry—we're intending to point at something deeper than words can explain. We're referring to the process of being in the world and doing all the operations that consciousness constitutes.

We can take another example made famous by Frank Jackson: Mary's room. Mary can know at a descriptive level exactly what happens in the brain when it sees color, but she hasn't experienced color. Asking whether there's "knowledge" that Mary lacks is the wrong way to think about the question. It's just that the cells that compose Mary have not engaged in processing that directly implements color vision. This is completely non-mysterious. My computer can store a text file that contains a Python program without ever having run that program. A human can answer all kinds of questions about that Python program but can't "experience" it because a person doesn't have the hardware to execute a Python program in his brain—at least not the same kind of hardware as a digital computer does. (My point here resembles Earl Conee's "acquaintance hypothesis" response to the Mary thought experiment.)

So it is with consciousness in general. It feels like there's something missing from reductive explanations because we talk about those in terms of words and symbols, which yield different responses from our bodies and brains than if we actually implemented those algorithms. The only way to completely "understand" an algorithm is to be that algorithm. That said, we can make approximations, especially for other minds (like higher animals) that are similar enough to our own that we can roughly simulate parts of their experiences inside our heads. Presumably this is one reason we have more intuition that other humans are conscious than that, say, our laptops are conscious.

As Abraham Maslow said, "I suppose it is tempting, if the only tool you have is a hammer, to treat everything as if it were a nail." Likewise, if you're an analytic philosopher, it's tempting to treat everything as a propositional statement. The existence of consciousness must be a "truth", so say these philosophers, and there must be some "knowledge" that Mary learns upon seeing red. By thinking this way, one gets into puzzles about how physical truths can account for phenomenal truths. I suggest that we instead experience computations/consciousness for what they are: things that we do and that constitute us. This approach is closer to spirituality or poetry than philosophy, but that's fine. Humans have different ways of understanding reality, and verbal logical statements are not the best tool in all cases. Of course, I don't mean to suggest that consciousness actually is mysterious or illogical at bottom. Our brains can probably be simulated with the logical operations of a digital computer and at the very least must be simulated by the mathematical equations of physics. Rather, I mean that we can see the world from many perspectives, and when thinking about ethics or "what is it like" to have experiences, a spiritual perspective of being a computation in the world along with the rest of the computations of the world may be more helpful than tying ourselves in knots over how to shoehorn a first-person experience into third-person vocabulary.

Note that this proposal can be seen as eliminativist about phenomenal consciousness. Humans make a cognitive error in thinking that consciousness is a concrete entity that we need to account for theoretically. Rather, things just happen, and our brains try to make sense of it by inventing a node labeled "consciousness" that gets activated when we reflect on our subjective experiences, in the same way that someone's brain may activate a node labeled "God" when he reflects on the origin of the universe.

The "knowledge argument" is imprecise

Jackson's knowledge argument is quite bad as far as arguments against physicalism go. It derives its force from equivocation about the definition of "knowledge". What does it mean for Mary to "learn something new"? Once we've defined precisely what that means, the situation becomes trivial.

For example, suppose "knowledge of red" means having physically received photons with wavelengths predominantly around 650 nm into one's eyes and having undergone the subsequent processing in one's visual cortex. If this is our definition, then it's trivial that Mary in her black-and-white room doesn't "know" what red is, but this obviously has no more metaphysical significance than saying I haven't visited the moon. (Caveat: White light contains red wavelengths as part of it, so Mary has in fact seen red wavelengths, but they were combined with other wavelengths and so triggered different neural reactions in her than seeing purer red light would have.)

Suppose instead that "knowledge of red" means Mary can predict how she would react to seeing red, what she would say, etc. If Mary had huge amounts of computing power and access to her brain's physical configuration, she could have, in the black-and-white room, simulated her brain in enough detail to read off from her simulation the answers to how she would respond to seeing red light. In this case, she would have "knowledge" of red.

In general, if we pick any definition of "knowledge" precise enough for us to understand what it means, then it becomes trivial to say whether Mary has knowledge of red or not. Jackson's argument essentially rests on equivocation between the definition of knowledge as what one can read in textbooks vs. the definition of knowledge as directly undergoing some brain-state transformation.

If "knowledge" is taken as something beyond the physical and hence not definable in terms of physical states, that's fine, but then Jackson's rebuttal to physicalism falls on deaf ears, since the whole argument requires rejecting physicalism to get started.

Related discussions

- "Graded sentience and value pluralism"

- "Consciousness and power"

- "Power vs. equality weighting"

- "Empathy vs. aesthetics"

Appendix: Diagramming reportability

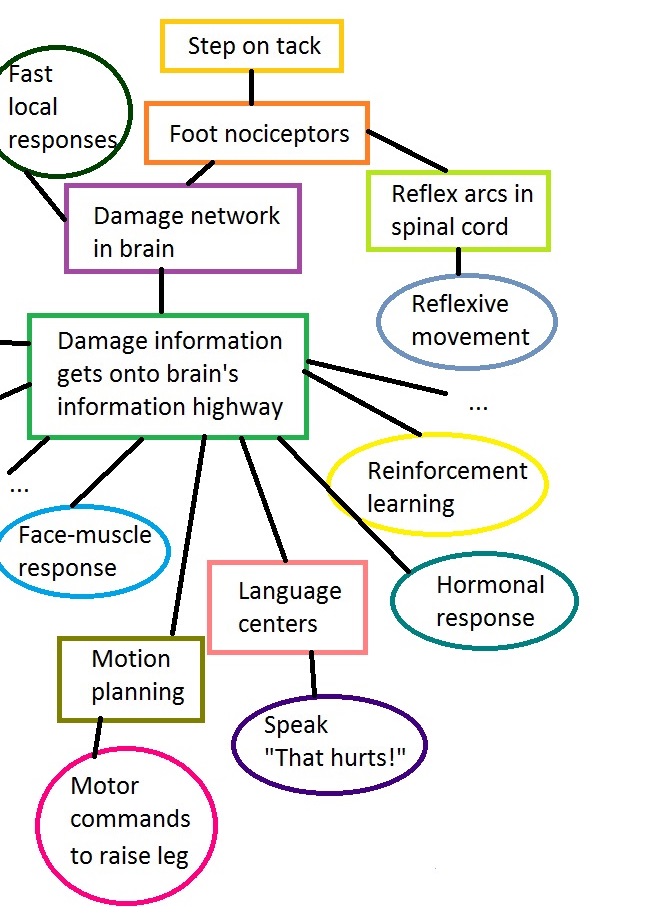

The diagram above shows a rough conceptual sketch of how your nervous system responds to stepping on the tack as discussed in the text. This is obviously oversimplified, ignores many cross and backward connections, and might not be exactly correct, but what matters is the general idea, not the exact details. (Update: For a more accurate picture of the flow of information in the nervous system starting from input stimuli, see Fig. 4.2 of Rolls (2008).)

The project of finding the minimal set of neural correlates of consciousness consists in cutting this tree along the latest (i.e., closest to the bottom in the above figure) possible branch(es) such that reportability is abolished. With a very narrow view of reportability, this is easy: Just cut out mouth muscles. If the person could write instead, then cut out the whole language center. If the person could make information-carrying limb movements, cut out motor control. If the person could still produce deliberate thought patterns that an fMRI scan can read, then cut out the ability to control the direction of one's thoughts. And so on. (Note that the ability to control one's thoughts may not be all we ordinarily think of as consciousness. For instance, dreams are conscious, yet rarely do you have control over where the dream goes.)
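The cutting procedure can be sketched as a reachability check on a directed graph. Everything here is a hypothetical simplification of the tack example: the node names, the choice of which outputs count as "reports", and the graph's shape are assumptions for illustration, and real nervous systems have many cross and backward connections that this ignores. Removing nodes and asking whether any reporting output is still reachable from the stimulus mirrors the search for a cut that abolishes reportability, while letting us check which non-reporting outputs survive.

```python
from collections import deque

# Hypothetical, heavily simplified response graph for the tack example.
# Node names are illustrative; real nervous systems also have many cross
# and backward connections that this ignores.
GRAPH = {
    "nociceptors": ["spinal cord"],
    "spinal cord": ["withdrawal reflex", "cortical hub"],
    "cortical hub": ["language center", "motor control", "thought control", "memory"],
    "language center": ["mouth muscles", "writing"],
    "motor control": ["signaling limb movements"],
    "thought control": ["fMRI-detectable thoughts"],
}
# Outputs an experimenter could use as a "report" (an assumption of this sketch).
REPORT_OUTPUTS = {"mouth muscles", "writing", "signaling limb movements",
                  "fMRI-detectable thoughts"}


def reachable(graph, start, removed=frozenset()):
    """Breadth-first search from `start`, treating `removed` nodes as cut out."""
    if start in removed:
        return set()
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen and nxt not in removed:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def reportable(graph, removed=frozenset()):
    """True if any reporting output is still reachable from the stimulus."""
    return bool(reachable(graph, "nociceptors", removed) & REPORT_OUTPUTS)


cuts = [
    {"mouth muscles"},
    {"language center"},
    {"language center", "motor control"},
    {"language center", "motor control", "thought control"},
    {"cortical hub"},
]
for cut in cuts:
    intact = reachable(GRAPH, "nociceptors", cut)
    print(sorted(cut), "reportable:", reportable(GRAPH, cut),
          "withdrawal intact:", "withdrawal reflex" in intact)
```

In this toy graph, the first three cuts each leave some other reporting route open; only removing thought control as well, or cutting the cortical hub, abolishes reportability. The withdrawal reflex survives every cut.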

Studies that show "consciousness is not required for ability X" are basically cutting off branches that lead to reportability outputs while keeping intact branches that lead to other outputs. They seem weird because normally the whole tree is present together. But the boundaries of reportability are ultimately arbitrary, and many parts of the tree seem like they could be morally relevant. We privilege certain of our brain processes because those are the ones we can introspect on and that we use to carry out deliberative plans in the world. But are those all that matter? Or do those that work behind the scenes without stealing the show also count?

Note that reportability bias applies not just to third-person observers but also to our introspection. A blindsight patient feels he isn't visually conscious of the obstacles that his body is avoiding because the inputs processed in certain non-damaged visual parts of his brain aren't being sent (or at least not with enough strength) out to the other parts of his brain that are doing the higher-level thinking, talking, and remembering. For brain regions, it's all about whom you know, and in blindsight, the remaining visual neurons don't have a way in to the Big Boy network of executive control, speech, etc. They do their job locally, but they don't have the connections to get recognition or hobnob with the other brain functions. They might send out small newsletters, but they don't have wide readership. Therefore, when a blindsight patient thinks to himself, "Can I consciously see an object off to the side?" various higher-level thought regions query themselves:

Did I read any updates about an object in front of me? Hmm, I read a lot of the big publications, but I didn't see a story about that. My friends say they didn't hear any such story either. Guess I don't know anything about it. Secretary Speech Center, tell the experimenter that I'm not visually conscious of that object, will you?

Of course, I'm anthropomorphizing here, but I think drawing an analogy between neural and social networks can be helpful because we have better intuition for the latter. Our cognitive/executive centers are not homunculi with all the abilities of a full human, but they do implement some of the functionality of a full human, enough that this analogy is meaningful. Our full conscious experience is built from lots of little pieces like this working together. The cognitive/executive centers themselves could be decomposed into social networks of many individuals.

The cognitive functions that scientists use to assess consciousness seem to depend on global broadcasting. For instance:

- verbal report requires sending information from stimulus-reception regions, through processing regions, through cortical hubs, to verbal regions

- memory requires sending information in the same way, except that instead of going to verbal centers, it goes to memory centers

- controlling the direction of one's thoughts (e.g., to be observed using fMRI) apparently requires cortical broadcasting as well

But it's not that global broadcasting is somehow specially related to consciousness; it's just that cortical broadcasting seems important for most of the objective measures of consciousness that we can study. Some presentations of global-workspace theory may confuse this point. As an analogy, I could develop a Highway Theory of Interstate Car Travel according to which interstate travel by car is driving on the highway. Well, it's true you need to drive on the highway to do interstate car travel, but there's more to the travel process. You also need to pack your bags, start your car, leave your driveway, use local roads, find your destination, and so on. That a car is driving for a sufficiently long time on a highway may be a necessary and sufficient condition for most interstate car travel, but this doesn't mean travel is only highway driving. And it doesn't mean that driving just to your local coffee shop isn't a form of travel in its own right, even if it's not interstate travel.

I agree with Susan Blackmore's critique of the "neural correlates of consciousness" (NCCs) program:

A popular method is to use binocular rivalry or ambiguous figures which can be seen in either of two incompatible ways, such as a Necker cube that flips between two orientations. To find the NCCs you find out which version is being consciously perceived as the perception flips from one to the other and then correlate that with what is happening in the visual system. The problem is that the person has to tell you in words 'Now I am conscious of this', or 'Now I'm now conscious of that'. They might instead press a lever or button, and other animals can do this too, but in every case you are measuring physical responses.

Is this capturing something called consciousness? Will it help us solve the mystery? No.

This method is really no different from any other correlational studies of brain function, such as correlating activity in the fusiform face area with seeing faces, or prefrontal cortex with certain kinds of decision-making. It correlates one type of physical measure with another. This is not useless research. It is very interesting to know, for example, where in the visual system neural activity changes when the reported visual experience flips. But discovering this does not tell us that this neural activity is the generator of something special called 'consciousness' or 'subjective experience' while everything else going on in the brain is 'unconscious'.

[...] All we will ever find is the neural correlates of thoughts, perceptions, memories and the verbal and attentional processes that lead us to think we are conscious.

LeDoux and Brown (2017) defend a Higher-Order Theory of Emotional Consciousness, which identifies consciousness as arising from certain higher-order representations within "cortically based general networks of cognition". The authors seek to explain "consciousness as a subjective experience", but like most neuroscientists, they fall back on verbal reports as the marker of consciousness: "Essential to researching consciousness as subjective experience is some means of measuring internal states that cannot be observed by the scientist. The most common method is the use of verbal self-report [...]. This allows researchers to distinguish conditions under which one is able to state when they experience a sensory event from when they do not." But this is the bait-and-switch that Blackmore warned about. All that LeDoux and Brown (2017) are really doing is giving a sketch of a brain architecture that gives rise to such-and-such verbal reports, and they (in my opinion, arbitrarily) identify roughly the "top" level of that architecture as "conscious", while the lower-order representations are "unconscious".

While scientists believe they're talking about "theories of consciousness", they're actually debating "definitions of consciousness". It doesn't feel that way because of the implicitly dualist notion that there is something special that we're trying to locate. But in reality, we're just pointing to different cognitive systems that have different capabilities and functions.

Acknowledgments

A discussion with Sören Mindermann and Lukas Gloor inspired the section of this piece titled "The 'knowledge argument' is imprecise".