Summary

The difference between non-player characters (NPCs)[a] in video games and animals in real life is a matter of degree rather than kind. NPCs and animals are both fundamentally agents that emerge from a complicated collection of simple physical operations, and the main distinction between NPCs and animals is one of cognitive and affective complexity. Thus, if we care a lot about animals, we may care a tiny bit about game NPCs, at least the more elaborate versions. I think even present-day NPCs collectively have some ethical significance, though they don't rank near the top of ethical issues in our current world. However, as the sophistication and number of NPCs grow, our ethical obligations toward video-game characters may become an urgent moral topic.

Note: This piece isn't intended to argue that video-game characters necessarily warrant more moral concern than other computer programs or even other non-NPC elements of video games. Rather, my aim is merely to explore the general idea of seeing trivial amounts of sentience in simple systems by focusing on game NPCs as a fun and familiar example. For discussion of the idea of sentience in software more generally, see "What Are Suffering Subroutines?"

Other versions of this essay:

- Audio podcast (.mp3)

Contents

- Summary

- Introduction

- Fiction vs. reality

- Goal-directed behavior and sentience

- Consciousness and power

- Dennett's stance levels

- State machines and emotion

- Mysterious complexity

- Appearance vs. implementation

- Why visual display might matter

- How important are NPCs?

- Video games of the future

- What about enemy aggression?

- Do player characters matter?

- Comparison with biocentrism

- Does NPC suffering aggregate?

- Individuation vs. entitativity

- Sameness and empathy

- Empathy vs. aesthetics

- What is it like to be an NPC?

- Appendix: Evolution of my views on this topic

- Optional appendix: My personal history with video games

- Acknowledgments

- Feedback

- Footnotes

Introduction

Video games raise a number of ethical issues for society. Probably the most fiercely debated is whether gory video games affect proclivities for real-world violence. Some studies find no causal relationship between video games and violence, while other studies, including experimental manipulations, do suggest some causal pathway toward real-world aggression (see Wikipedia's article for more). My subjective experience jibes with the "desensitization" hypothesis, though some will probably argue in the reverse direction, that video games allow for releasing aggression in a harmless way.

Another ethical issue sometimes raised is the addictive potential of video games, which can decrease school or job performance, impair relationships, and so on. Some also fear that video games are more mind-numbing than books and will inhibit the intellectual growth of youth. Others point out that video games are a form of art that can be quite complex and challenging, and MMORPGs like World of Warcraft may even teach players planning, teamwork, and leadership.

These topics are worth exploring, but they're not my focus here. In this piece, I ask a less common question: Do the characters in video games matter ethically, not just for instrumental reasons but for their own sakes? I don't know if I've ever heard this question asked before, at least not with respect to present-day video games. Most people would brush it off as ridiculous. But I think the answer is not so obvious on further examination.

Fiction vs. reality

Children learn to separate fiction from reality between roughly ages 3 and 5. Context helps determine the distinction. As an example, in Mister Rogers' Neighborhood, the show's real characters lived in the outside world, while the fictional characters lived in an explicitly separate "Neighborhood of Make-Believe." Children learn that their families, friends, national leaders, and science facts are part of reality, while fiction books, movies, and video games are fictional. It's okay if fictional characters get hurt, because they don't actually exist. When a child sees a character in a movie fall injured, a parent can console the child by explaining that the people on screen are just actors, and the blood is not real. Likewise, it seems obvious that the violence in video games is not real and hence doesn't matter unless it leads to antisocial behavior in the actual world.

But what is "reality" anyway? Our real world, of cars and families and love and future plans, is a complex arrangement of tiny physical particles into higher and higher levels of abstraction: atoms to molecules to proteins to cells to organs to people, which interact in societies. We are extremely elaborate machines built from masses of dumb, elementary physical operations that are put together in ways that form something beautifully dynamic and meaningful. "Reality" consists of all the higher-level abstractions that we use to make sense of these complicated particle movements. These abstractions are what matter to us and determine whether we're happy or depressed, hopeful or afraid. Some neuroscience research by Anna Abraham and D. Yves von Cramon suggests that our sense of what's real is determined by its relevance and degree of personal connection to us.

Now consider an elaborate, intelligent video-game character. It, too, is built in layers of abstraction from tiny physical stuff: electrons and atoms, then transistors, then logic gates, then computer hardware. That hardware runs binary code, which is created from higher-level code, which is itself organized in a modular fashion: individual statements, functions, classes, and software packages. All of this runs on computers that can interact in computer networks.

We could say, "The computer character isn't 'real' because it's just a collection of dumb physical operations that are each extremely simple and unfeeling." But we too are built from dumb, "unfeeling" physical operations. The transistors that comprise the video-game character's "brain" are just as "real" as the neurons that comprise yours; in both cases, we can even point to the clumps of matter that are involved.

"Ok," one might acknowledge. "The character's brain is as real as mine, but its world is fake. I'm interacting with real physics, while this character is interacting with fantasy physics." But is there really such a big distinction? The trees, rocks, and walls in your world are clusters of fundamental particles behaving in sophisticated aggregate ways. For instance, the atoms in your chair are acting so as to hold themselves together. The video-game character also interfaces with objects (atoms and electrons in transistors, for example) that are made of fundamental physical particles acting in particular ways that define their behavior. The behavior of those particles is different between the cases, but the kind of thing going on is similar.

If it helps, we could imagine the dungeon walls of a Legend of Zelda game as actual physical walls that the atoms defining the protagonist, Link, bump up against when he tries to move into them. These walls in the computer are not spatially arranged in the same configuration as the walls in the game, but they are logically arranged in the game's configuration, according to a complex mathematical mapping from atoms/electrons to virtual walls. (What is the mapping? It's defined by the game's code.) In a similar fashion, the building walls that we experience are themselves complicated mappings of the basic elements of reality. We don't experience decoherence as we split into the many worlds of quantum mechanics, nor do we experience the seven extra dimensions of M-theory beyond the three spatial dimensions and one temporal dimension. As Donald D. Hoffman notes in "The Interface Theory of Perception": "Just as the icons of a PC's interface hide the complexity of the computer, so our perceptions usefully hide the complexity of the world, and guide adaptive behavior." The game character's perceptions likewise interface appropriately with the logical boundaries of its world.
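To make the idea of a "logical arrangement" concrete, here is a minimal Python sketch. It assumes a hypothetical tile-map representation and is not Zelda's actual code. The walls exist only as characters stored in memory, and the movement rule is what turns that stored data into something the character cannot pass through.

```python
# Hypothetical dungeon: '#' is a wall, '.' is floor. Physically, these are just
# bit patterns in memory; the code below is the "mapping" that makes them walls.
DUNGEON = [
    "#######",
    "#.....#",
    "#..#..#",
    "#.....#",
    "#######",
]

def is_wall(x: int, y: int) -> bool:
    """True if the tile at column x, row y is a wall."""
    return DUNGEON[y][x] == "#"

def try_move(pos: tuple[int, int], dx: int, dy: int) -> tuple[int, int]:
    """Move one tile, unless the destination is a wall, in which case stay put."""
    x, y = pos
    nx, ny = x + dx, y + dy
    if is_wall(nx, ny):
        return pos  # the character "bumps up against" the wall
    return (nx, ny)

link = (1, 1)
link = try_move(link, 0, -1)  # wall above: position unchanged
link = try_move(link, 1, 0)   # open floor: moves right to (2, 1)
```

In this sketch, nothing in memory is shaped like a wall. The wall-ness lives entirely in how `try_move` interprets the stored data.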

"Real" entities are patterns that emerge from low-level physical particle interactions, and meaningful patterns can be seen in changes of currents and voltages inside microprocessors as well as in configurations of biomolecules inside organisms.

This discussion delves deeper into my main point in this section.

Goal-directed behavior and sentience

If video games can be seen as "real" in a similar way as our own world, what distinguishes video-game characters from real people and animals? I think it comes down to differences in complexity, especially with regard to specific algorithms that we associate with "sentience." As I've argued elsewhere, sentience is not a binary property but can be seen with varying degrees of clarity in a variety of systems. We can interpret video-game characters as having the barest rudiments of consciousness, such as when they reflect on their own state variables ("self-awareness"), report on state variables to make decisions in other parts of their program ("information broadcasting"), and select among possible actions to best achieve a goal ("imagination, planning, and decision making"). Granted, these procedures are vastly simpler than what happens in animals, but a faint outline is there. If human sentience is a boulder, present-day video-game characters might be a grain of sand.

Digital agents using biologically plausible cognitive algorithms seem most likely to warrant ethical consideration. This is especially true if they use reinforcement learning, have a way of representing positive and negative valence for different experiences, and broadcast this information in a manner that unifies different parts of their brains into a conscious collective. Yet, I find it plausible that other attributes of an organism matter at least a little bit as well, such as engaging in apparently goal-directed behavior, having a metric for "betterness vs. worseness" of its condition, and executing complex operations in response to environmental situations. Many NPCs in video games have some of these attributes, at least to a vanishing degree, even if most (thankfully) don't yet have frameworks for reinforcement learning or sophisticated emotion.

Many NPCs exhibit goal-directed behavior, even if that just means implementing a pathfinding algorithm or choosing in which direction to face in order to confront the game's protagonist.
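As an illustration of how little machinery this kind of goal-directed movement needs, here is a minimal breadth-first-search pathfinder on a tile grid. BFS is a generic textbook technique chosen here for brevity. Many games instead use A* or navigation meshes, and the arena below is made up.

```python
from collections import deque

def find_path(grid, start, goal):
    """Breadth-first search over a grid of strings ('#' = wall).

    Returns the list of (x, y) tiles from start to goal, or None if the goal
    cannot be reached.
    """
    came_from = {start: None}  # also serves as the visited set
    frontier = deque([start])
    while frontier:
        current = frontier.popleft()
        if current == goal:
            # Walk the back-pointers to reconstruct the route.
            route = []
            while current is not None:
                route.append(current)
                current = came_from[current]
            return route[::-1]
        x, y = current
        for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= ny < len(grid) and 0 <= nx < len(grid[ny])
                    and grid[ny][nx] != "#" and (nx, ny) not in came_from):
                came_from[(nx, ny)] = current
                frontier.append((nx, ny))
    return None

ARENA = [
    "#####",
    "#...#",
    "#.#.#",
    "#...#",
    "#####",
]
route = find_path(ARENA, (1, 1), (3, 3))  # a shortest route: 5 tiles, 4 moves
```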

Consider an example from a real video game: Doom 3 BFG edition (source code). In the file AI.cpp, we can see implementations of how AIs can KickObstacles, FaceEnemy, MoveToEnemyHeight, MoveToAttackPosition, etc. Of course, these functions are extremely simple compared with what happens if a real animal is engaged in an action. But the distinction is one of complexity, of degree rather than kind. Real animals' actions to kick obstacles, face enemies, etc. involve many more steps, body parts, and high-level systems, but at bottom they're built from the same kinds of components as we see here.
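For a sense of scale, here is a hypothetical Python analogue of a "face the enemy" routine. It is not the Doom 3 code, which is C++ and handles much more engine bookkeeping. It shows only the core idea: turning toward a target by a bounded amount each tick. The function name `face_enemy` and the `max_turn_deg` parameter are assumptions for illustration.

```python
import math

def face_enemy(my_pos, my_yaw_deg, enemy_pos, max_turn_deg=10.0):
    """Rotate toward the enemy by at most max_turn_deg degrees per tick.

    Positions are (x, y) pairs; yaw is in degrees (0 = +x axis, 90 = +y axis).
    Returns the new yaw, normalized to [-180, 180).
    """
    dx = enemy_pos[0] - my_pos[0]
    dy = enemy_pos[1] - my_pos[1]
    desired = math.degrees(math.atan2(dy, dx))
    # Smallest signed angle from the current yaw to the desired yaw.
    delta = (desired - my_yaw_deg + 180.0) % 360.0 - 180.0
    delta = max(-max_turn_deg, min(max_turn_deg, delta))  # limited turn speed
    return (my_yaw_deg + delta + 180.0) % 360.0 - 180.0

yaw = 0.0
for _ in range(3):
    yaw = face_enemy((0, 0), yaw, (0, 5))  # enemy lies along the +y axis
```

After three ticks the character has turned about 30 degrees of the 90 it needs. A real animal turning its head involves vastly more machinery, but the same "reduce the mismatch between where I face and where the threat is" structure is present.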

Especially in RPGs, some NPCs have explicit representations of their "welfare level" in the form of hit points (HP), and the NPCs implement at least crude rule-based actions aiming to preserve their HP. In some turn-based RPGs like Super Mario RPG or Pokémon, an NPC may even choose an action whose sole purpose is to bolster its defenses against damage in subsequent rounds of the battle. The extent of damage may affect action selection. For example, in Revenge of the Titans (source code), drones select a building to target based on a rating formula that incorporates HP damage:

rating = cost * (damage / newTarget.getMaxHitPoints()) * factor * distanceModifier;
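Here is a minimal Python sketch of how a rating like this could drive target selection. Only the multiplicative shape of the formula comes from the quoted line. The meanings assumed here are guesses for illustration, not the game's actual semantics:

- `cost` as the building's value
- `damage` as the damage the drone's attack would deal
- `factor` as a per-building priority weight
- `distance_modifier` as a term that favors nearer buildings

```python
from dataclasses import dataclass

@dataclass
class Building:
    name: str
    cost: float            # assumed: how valuable the building is
    max_hit_points: float
    distance: float        # assumed: distance from the drone
    factor: float = 1.0    # assumed: per-building priority weight

def rating(b: Building, damage: float) -> float:
    # Hypothetical distance modifier: nearer targets score higher.
    distance_modifier = 1.0 / (1.0 + b.distance)
    # Same shape as the quoted line: damage as a fraction of max HP.
    return b.cost * (damage / b.max_hit_points) * b.factor * distance_modifier

def choose_target(buildings, damage):
    """Pick the building with the highest rating."""
    return max(buildings, key=lambda b: rating(b, damage))

buildings = [
    Building("refinery", cost=100, max_hit_points=50, distance=9),
    Building("turret", cost=40, max_hit_points=10, distance=3),
]
target = choose_target(buildings, damage=5)  # refinery rates 1.0, turret 5.0
```

Under these made-up numbers, the drone prefers the cheap, fragile, nearby turret, because the same hit destroys a larger fraction of its hit points.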

Even NPCs without explicit HP levels have an implicit degree of welfare, such as a binary flag for whether they've been killed. NPCs that require multiple strikes to be slain—for instance, a boss who needs to be struck with a sword three times to die—carry HP state information not exposed to the user. They also display scripted aversive reactions in response to damage.
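A hypothetical boss of this kind can be sketched in a few lines of Python. The hidden counter plays the role of unexposed HP, and the returned strings stand in for the scripted animations a real game would trigger. All names are illustrative.

```python
class Boss:
    """A boss that dies on the third strike; its hit points are never shown."""

    def __init__(self, hit_points: int = 3):
        self._hit_points = hit_points  # hidden "welfare" state
        self.alive = True              # the binary flag even simple NPCs carry

    def take_hit(self) -> str:
        """Apply one sword strike and return the scripted reaction."""
        if not self.alive:
            return ""
        self._hit_points -= 1
        if self._hit_points <= 0:
            self.alive = False
            return "death animation"
        return "flinch and roar"  # scripted aversive reaction

boss = Boss()
reactions = [boss.take_hit() for _ in range(3)]
```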

And maybe representations of valuation could be seen more abstractly than in an explicit number like HP. In animal brains, values seem to be encoded by firing patterns of output nodes of certain neural networks. Why couldn't we also say that the patterns of state variables in an NPC encode its valuation? Animal stimulus valuation exists because of the flow-on effects that such valuation operations have on other parts of the brain. So why not regard variables or algorithms that trigger flow-on effects in NPCs as being a kind of at least implicit valuation?

Consciousness and power

A recurrent criticism of this piece is the observation that NPCs don't have sophisticated self-monitoring functionality of the type that would give them truly "conscious" experience rather than going through life "in the dark." I acknowledge that this complaint is relevant, and maybe I would agree that upon further examination, NPCs don't matter very much because their self-reflection is often not well refined. But we should think about how robust our view is that self-monitoring specifically is the crucial feature of emotional experience.

In "Feelings Integrate the Central Representation of Appraisal-driven Response Organization in Emotion," Klaus R. Scherer suggests five components of emotion (the following table is copied from Table 9.1, p. 138 of the text):

CNS = central nervous system; NES = neuro-endocrine system; ANS = autonomic nervous system; SNS = somatic nervous system

| Emotion function | Organismic subsystem and major substrata | Emotion component |
| --- | --- | --- |
| Evaluation of objects and events | Information processing (CNS) | Cognitive component |
| System regulation | Support (CNS; NES; ANS) | Neurophysiological component |
| Preparation and direction of action | Executive (CNS) | Motivational component |
| Communication of reaction and behavioral intention | Action (SNS) | Motor expression component |
| Monitoring of internal state and organism-environment interaction | Monitor (CNS) | Subjective feeling component |

We can see that emotion is a complex process with many parts at play. Why would we then privilege just the subjective-feeling component as the only one that we think matters? One reason might be that it's potentially the most central feature of the system. In Scherer's view, the monitoring process helps coordinate and organize the other systems. But then privileging it seems akin to suggesting that among a team of employees, only the leader who manages the others and watches their work has significance, and the workers themselves are irrelevant.[b] In any event, depending on how we define monitoring and coordination, these processes may happen at many levels, just like a corporate management pyramid has many layers.

Daniel Dennett's Consciousness Explained is one of the classic texts that challenges views separating "unconscious" from "conscious" processing. He says (p. 275): "I have insisted that there is no motivated way to draw a line dividing the events that are definitely 'in' consciousness from the events that stay forever 'outside' or 'beneath' consciousness."

I think the reason people place such emphasis on the monitoring component is that it's the part of the system visible to the brain centers that perform abstract analysis and linguistic cognition. When we introspect, we introspect using our self-monitoring apparatus, so naturally that's what we say matters to us. What our introspection can't see, we don't explicitly care about. But why couldn't the subsystems matter if we knew from neuroscience that they were there, even if we couldn't identify them by introspection? Intuitively we may not like this proposal, because we tend not to care about what we can't feel directly; "out of sight, out of mind." But if we take a more abstracted view of the situation, it becomes less clear why monitoring is so essential. Why couldn't we also care about a collection of workers that didn't have a manager/reporter?

I'm skeptical of higher-order theories of consciousness. I think it's plausible that people adopt these views because when they think about their emotions, of course in that moment they're having higher-order perceptions/thoughts about lower-order mental states. But that doesn't mean consciousness is always a higher-order phenomenon, even when you're not looking. To be sure, I think there is an important element of subjective experience that comes from monitoring, and metacognition probably contributes something to the texture of consciousness, but I'm doubtful about assertions that monitoring is all there is to consciousness. After all, what makes those computations special compared with others? One could say that so-called first-order perceptions are actually second-order perceptions about first-order objects in the world.

The focus on monitoring and reportability rather than on the "unconscious" subcomponents seems to be an issue of power. The parts of us that think about moral issues are disposed to care about the monitoring functionality, in much the way that the president of the United States gets all the media's attention even though his underlings do most of the work. If one asks oneself whether one is conscious of low-level processes, one thinks "no, I'm not." Yet as blindsight, subliminal stimuli, and various other "unconscious" phenomena demonstrate, processes we can't uncover via explicit introspection still have effects and implicitly matter to us.

One way in which so-called "conscious" (globally available) information is distinct from "unconscious" information is that we can hold on to the conscious information for an indefinite time, as Stanislas Dehaene explains in Consciousness and the Brain. Our minds have what Daniel Dennett calls "echo chambers" that allow contents to be thought about and re-thought about and kept around for inspection and metacognition. Unconscious information comes and goes without leaving as much of a lasting footprint. This helps explain why we can only talk about the conscious data. But does only the conscious data matter? It too will eventually fade (unless it enters long-term memory, and even then we'll eventually die). Shouldn't relevance be assessed by the degree of impact something has on the system, not the binary fact of whether it can be reported on?

Consider also a split-brain patient. When presented with an object visible only to her right hemisphere, she says she can't see it, because linguistic areas are concentrated in the left hemisphere. But she can still reach for the object with the hand that her right hemisphere controls. It seems implausible to suggest that only the left half of the brain is conscious merely because only it can make linguistic reports.

Once we can see roughly how affective subsystems are operating in computational brain models, do we feel inclined to give more power to those voiceless subcomponents or do we want to continue to favor the conscious monitoring part that our instincts incline us to care about? I don't have a settled opinion on this, but I think we shouldn't jump to the conclusion that only the monitor matters. This question can be particularly relevant in cases where the non-conscious components diverge from the conscious summary or are not reported at all. And it's also relevant for agents like NPCs that may have only rudimentary monitoring going on relative to the amount of so-called "unconscious" computation.

In any case, NPCs do have extremely crude monitors of their internal status, such as display of hit points or verbalized exclamations they make (e.g., screaming). And we can see how the other components of emotion in the above table also show themselves in barebones ways within NPCs.
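A hypothetical toy NPC can make that mapping concrete. In the Python sketch below, each method stands in, very loosely, for one row of Scherer's table:

- appraisal for the cognitive component
- internal state updates for the neurophysiological component
- action choice for the motivational component
- a scream for the motor expression component
- a status summary for the subjective-feeling component

The mapping and all names and numbers are assumptions for illustration only.

```python
class Grunt:
    """Toy NPC whose methods loosely mirror Scherer's five emotion components."""

    def __init__(self, hp: int = 10):
        self.hp = hp
        self.alarm = 0.0  # crude stand-in for physiological arousal

    def appraise(self, event: str) -> float:
        """Cognitive component: evaluate the event as bad (-1) or neutral (0)."""
        return -1.0 if event == "hit" else 0.0

    def regulate(self, valence: float) -> None:
        """Neurophysiological component: update internal state."""
        if valence < 0:
            self.hp -= 1
            self.alarm = min(1.0, self.alarm + 0.5)
        else:
            self.alarm = max(0.0, self.alarm - 0.1)

    def prepare_action(self) -> str:
        """Motivational component: decide what to do next."""
        return "flee" if self.alarm >= 1.0 else "attack"

    def express(self, valence: float) -> str:
        """Motor expression component: an outward signal of the reaction."""
        return "scream" if valence < 0 else ""

    def monitor(self) -> str:
        """Subjective-feeling component, at its crudest: a summary of own state."""
        return f"HP {self.hp}, alarm {self.alarm:.1f}"

    def react(self, event: str):
        valence = self.appraise(event)
        self.regulate(valence)
        return self.express(valence), self.prepare_action(), self.monitor()

grunt = Grunt()
first = grunt.react("hit")   # ("scream", "attack", "HP 9, alarm 0.5")
second = grunt.react("hit")  # ("scream", "flee", "HP 8, alarm 1.0")
```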

Dennett's stance levels

NPCs differ in their degree of sophistication. For example, consider the game "Squirrel Eat Squirrel" in Ch. 8 of Al Sweigart's "Making Games with Python & Pygame." The program for this game is extremely simple—just 396 lines of code, not counting what's imported from Pygame. Squirrels of various sizes walk onto the screen from various directions. If they're smaller than the protagonist squirrel, the protagonist eats them; else, they hurt the protagonist. They may occasionally change direction at random, but this is all they do. They don't plan routes, seek to avoid damage, or even try to face the protagonist. They just move randomly, and if they hit the protagonist, either they're eaten or they cause injury.
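The entire behavioral repertoire just described fits in a few lines. The sketch below is not Sweigart's code. It is a hypothetical Python restatement of the rules in the preceding paragraph: random drifting, occasional direction changes, and a size comparison on contact. The 10% chance of turning each tick is an assumption.

```python
import random

class Squirrel:
    """An NPC squirrel with no goals: it just drifts and occasionally turns."""

    def __init__(self, x, y, size, rng):
        self.x, self.y, self.size = x, y, size
        self.rng = rng
        self.dx, self.dy = rng.choice([-1, 1]), rng.choice([-1, 1])

    def update(self):
        # Assumed 10% chance per tick of picking a new random direction.
        if self.rng.random() < 0.1:
            self.dx, self.dy = self.rng.choice([-1, 1]), self.rng.choice([-1, 1])
        self.x += self.dx
        self.y += self.dy

def collide(player_size, npc):
    """Outcome of contact: smaller squirrels get eaten; others hurt the player."""
    return "eaten" if npc.size < player_size else "player hurt"

rng = random.Random(0)
squirrel = Squirrel(0, 0, size=5, rng=rng)
squirrel.update()
```

Nothing here represents a goal, a belief, or a welfare level, which is why the physical stance seems adequate.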

The NPC squirrels in this game could be adequately described by what Daniel Dennett calls the "physical stance": behaviors comparable to particles moving around or other crude physical processes. (These are the "dumb particle updates" from which all higher-level stances appear as emergent phenomena.) For instance, the squirrel game could be seen to resemble planets in space. If a big planet like Earth runs into a small asteroid, the asteroid is absorbed and makes Earth somewhat bigger. If Earth runs into a planet bigger than itself, it gets blasted apart and damaged. We could think of plenty of other physical analogies, like water droplets collecting together.

In Super Mario Bros., Goombas and Koopa Troopas can be seen purely with the physical stance, because they just walk in one direction until they hit an obstacle, at which point they go the opposite way. A bouncing ball is comparable (indeed, arguably somewhat more complicated due to acceleration, friction, etc.).
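The walk-and-reverse rule can be written as a single update function. This is a hypothetical one-dimensional sketch, not Nintendo's code. The level layout is made up, and turning around is assumed to take one tick.

```python
def goomba_step(x, direction, is_blocked):
    """One tick: step forward, or reverse direction if the next spot is blocked."""
    if is_blocked(x + direction):
        return x, -direction
    return x + direction, direction

def blocked(pos):
    return pos <= 0 or pos >= 5  # made-up level: walls at positions 0 and 5

x, direction = 2, 1
positions = []
for _ in range(7):
    x, direction = goomba_step(x, direction, blocked)
    positions.append(x)
# positions == [3, 4, 4, 3, 2, 1, 1]
```

A bouncing ball would need at least a velocity and a gravity term on top of this, which is why it can be the more complicated of the two.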

Non-animal-like environmental features of games could be seen as comparably sophisticated, such as the dangerous water drips or falling rocks in Crystal Caves. Each level of Crystal Caves also features an air station, which explodes if the game's protagonist accidentally shoots it. The behavior of this air station is not much simpler than that of the most basic game enemies (after all, the air station "dies" if shot, although it doesn't move around or cause damage to the protagonist), but it can be modeled entirely with the physical stance.

Other NPCs are a little more complex. For instance, the Hammer Bros. in Super Mario Bros. turn to face Mario, and if Mario waits long enough, they begin to chase after Mario. This behavior could put them closer to the level of the "intentional stance" (they have beliefs about where Mario is located, and they have the goal of hammering him, so they move in order to position themselves to better achieve that goal). Many advanced game AIs are best described by the intentional stance, even if they don't use sophisticated academic AI algorithms.
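A hypothetical sketch of the Hammer Bros. behavior described above makes the contrast with the Goomba visible. Here the NPC's update depends on where Mario is, which plays the role of a belief, and moves it toward him, which plays the role of a goal. The `patience` threshold is an assumption, not a figure from the actual game.

```python
class HammerBro:
    """Faces Mario every tick; starts chasing after waiting long enough."""

    def __init__(self, x, patience=300):
        self.x = x
        self.patience = patience  # assumed number of ticks before giving chase
        self.ticks = 0
        self.facing = -1

    def update(self, mario_x):
        # "Belief" about where Mario is determines which way to face.
        self.facing = 1 if mario_x > self.x else -1
        self.ticks += 1
        if self.ticks >= self.patience:
            self.x += self.facing  # "goal": close the distance to Mario
        return self.facing

bro = HammerBro(10, patience=3)
for _ in range(3):
    bro.update(mario_x=0)
```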

Of course, that something can fruitfully be modeled by the intentional stance doesn't obviously imply that we should care about it ethically. Many computing operations outside the realm of video games also seem to require the intentional stance. Load balancers, query optimizers, and many other optimization processes are best modeled by considering what the system knows, what it wants to achieve, and predicting that it will act so as to best achieve that goal (within the space of possibilities that it can consider). Search engines are probably best modeled by the intentional stance; given beliefs about what the user was looking for, they strive toward the goal of providing the best results (or, more pragmatically, results that will lead to satisfied-seeming user behavior). Outside the computing realm, we could see corporations as intentional agents, with beliefs about how to best achieve their goals of maximizing shareholder value.

If we're at least somewhat moved by the intentional stance, we might decide to care to a tiny degree about query optimizers and corporations as well as video-game AIs. I just wanted to make sure we realized what we're signing up for if we include intentional behavior on our list of ethically relevant characteristics. The fact that video-game characters look more like animals than a search engine does might bias our sentiments. (Or we might decide that superficial resemblance to animals is not totally morally irrelevant either.)

Planning AIs

Dennett (2009) explains that the intentional stance treats a thing "as an agent of sorts, with beliefs and desires and enough rationality to do what it ought to do given those beliefs and desires" (p. 3). Dennett (2009) mentions a chess-playing computer as a prototypical example of an intentional system: "just think of them as rational agents who want to win, and who know the rules and principles of chess and the positions of the pieces on the board. Instantly your problem of predicting and interpreting their behavior is made vastly easier than it would be if you tried to use the physical or the design stance" (pp. 3-4).

An AI planning agent is in some sense a crystallization of an intentional system: "Given a description of the possible initial states of the world, a description of the desired goals, and a description of a set of possible actions, the planning problem is to synthesise a plan that is guaranteed (when applied to any of the initial states) to generate a state which contains the desired goals (such a state is called a goal state)."

And planning algorithms are sometimes used in game AI (Champandard 2013). Following are some examples from Champandard (2013):

F.E.A.R. is the first game known to use planning techniques, based on the work of Jeff Orkin. The enemy AI relies on a [Stanford Research Institute Problem Solver] STRIPS-style planner to search through possible actions to find a world state that matches with the goal criteria. Monolith's title went on to spawn a franchise of sequels and expansions, and inspired many other games to use STRIPS-style planning too — in particular the S.T.A.L.K.E.R. series, CONDEMNED, and JUST CAUSE 2.

There aren't very many games that use such planners in comparison to other techniques, but the AI in those games has been well received by players and reviewers. [...]

Guerrilla Games implemented a planner inspired by [Simple Hierarchical Ordered Planner] SHOP into KILLZONE 2, and continues to use the technology in sequels including KILLZONE 3 and presumably 4. [...]

A utility system is the term used to describe a voting/scoring system, and they are often applied to sub-systems of games like selecting objects/positions based on the results of a spread-sheet like calculation. It's interesting to establish parallels between STRIPS-based planners and utility-based systems, since both have a strong emphasis on emergent behavior that's not intended to be controlled top-down by designers.

[...] The SIMS franchise is famous for its use of utility systems, but in the 3rd major iteration, the game puts more focus on a top-level hierarchy and keeps the utility-based decisions more isolated. This was necessary for performance reasons, but also makes the characters more purposeful.
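A utility system in the sense of the quoted passage can be sketched as a scoring function plus an argmax. The Python below is a hypothetical illustration, not code from The Sims. The need names, the objects, and all the numbers are made up. Each object "advertises" how much it would restore each need, and gains are weighted by how unmet each need currently is.

```python
# Hypothetical needs on a 0-1 scale (1 = fully satisfied).
needs = {"hunger": 0.2, "energy": 0.7, "fun": 0.5}

# Hypothetical advertisements: how much each object changes each need.
adverts = {
    "fridge": {"hunger": 0.6},
    "bed": {"energy": 0.8},
    "tv": {"fun": 0.4, "energy": -0.1},
}

def utility(obj, needs, adverts):
    """Score an object: each advertised gain weighted by how unmet the need is."""
    return sum((1.0 - needs[n]) * gain for n, gain in adverts[obj].items())

best = max(adverts, key=lambda o: utility(o, needs, adverts))
# fridge: 0.8 * 0.6 = 0.48; bed: 0.3 * 0.8 = 0.24; tv: 0.5 * 0.4 - 0.3 * 0.1 = 0.17
```

The character "decides" to eat without any designer writing an explicit "eat when hungry" rule. That is the emergent, bottom-up flavor the quoted passage attributes to utility systems.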

We can see how beliefs and desires are central to STRIPS planning in the following Planning Domain Definition Language example from Becker (2015):

(define (problem move-to-castle)
  (:domain magic-world)
  (:objects
    npc - player
    town field castle - location)
  (:init
    (border town field)
    (border field castle)
    (at npc town))
  (:goal (and (at npc castle))))
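The PDDL problem relies on a domain file (magic-world) that isn't shown. The Python sketch below therefore assumes a single hypothetical move action: the NPC can go from one location to another if the first borders the second. Under that assumption, a tiny STRIPS-style planner solves the problem by breadth-first forward search. States are sets of facts, and each action has preconditions, an add list, and a delete list. This is an illustration of the technique, not a PDDL toolchain.

```python
from collections import deque
from itertools import permutations

# Facts are tuples such as ("at", "npc", "town"); a state is a frozenset of facts.
LOCATIONS = ["town", "field", "castle"]
INIT = frozenset({("border", "town", "field"), ("border", "field", "castle"),
                  ("at", "npc", "town")})
GOAL = {("at", "npc", "castle")}

def move_actions():
    """Ground the assumed move(npc, from, to) action for every location pair."""
    for a, b in permutations(LOCATIONS, 2):
        yield (f"move npc {a} {b}",
               {("at", "npc", a), ("border", a, b)},  # preconditions
               {("at", "npc", b)},                     # add list
               {("at", "npc", a)})                     # delete list

def plan(init, goal, actions):
    """Breadth-first forward search; returns a list of action names or None."""
    actions = list(actions)
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:  # every goal fact holds: this is a goal state
            return steps
        for name, pre, add, delete in actions:
            if pre <= state:
                nxt = (state - delete) | add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, steps + [name]))
    return None

result = plan(INIT, GOAL, move_actions())
# result == ['move npc town field', 'move npc field castle']
```

The `:init` facts act as the NPC's beliefs about the world and `:goal` as its desire. The planner's job is to find actions that connect the two.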

Orkin (2006) explains regarding the game F.E.A.R. that NPCs may have multiple goals (p. 6): "These goals compete for activation, and the A.I. uses the planner to try to satisfy the highest priority goal." If a soldier, assassin, and rat were all given the two goals of Patrol and KillEnemy, the behavior of the characters would differ based on their different possible actions. Orkin (2006), p. 6:

The rat patrols on the ground like the soldier, but never attempts to attack at all. What we are seeing is that these characters have the same goals, but different Action Sets, used to satisfy the goals. The soldier’s Action Set includes actions for firing weapons, while the assassin’s Action Set has lunges and melee attacks. The rat has no means of attacking at all, so he fails to formulate any valid plan to satisfy the KillEnemy goal, and he falls back to the lower priority Patrol goal.
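A hypothetical Python re-creation of the behavior Orkin describes shows how shared goals plus different action sets yield different behavior. The goal names follow Orkin. The action names and the one-step "planning" are simplifications assumed for illustration; a real planner would search over chains of actions.

```python
GOALS = [("KillEnemy", 2), ("Patrol", 1)]  # (goal, priority); higher wins

# Each character's action set: action name -> goal that action can satisfy.
ACTION_SETS = {
    "soldier": {"FireWeapon": "KillEnemy", "Walk": "Patrol"},
    "assassin": {"Lunge": "KillEnemy", "Walk": "Patrol"},
    "rat": {"Walk": "Patrol"},
}

def choose(character):
    """Try goals from highest to lowest priority; return (goal, action) for the
    first goal that some action in the character's set can satisfy."""
    actions = ACTION_SETS[character]
    for goal, _priority in sorted(GOALS, key=lambda g: g[1], reverse=True):
        for action, satisfies in actions.items():
            if satisfies == goal:
                return goal, action
    return None

# choose("rat") finds no plan for KillEnemy and falls back to ("Patrol", "Walk").
```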

Does the intentional stance cover too much?

Dennett (2009):

Like the lowly thermostat, as simple an artifact as can sustain a rudimentary intentional stance interpretation, the clam has its behaviors, and they are rational, given its limited outlook on the world. We are not surprised to learn that trees that are able to sense the slow encroachment of green-reflecting rivals shift resources into growing taller faster, because that’s the smart thing for a plant to do under those circumstances. Where on the downward slope to insensate thinghood does ‘real’ believing and desiring stop and mere ‘as if’ believing and desiring take over? According to intentional systems theory, this demand for a bright line is ill-motivated.

Peter Carruthers notes that on Dennett's view, "it is almost trivial that virtually all creatures [...] possess beliefs and desires, since the intentional stance is an undeniably useful one to adopt in respect of their behavior."

But could we also apply the intentional stance to even simpler systems? Consider these examples:

- A washer is tied to a string. If you hold the string, the washer will always orient to face down towards the Earth. If you pull up the washer, it will fall back down if it can. You can put the washer on a table and "thwart" its efforts to hit the floor.

- An electron is circling around a positive charge. If you push the electron away, it tries to go back toward the positive charge. (Thanks to a friend for inspiring this example.) In The House Bunny, a character says: "I want to be your girlfriend more than an electron wants to attach to a proton," which suggests that attributing a goal to the electron makes sense.

Dennett himself would perhaps reject these examples. Dennett (2009): "In general, for things that are neither alive nor artifacts, the physical stance is the only available strategy, though there are important exceptions".

However, I maintain that the separation among the physical, design, and intentional stances is not clear. Maybe it's easier to model the behavior of two electrons as "they want to avoid each other" than it is to deploy the equations of physics to describe their behavior more precisely. And consider high-level emergent properties of non-artifactual physical systems, like the angle of repose of a pile of granular material. We can easily predict the angle that such material will take by imagining it as a system "designed" to achieve that angle; it would be vastly harder to model the physical interactions of individual grains in producing that emergent angle.

So the boundaries between the physical, design, and intentional stances aren't perfectly clear. Maybe a relevant difference is one of intelligence on the part of the intentional agent? For instance, maybe electrons seeking protons aren't very smart at avoiding obstacles. And neither are Hammer Bros. Still, ability to achieve one's goals isn't obviously crucial. Imagine that you have an overwhelming desire to discover the theory of everything that describes physics, but you can't even understand first-grade math due to a learning disability. Or suppose that your loved one has died, and you have an overwhelming desire to see her again, even though this isn't possible for you (due to not having reconstructive nanotech or whatever). Do your desires not matter very much in these cases because you're not smart enough to accomplish them?

Minimum description length?

Note: I haven't read most of the literature on the intentional stance. Maybe the following idea has been discussed already, or maybe it's wrongheaded.

One idea for deciding when a high-level stance toward a physical system is justified might be to use something like the minimum description length (MDL) principle: does describing the physical system with the "intentional stance" (in some description language) allow you to compress the observed data enough to outweigh the added number of bits required to specify the intentional stance itself? In other words, we can treat the intentional stance as a model that helps explain the data, and the question is whether

(description length of the model) + (description length of the data compressed using the model) < (description length of the raw data).

If yes, then the intentional stance is justified.

Here's a possible example. Suppose that the observed data is that a moose walks around until it finds a river, at which point it takes a drink. If we try to describe the motion of this moose purely as if the moose were a physical object, we have to specify its location and trajectory as one out of a large number of possibilities. However, if we use the intentional stance to regard the moose as having a desire to drink and beliefs about the location of the nearest river, we can significantly constrain the set of likely trajectories the moose will take. The moose's exact physical trajectory will still have "noise" that our model couldn't predict, but this noise is cheaper to specify given our high-level prediction about where the moose would go. (This is analogous to the idea from MDL that it may require fewer bits to describe a data set by first applying a regression model to the data and then specifying the residuals of that regression model, rather than describing all the data points without using any model.)
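To make the inequality above concrete, here is a toy Python sketch (not a standard MDL implementation) in which a straight-line regression plays the role of the "model," echoing the regression analogy in the moose example. The bit costs (16 bits per raw value, 32 bits per line parameter, a fixed-width signed code for residuals) and all function names are arbitrary assumptions chosen for illustration.

```python
import random

RAW_BITS = 16          # assumption: each raw observation is sent as a 16-bit unsigned integer
PARAM_BITS = 32        # assumption: each line parameter costs 32 bits to specify
WIDTH_HEADER_BITS = 5  # bits announcing the residual width (widths up to 31)


def raw_length(ys):
    """Description length of the raw data: a fixed number of bits per value."""
    return len(ys) * RAW_BITS


def fit_line(ys):
    """Ordinary least-squares fit of y = a + b*t for t = 0, 1, ..., n-1."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two observations to fit a line")
    t_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((t - t_mean) ** 2 for t in range(n))
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(ys))
    b = sxy / sxx
    return y_mean - b * t_mean, b


def model_length(ys):
    """Two-part code: the model's parameters, then the data encoded as residuals."""
    a, b = fit_line(ys)
    residuals = [y - round(a + b * t) for t, y in enumerate(ys)]
    width = max(abs(r) for r in residuals).bit_length() + 1  # +1 bit for the sign
    return 2 * PARAM_BITS + WIDTH_HEADER_BITS + len(ys) * width


def model_is_justified(ys):
    """The MDL test: model cost plus compressed data beats the raw encoding."""
    return model_length(ys) < raw_length(ys)


rng = random.Random(0)
trend = [1000 + 50 * t + rng.randint(-3, 3) for t in range(200)]  # regular, "goal-like" data
noise = [rng.randint(0, 2**16 - 1) for _ in range(200)]            # no exploitable pattern
```

For the regular series, the line plus small residuals costs far fewer bits than the raw values, so the model is "justified"; for the patternless series the residuals are as wide as the raw data, and the model's own cost tips the balance against it. Different encoding choices could shift where that line falls.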

Under this MDL criterion, the intentional stance would be more appropriate for systems where it can explain a wide range of observations, since the one-time cost of describing the model would be repaid by compressing a lot of observational data. That said, I suspect this MDL criterion would be sensitive to exactly how the model and data are encoded. Plus, it's not obvious that our ethical intuitions should conform to an abstract criterion like this.

And what happens if a not-explicitly-intentional model can better compress the moose's behavior than an intentional model can? For example, maybe specifying the moose's entire connectome explains its long-run behavior much more precisely than merely specifying that it has such-and-such beliefs and desires, leading the connectome model to have lower total description length than the intentional-stance model.

Intentionality is not binary

In "Consciousness, explanatory inversion, and cognitive science", John Searle assumes as step 1 of an argument that "There is a distinction between intrinsic and as-if intentionality" (p. 586). "As-if" intentionality is the metaphorical kind that we use to ascribe goal-directed or mental states to a system, such as when we say that a lawn is thirsty for water. But, claims Searle, this is different from the intrinsic intentionality that animals like humans have when they're thirsty.

Like Dennett, I maintain there is no fundamental divide. Goal-directed behavior is not different in kind from mechanical behavior; rather, goals are conceptual frames that we impose on mechanical systems. It's just that for some systems the intentional stance makes more sense than for others.

Searle compares the brain in our heads, which has intrinsic intentionality, with the "brain in our guts", which has as-if intentionality (pp. 586-87):

Now does anyone think there is no principled difference between the gut brain and the brain brain? I have heard it said that both sorts of cases are the same; that it is all a matter of taking an "intentional stance" toward a system. But just try in real life to suppose that the "perception" and the "decision making" of the gut brain are no different from the real brain.

I don't claim there's no difference between the gut brain and the brain brain—it's just that the difference is a matter of degree rather than kind. Distinctions between "mechanical" versus "goal-directed" systems are continuous gradations along dimensions of complexity, intelligence, robustness, and metacognition.

Searle claims that denying the distinction opens up a reductio (p. 587):

If you deny the distinction it turns out that everything in the universe has intentionality. Everything in the universe follows laws of nature, and for that reason everything behaves with a certain degree of regularity, and for that reason everything behaves as if it were following a rule, trying to carry out a certain project, acting in accordance with certain desires, and so on. For example, suppose I drop a stone. The stone tries to reach the center of the earth, because it wants to reach the center of the earth, and in so doing it follows the rule S = (1/2) g t².

But Searle's modus tollens is my modus ponens. Searle has outlined one of my main points in this essay.

Searle encourages cognitive science to adopt an "inversion analogous to the one evolutionary biology imposes on preDarwinian animistic modes of explanation" (p. 585). He says simple cognitive processes like the vestibulo-ocular reflex don't deserve to be thought of as goal-directed. Rather than eyes moving with the goal of stabilizing vision, eyes just move mechanically, and evolution has selected mechanisms such that they achieve evolutionary ends (p. 591). But once again, I maintain there isn't obviously a sharp distinction between selection and goal-directed cognition. Suppose we're choosing where to go to college. This is a paradigm case of a goal-directed process: We have criteria and long-term plans, and we evaluate how well each college would score along those dimensions. But inside our brains, it's a mechanical-seeming selection process, in which inclinations toward different colleges compete, and the strongest wins. Likewise, an AI agent might engage in goal-directed behavior via an evolutionary subroutine to refine certain action parameters. In general, we could think of the "goal" as the fitness/objective function and "selection" as the cognitive processes that pick out a candidate that best achieves the goal. Both biological evolution and human-conscious goal-seeking involve both components.
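A minimal Python sketch of that last point, assuming a hypothetical agent tuning a single action parameter (a launch angle): the "goal" is just an objective function (projectile range on flat ground, ignoring air resistance), and the "selection" is a mechanical loop that ranks candidates, keeps the better half, and mutates them. This is a generic mutation-and-selection scheme picked for illustration, not a specific published algorithm.

```python
import math
import random


def objective(angle_deg, speed=20.0, g=9.81):
    """The 'goal': range of a projectile launched at angle_deg on flat ground, no drag."""
    return speed ** 2 * math.sin(math.radians(2 * angle_deg)) / g


def evolve(fitness, generations=200, pop_size=20, sigma=2.0, seed=0):
    """Mechanical selection: rank candidate angles by fitness, keep the top half, mutate."""
    rng = random.Random(seed)
    population = [rng.uniform(0.0, 90.0) for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)  # selection is nothing but sorting
        survivors = population[: pop_size // 2]
        # Variation: each survivor yields one Gaussian-mutated offspring, clamped to [0, 90].
        offspring = [min(90.0, max(0.0, a + rng.gauss(0.0, sigma))) for a in survivors]
        population = survivors + offspring
    return max(population, key=fitness)


best_angle = evolve(objective)
```

The loop never "wants" anything, yet an observer would naturally describe its output (an angle near 45 degrees, which maximizes range under these assumptions) as the agent aiming for maximum distance.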

State machines and emotion

What is "emotion"? The word means different things to different people, and as Scherer noted above, emotion includes many components. One basic aspect of emotion is that it's a state of the brain in which the organism will incline toward certain actions in certain situations. For example, given the emotion of "hunger", the organism will incline toward the action of "eating" if food is present. Once it has eaten, the organism transitions to an emotional state of "full", and from the "full" state, the organism won't eat even if food is present.

Sketched in this way, emotions resemble states in a finite-state machine. This comparison has been made by other authors, including Ehmke (2017). For example, "hungry" is a state, and when presented with the input that "food is available", the organism eats (which is the transition arrow of the state machine), and then the organism arrives at the "full" state.

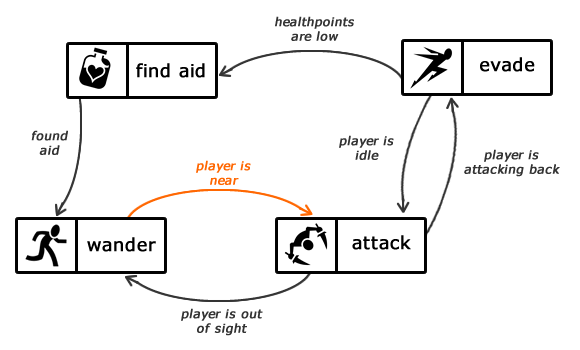
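The hungry/full example can be written as a table-driven finite-state machine. This Python sketch is illustrative only; the "time passes" transition from "full" back to "hungry" is an assumption added so the machine can cycle, and the function names are hypothetical.

```python
# Transition table: (current state, input) -> (action taken, next state).
# The ("full", "time passes") entry is an added assumption, not from the text.
TRANSITIONS = {
    ("hungry", "food available"): ("eat", "full"),
    ("full", "food available"): ("ignore food", "full"),
    ("full", "time passes"): ("wait", "hungry"),
}


def step(state, stimulus):
    """Look up the transition; unlisted (state, input) pairs leave the state unchanged."""
    return TRANSITIONS.get((state, stimulus), ("do nothing", state))


def run(state, stimuli):
    """Feed a sequence of inputs to the machine and record the action chosen for each."""
    actions = []
    for stimulus in stimuli:
        action, state = step(state, stimulus)
        actions.append(action)
    return actions, state


actions, final_state = run("hungry", ["food available", "food available", "time passes"])
```

Here the organism eats once, ignores the second helping because it is now "full", and later returns to "hungry", which is exactly the state-dependent inclination described above.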

Game AIs sometimes use finite-state machines to control their behavior as well. Bevilacqua (2013) gives a helpful example:

Of course, the anthropomorphic labels given to these states shouldn't be mistaken for greater complexity than is actually present in the NPC code. The state machine is a clean way to organize a lot of if-then (or "stimulus-response") rules (Nystrom 2009-2014).

Unlike the goals of planning agents, the goals of a state-machine-based NPC aren't explicitly represented; rather, they can be inferred from how the agent behaves.
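As a rough illustration (not Bevilacqua's or Nystrom's code), here is a hypothetical guard NPC in Python whose states are nothing more than labels on a few if-then rules. The event names and the flee threshold of 30 health points are made-up values. No variable stores a goal such as "stay alive"; an observer could only infer one from the guard fleeing when its health is low.

```python
from dataclasses import dataclass, field

FLEE_BELOW = 30  # hypothetical health threshold for switching to "flee"


@dataclass
class GuardNPC:
    """Hypothetical guard; its 'moods' are just names for if-then rules."""
    health: int = 100
    state: str = "patrol"
    animations: list = field(default_factory=list)

    def on_event(self, event, damage=0):
        if self.state == "dead":
            return self.state  # a dead NPC ignores all further input
        if event == "hit":
            self.health -= damage
            self.animations.append("recoil")  # scripted reaction to injury
            if self.health <= 0:
                self.state = "dead"
            elif self.health < FLEE_BELOW:
                self.state = "flee"
            else:
                self.state = "attack"
        elif event == "sees player" and self.state == "patrol":
            self.state = "attack"
        elif event == "loses player" and self.state in ("attack", "flee"):
            self.state = "patrol"
        return self.state
```

For example, a guard that sees the player attacks; after taking 50 and then 30 damage it switches to "flee"; a further 20 damage kills it, and it recoils on each hit.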

Mysterious complexity

Often when a system is simple enough that people can understand how it works, people stop caring about it. When we can see something operating mechanically, we trigger the "inanimate object" viewpoint in our brains rather than the "other mind" viewpoint. Vivisectionists formerly cut open animals without anaesthesia because of the (technically correct though ethically misguided) belief that animals were "just machines."

The "AI effect" is similar: As soon as we understand how to solve a cognitive problem, that problem stops being "AI." As Larry Tesler said: "Intelligence is whatever machines haven't done yet."

Inversely, when a system is large, complicated, and arcane, it can feel to us like magic. Suddenly we can dismiss our prejudices against understandable processes and instead be awed by the mystery of the unknown. The fact that our brains are so big and messy is perhaps a main reason we attribute mysterious consciousness to our fellow humans. Because we haven't understood the mechanical steps involved, we can retain the "magic consciousness" feeling.

Many video games are bulky and massively complex as well. Here are some estimates from two articles:

| Game | Lines of code |
|---|---|
| Quake II | 136,000 |
| Dyad | 193,000 |
| Quake III | 229,000 |
| Doom 3 | 601,000 |
| Rome 2 | 3,000,000 |

The article on Rome 2 adds that each of the game's soldiers has 6,000-7,000 polygons and 45 bones or moving parts. The game's lead designer, James Russell, explained: "Games are arguably the most sophisticated and complex forms of software out there these days."

Of course, code in video games has to run everything about the game world, and the amount of code devoted specifically to NPC behavior is a tiny fraction of the total. Still, even just the NPC systems may become messy enough that our intuitions that "this can't be sentient" give way to more magical ideas about emergence of consciousness.

We should bear in mind that codebase size is not equivalent to sophistication, intelligence, or moral importance. Some extremely powerful algorithms are really simple, while some trivial operations can be made complicated by adding a lot of frivolous or inelegant appendages.

Appearance vs. implementation

The main reason we don't care intrinsically about people being stabbed to death in movies or plays is that these media use deception to fool us. What looks like a sword in a man's chest in a play is actually a sword sticking under his armpit. When actors pretend to cry out in agony, they are not actually distraught to any significant extent. In other words, what looks bad at first glance is not something we actually consider bad when we know all the facts.

Video games are somewhat different, because in their case, the NPCs really do "get hurt." NPCs really do lose health points, recoil, and die. It's just that the agents are sufficiently simple that we don't consider any single act of hurting them to be extremely serious, because they're missing so much of the texture of what it means for a biological animal to get hurt.

There is some degree of "acting" going on in video games when characters play out a scripted sequence of movements that would indicate conscious agony in animals but that is mainly a facade in the video game because the characters don't have the underlying machinery to compute why those actions are being performed. For instance, pain might cause a person to squint his eyes and scream because of specific wiring connecting pain to those movements. In a game, a character can be programmed to squint and scream without anything going on "under the hood." The usual correlation between external behavior and internal algorithms is broken, just as in the case of movie acting.

The same reasoning suggests that recordings of video games (such as screenshots and gameplay walkthroughs on YouTube) have a less significant moral status than playing the game itself, because the behaviors are all "acting," without any of the underlying agent-behavioral computations going on. That said, insofar as the image rendering of gameplay in a YouTube video still traces the outlines of what happened in the game, if only in an adumbral way, is there at least an infinitesimal amount of moral significance in the video alone? A more forceful example would help clarify: Is there any degree at all to which it would be wrong to repeatedly play a video of a person enduring a painful death, even if no one was watching it, if only because the pattern of pixels being generated has some extremely vague resemblance to the pattern of activity in the person's body during the experience? I don't know how I feel about this, but either way, I think mere videos of something bad don't rank high on the list of moral priorities.

I once saw a comment that said something to this effect: "I was going to 'like' this post, but it has 69 'like's already, and I don't want to ruin that!" This seems to be a relatively clear case where mere appearances bear essentially no relation to a moral evaluation. Whereas NPCs do execute extremely simple versions of the types of processes that comprise emotions in animals, the digits "69" do not implement any obvious version of sensory pleasure, not even in an extremely simple form. (Of course, one still might invent joke interpretations of those digits according to which they represent pleasure, but this is probably no more true for "69" than for "70".)

An argument against mere images having moral standing

Suppose we film an actor who feigned a painful death in a movie scene but was really enjoying the acting experience. The acting is very convincing, and the video itself is indistinguishable from a video of a real, painful death. In fact, suppose there exists another video of an actual, painful death that is pixel-by-pixel, frame-by-frame identical to the pretend one. Then if we replay the pretend video, are we "tracing the outlines" of the awful real death or the enjoyable fake one?

To some extent, this puzzle resembles the underdetermination of the meaning of a computation in general. We always have to decide what interpretation to apply to a set of symbol movements, though typically, unless the computational system is extremely simple, there's only one interpretation that's not hopelessly contorted in its symbol-to-meaning mappings.

Maybe an important feature of a morally relevant agent is counterfactual robustness. Hurting an NPC matters because if you hadn't hurt it, it wouldn't have recoiled, and if you had hurt it in a different place, it would have recoiled there rather than here. Another possible difference between interactive video games and fixed videos is that in an NPC, there are computations going on "under the hood" of the agent, in its "mind," telling it how to react. In contrast, video frames are collections of stored pixel values. If the violent video were not statically recorded but dynamically computed based on some algorithm, at that point I might indeed start to become concerned.
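A toy Python contrast, with hypothetical names, between a fixed recording and a dynamically computed reaction: the recording produces the same frames whatever input it receives, while the NPC's output changes when the hit location changes. That input dependence is the counterfactual robustness described above.

```python
RECORDED_FRAMES = ("idle", "recoil at chest", "fall")  # hypothetical stored video


def replay_recording(hit_location):
    """A recording: hit_location is ignored, so the output cannot depend on it."""
    return list(RECORDED_FRAMES)


def npc_reaction(hit_location):
    """A hypothetical NPC: the reaction is computed from the input each time."""
    if hit_location is None:  # not hit at all, so no recoil
        return ["idle", "idle", "idle"]
    return ["idle", f"recoil at {hit_location}", "stagger"]
```

Had the player not fired, or fired at a different spot, `npc_reaction` would have produced different frames, whereas `replay_recording` would have shown the same chest wound regardless.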

An actor can enjoy the portrayal of being hurt because his brain includes extra machinery that allows him to know how to mimic injury without actually being injured; this machinery is not present in an NPC. Is there any extent to which portions of the actor's body do actually suffer a tiny bit by faking death, and they're just washed out in his brain's overall assessment?

I should add that sometimes actors do take on the moods of the characters they're playing to a nontrivial degree. Indeed, this is the premise behind the method acting school of thought. Two examples:

- Angelina Jolie "preferred to stay in character in between scenes during many of her early films, and as a result had gained a reputation for being difficult to deal with."

- When shooting The Aviator, Leonardo DiCaprio reawakened his childhood obsessive-compulsive disorder (OCD). According to Professor Jeffrey Schwartz: "There were times when it was getting not so easy for him to control it. By playing Hughes and giving into his own compulsions, Leo induced a more severe form of OCD in himself. There is strong experimental evidence this kind of switch can happen to actors who concentrate so hard on playing OCD sufferers."

Why visual display might matter

A few people do seem to feel moral revulsion about video-game violence, and presumably visual display is the reason for this: The images appear disgusting and may trigger our mirror neurons. Without visual display, very few people at present would care about NPCs. But the visual display seems "superficial," in the same way that a person's appearance doesn't matter compared with his inner feelings. So is visual display totally irrelevant for serious altruism?

One way we might think it matters is that it provides more unity to characters that would otherwise consist of lots of distributed operations by different parts of the computer. The display localizes the effects of those operations to a set of pixels on a screen that evolves slowly in time, analogous to biological creatures being a set of atoms that evolves in time according to the brain's algorithms. We might think of the NPC as "being" those pixels, with its "brain" computations happening non-locally and then being sent to the pixel body. Of course, this is just one possible conceptualization.

The screen could also be seen as playing some roles of consciousness. For instance:

- The global-workspace theory of consciousness proposes that some lower-level processes become "broadcast" to the rest of the brain in a serial fashion, and this corresponds to consciousness of that information. We could see the screen as playing a broadcasting function, and in fact, the global-workspace theory has been described using a theater metaphor, in which consciousness consists in what happens on stage, while lots of other computing may go on in the background as well. However, unlike in a global-workspace model, the screen doesn't have effects back on the brain itself. I think these effects back on the brain are pretty crucial, and indeed, Daniel Dennett considers consciousness to be the effects of "famous" or "powerful" brain coalitions on the rest of the system—not just a broadcast of the information that doesn't do anything. We would not say "I'm conscious of X!" if the global broadcast about X didn't transmit to our verbal brain centers.

- The higher-order theory postulates that consciousness consists in thoughts about one's mental states. A screen can be seen as a "thought about" the operations that the computer is performing, where instead of being written in words, the screen's message is written in pictures.

I think both of these interpretations are rather weak and strained, but perhaps they can at least convince us that what the screen does may not be completely irrelevant to a moral evaluation.

How important are NPCs?

I think video-game NPCs matter a tiny amount, even the present-day versions that lack reinforcement learning or other "real AI" abilities. They matter more if they're more complex, intelligent, goal-directed, adaptive, responsive to rewards and injuries, and animal-like. However, I don't think current game NPCs compete in moral importance with animals, even insects, which are orders of magnitude more sophisticated in brain complexity and intelligence. Maybe game NPCs would be comparable to plants, which, like the NPCs, mostly engage in scripted responses to environmental conditions. Some NPCs are arguably more intelligent than plants (e.g., game AIs may use A-star search), although sometimes plants, fungi, etc. are at least as competent (e.g., slime molds solving shortest-path problems).
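For a sense of what "more intelligent than plants" amounts to here, below is a minimal Python sketch of A* pathfinding on a small grid. The grid, coordinates, and 4-way unit-cost movement are assumptions for illustration; actual games may search richer structures such as navigation meshes.

```python
import heapq

GRID = [
    "....",
    ".##.",
    "....",
]  # hypothetical level: '.' is walkable floor, '#' is a wall


def a_star(grid, start, goal):
    """Return a shortest 4-connected path from start to goal as a list of cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance never overestimates with unit-cost 4-way moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]  # entries are (g + h, g, cell)
    came_from = {start: None}
    best_g = {start: 0}
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:  # walk parent links back to the start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > best_g[cell]:
            continue  # outdated entry; a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_g = g + 1
                if new_g < best_g.get(nxt, float("inf")):
                    best_g[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_g + h(nxt), new_g, nxt))
    return None


path = a_star(GRID, (0, 0), (2, 3))
```

The NPC finds a shortest route around the wall (six cells including both endpoints), and reports `None` when no route exists, which is a simple but genuine form of planning.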

Fortunately, the number of video-game NPCs in the world is bounded by some small constant times the number of people in the world, since apart from game testing, NPCs are only computed when people play video games, and a given game contains at most a couple of highly intelligent NPCs at once. The NPCs also run at about the same pace as human players. So it's unlikely game NPCs will be a highly morally significant issue just on the grounds of their present numerosity. (A more likely candidate for an algorithm that's significantly ethically problematic based primarily on a numerosity argument would be some silent, bulk process run at blazing speeds for instrumental purposes.)

At the same time, the levels of violence in video games are basically unparalleled, even compared against the spider-eat-insect and fish-eat-zooplankton natural world.

!['Exploding an [enemy's] head using the GHOUL engine in Soldier of Fortune.' This is a copyrighted image, but I believe its presence here qualifies as fair use for the reasons listed on the Wikipedia page where I found it and from which I quoted the description: https://en.wikipedia.org/wiki/File:Soldier-Of-Fortune-Violence.png The main way in which my use differs from Wikipedia's is that I'm commenting on video games in general rather than trying to identify this game in particular. Please contact me if you have any concerns about use of this image.](/wp-content/uploads/2014/09/Soldier-Of-Fortune-Violence.png)

NPCs typically come into existence for a few seconds and then are injured to the point of death. It's good that the dumb masses of NPC enemies like Goombas may not be terribly ethically problematic anyway, and the more intelligent "boss-level" enemies that display sophisticated response behavior and require multiple injuries to be killed are rarer. Due to the inordinate amounts of carnage in video games, game NPCs may, per second, be many times more significant than, say, a small plant. Perhaps a few highly elaborate NPCs approach the significance of the dumbest insects.

On the other hand, it's important not to overstate the degree of suffering of NPCs either. Their decrements to health upon injury and efforts to stay alive aren't significantly different from those of more mundane computational processes found in other software or other complex systems. We may be tempted to give video-game violence too much weight because its goriness exceeds what the algorithms behind the scenes actually warrant.

Video games of the future

If video games are not among the world's top problems at the moment, why talk about them? One reason is just that they provide a helpful case study with which to refine our intuitions regarding what characteristics make an agent ethically relevant. But a larger reason is that video games may become highly significant in the far future. As more sophisticated cognitive and affective components are added to NPCs in the coming decades, the moral importance of each NPC will grow. Likewise, the sheer number of video games might multiply by orders of magnitude, especially if humans colonize space and harness the stars for computational power.

Eliezer Yudkowsky's fun theory suggests that a valuable galactic future should involve ever-increasing challenges. Others like Nick Bostrom and Carl Shulman suggest a spreading of eudaemonic agents, who likewise value many rich, complex experiences.

Where would such fun, eudaemonic experiences take place? Presumably many would be within virtual-reality video-game worlds, filled with many NPCs. (Actually, in such a case, the distinction between player and non-player characters may break down, since all agents might be equally embedded in the simulated environment.) These NPCs could include immense numbers of insects, wild animals, and imaginary creatures. To make these NPCs lifelike, designers might build them to consciously suffer in sophisticated ways when injured. We can hope that future game designers heed this as an ethical concern, but that possibility is far from guaranteed, and it may depend on whether we encourage compassion for wild animals, insects, etc. in the shorter term.

What about enemy aggression?

According to the top-voted comment on the main reddit discussion of my argument:

[NPCs'] primary goal is to oppose the player and foil his attempts to progress through the game. They take steps to stay alive because it makes them more effective as an obstacle. If the player has a goal, and the NPC's have a goal of preventing the player from achieving that goal, then there is a collision of rights in an environment with an intrinsic competitive mechanism for resolving the situation.

The obvious oversight in this argument is that the player is the person who set up the bloody arrangement to begin with. Suppose you build a colosseum and hire a rival to fight you to the death. During the battle, it seems you have the right to kill your opponent because he's trying to kill you. But from a broader perspective, we can see that it was you who created the whole fight in the first place and thereby ensured that someone would die.

Do player characters matter?

In this piece I've focused on NPCs because they're more autonomous, numerous, and abused in video games than player characters (PCs). But do PCs also have moral significance? It's natural to assume that PCs are subsumed within the moral weight we place on the player herself; PCs might be significant to the same degree as a spoon, shovel, or other extension of the player's body. Any planning, goal direction, or behavior of the PC would already be morally counted in the brain of the player.

Possibly there are ways in which the PC could count a little extra beyond the human player, such as through visual reactions to injury, autonomous responses based on HP level, or automatically orienting to face an enemy. Regardless, the experiences of the human player probably matter more.

Comparison with biocentrism

Biocentrism proposes that all living organisms—animals, plants, fungi, etc.—deserve ethical consideration because they have (at least implicit) goals and purposes. Paul Taylor suggests that each organism matters because it's a "teleological centre of life." His "The Ethics of Respect for Nature" explains:

Every organism, species population, and community of life has a good of its own which moral agents can intentionally further or damage by their actions. To say that an entity has a good of its own is simply to say that, without reference to any other entity, it can be benefited or harmed.

This captures a similar idea as my point that game NPCs have at least implicit goals that can be thwarted when we injure them. While this might seem like a separate criterion from sentience—and Taylor clarifies that "the concept of a being's good is not coextensive with sentience or the capacity for feeling pain"—I think the distinction between sentient and non-sentient teleology is one of degree. Even fairly trivial systems exhibit a vanishing degree of self-reflection, information broadcasting, and other properties often thought to be part of sentience.

Taylor's biocentric theory assumes that life is good for organisms:

What is good for an entity is what "does it good" in the sense of enhancing or preserving its life and well-being. What is bad for an entity is something that is detrimental to its life and well-being.

But if biological organisms have their goals thwarted enough (as is the case for most plants and animals that die shortly after birth in the wild, as well as for survivors enduring frequent hardship), maybe they would be better off not having existed at all. Whether we disvalue conscious suffering or so-called "unconscious" goal frustration, it seems a real possibility that the disvalue of nature could exceed the value. Hence, even a biocentric theory isn't obviously aligned with ecological preservation.

J. Baird Callicott pointed this out:

Biocentrism can lead its proponents to a revulsion toward nature—giving an ironic twist to Taylor's title, Respect for Nature—because nature seems as indifferent to the welfare of individual living beings as it is fecund. Schweitzer, for example, comments that

the great struggle for survival by which nature is maintained is a strange contradiction within itself. Creatures live at the expense of other creatures. Nature permits the most horrible cruelties.… Nature looks beautiful and marvelous when you view it from the outside. But when you read its pages like a book, it is horrible. (1969, p. 120)

So even if one doesn't adopt a traditional sentiocentric viewpoint, one can still feel that wild-animal suffering (or, more precisely, wild-organism goal-frustration) is perhaps an overridingly important moral issue.

Taylor admits that he is "leaving open the question of whether machines—in particular, those which are not only goal-directed, but also self-regulating—can properly be said to have a good of their own." He confines his discussion only to "natural ecosystems and their wild inhabitants." In general, environmental ethics leaves out the "machine question," as David Gunkel calls it. Some, like Luciano Floridi, have challenged this focus on the biological. Floridi proposes an "ontocentric" theory of "information ethics" that covers both biological and non-biological systems.

Does NPC suffering aggregate?

Some people have an intuition that small pains like a pinprick don't add up to outweigh big pains like torture. I sympathize with this sentiment, though I'm not sure of my final stance on the matter. There's a counterargument based on "continuity," which says that one prick with a 3 mm pin is less bad than thousands of pricks with a 2 mm pin, and one prick with a 4 mm pin is less bad than thousands with a 3 mm pin, and so on. Eventually it seems like one stab with a sword should be less bad than some insanely big number of pinpricks. I'm personally not sure suffering is continuous like this or whether I would declare some threshold below which suffering doesn't matter.c

If you did think that pains only add up if they exceed some threshold of intensity, then does NPC suffering not matter because it's so tiny? I personally think NPC suffering should add up even if you have a threshold view. The reason is that I don't picture NPC suffering as being like a dust speck in your eye. Rather, I picture it as being really awful for the NPC. If the NPC were smarter, it would trade away almost anything to avoid being shot or slain with a sword. The reason this quasi-suffering has low moral weight is that the NPC is exceedingly simple and has only the barest traces of consciousness. Even though NPC suffering ends up having small total importance, I still put these numbers in the category of "major suffering" (relative to the organism), so I think these numbers should still add in a way that pinpricks may not.

To put it another way, I would do aggregation as follows. Let $w_i$ be the moral weight given to organism $i$ based on its complexity. Let the positive number $S_i$ be the suffering of that organism relative to its typical levels of suffering. Let $T$ be a cutoff threshold such that pains less intense than the threshold don't matter. I propose to aggregate like this:

$$\sum_i w_i \cdot \begin{cases} S_i & \text{if } S_i > T \\ 0 & \text{otherwise} \end{cases}$$

rather than like this:

$$\sum_i \begin{cases} w_i S_i & \text{if } w_i S_i > T \\ 0 & \text{otherwise} \end{cases}$$

because in the latter case, if $w_i$ is small, $w_i S_i$ will never exceed $T$, so even an NPC in agony won't count given a non-tiny threshold $T$.
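The difference between the two aggregation rules can be checked with a small Python sketch. The numbers (1,000 NPCs with weight 0.01 and suffering 9, one human pinprick with weight 1 and suffering 0.5, threshold 1) are invented purely for illustration.

```python
def aggregate_threshold_then_weight(weights, sufferings, threshold):
    """First rule: sum w_i * S_i over organisms whose own S_i exceeds T."""
    return sum(w * s for w, s in zip(weights, sufferings) if s > threshold)


def aggregate_weight_then_threshold(weights, sufferings, threshold):
    """Second rule: sum w_i * S_i over organisms whose weighted w_i * S_i exceeds T."""
    return sum(w * s for w, s in zip(weights, sufferings) if w * s > threshold)


# Hypothetical population: 1,000 NPCs in agony (S_i = 9) with tiny weight w_i = 0.01,
# plus one human with a pinprick (w_i = 1, S_i = 0.5); threshold T = 1.
weights = [0.01] * 1000 + [1.0]
sufferings = [9.0] * 1000 + [0.5]
T = 1.0

first = aggregate_threshold_then_weight(weights, sufferings, T)
second = aggregate_weight_then_threshold(weights, sufferings, T)
```

Under the first rule the NPCs' agony contributes about 90 units while the pinprick still falls below the threshold; under the second rule every weighted term falls below T, so the total is 0.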

The second of these equations would make more sense if you hold a view according to which suffering is a tangible thing that can be quantified in some absolute way. If NPCs have lower moral weight, it must be because they suffer less in a universal sense. But then they should be like pinpricks and should fall below the threshold T.

The first of the equations can make more sense if you instead hold my perspective, according to which consciousness is not a thing but is an attribution we make. We decide how much consciousness we want to see in various physical processes. Interpersonal utility comparisons are not absolute but are ultimately arbitrary, and the $w_i$ in the equation is interpreted as an interpersonal-comparison weighting based on brain complexity, not as a "true" statement about how much absolute suffering is being experienced. The organism-relative suffering for, say, an NPC being shot is high, because the NPC would do almost anything to avoid that outcome if it had the opportunity and cognitive capacity to make such tradeoffs. (Some NPCs, such as perhaps reinforcement-learning agents, might in fact have the cognitive capacity to make explicit tradeoffs between getting shot vs. other unfavorable outcomes.)

I don't think it's right to imagine ourselves experiencing an extremely tiny pain when thinking about what it's like to be an NPC, because the NPC itself would trade away almost anything to avoid what it's experiencing (if it were capable of thinking about such tradeoffs). This suggests that intense suffering would be a more apt analogy than minor suffering when trying to put ourselves in the place of an NPC that's being killed. Pain and pleasure don't have objective magnitudes; rather, we make up how much we want to care about a given physical system. The only reason I give an NPC (much) less weight than I give a human is because I have a prejudice to care more about complex and intelligent things—not because there's any objective sense in which a human suffers more than an NPC does.

A reader of this site wrote me the following comment regarding insect sentience (from an email, 30 Jun. 2018):

Many people agree that insects may well experience qualia, but they also believe that insects are not able to suffer or enjoy life anywhere near as much as a human can. My reply to that is usually something like "Do you think that an insect that hears a sound hears that sound much weaker than you do? Is that sound, that quale, weaker for the insect? Will a shout sound like a whisper to an insect? Do you think that the colors seen by a bee are much duller than those seen by you? If not, why would the pain that an invertebrate experiences when injured or the joy it feels when it eats food, be weaker than the pain and joy you experience?"

I like this framing. The colors that a bee sees aren't vastly grayer or duller than the colors we see. Rather, a bee's brain doesn't do as much high-level processing of its visual inputs as the human brain does. As one example, humans may explicitly categorize what they see into labeled objects, while if bees do categorization of their visual inputs, it's more implicit. So if we privilege human vision over bee vision, we do so because there's more going on in human vision than in bee vision, not because human vision is somehow "more intense" than bee vision. I contend that it's the same for pain. Human pain is not "more intense" than bee pain; rather, human pain just involves more total cognitive processes than bee pain does. It's hard for us to imagine "having fewer/simpler cognitive processes", because our brains inescapably have lots of complexity built in. Trying to imagine a simpler mind as a mind having less intense experiences is, in my opinion, a misleading attempt to grapple with the fact that we can't really imagine ourselves in the place of a mind very different from our own.

I agree that simpler organisms matter less than sophisticated ones, but I think it's not because the experiences of simpler organisms are "less intense". Rather, it's because their brains are less elaborate, with fewer and simpler cognitive processes responding to a given pain. As an analogy, compare a small community mourning the loss of a beloved person versus an entire nation grieving the death of someone admired. I wouldn't say that the grief in the latter case is "more intense"; rather, the grief is more widespread, has wider-ranging societal impacts, includes qualitatively different components not seen in the case of a single small community (such as coverage on the national news and trending hashtags on Twitter), etc. I'm trying to separate the dimension of "intensity" (how strong the emotion feels to the organism) from the dimension of "richness" (how sophisticated and complex the mind undergoing the experience is).

A final argument for my view goes as follows. Imagine a highly sentient post-human mind, enhanced to have vastly greater intelligence, insight, introspection, and so on as compared with any present-day human. If the emotional depth of this post-human is far superior to ours, would it be right to say that human torture is comparable to pinpricks relative to this post-human? And therefore maybe no number of humans in agony for years on end could outweigh five seconds of this post-human's agony? Maybe some people would agree with this, but my view is that both human agony and post-human agony count as "extreme suffering" (relative to the organism). I would give greater moral weight to one supersentient post-human than to one human, but I think both humans and post-humans in agony pass the threshold where suffering begins to count as extreme.

Should we aggregate suffering at all?

Sometimes the objection against aggregation goes further: It's claimed that not only does NPC suffering not aggregate, but no suffering does, not even human suffering. C. S. Lewis expressed the idea in The Problem of Pain (p. 116):

There is no such thing as a sum of suffering, for no one suffers it. When we have reached the maximum that a single person can suffer, we have, no doubt, reached something very horrible, but we have reached all the suffering there ever can be in the universe. The addition of a million fellow-sufferers adds no more pain.

My first response is that our ethical calculations don't have to refer to something "out there" in the world. To say that two people suffering is twice as bad as one person suffering is just to say that we want to care twice as much in the former case. It's perfectly sensible for our computational brains, or the computers we program, to make calculations on the basis of aggregated suffering. A deontological prohibition against lying also doesn't refer to any object "out there" but is still a perfectly coherent moral principle. I find it intuitively obvious that ethical concern should scale linearly with the number of instances of harm, and this is all I need in order to be persuaded.

Second, I would point out that many anti-aggregationists do aggregate suffering over time for a single individual. But if we dissolve the idea of personal identity, then aggregation within an individual over time should also be seen as aggregation over different "individuals", i.e., different person-moments. (A "person-moment" is the state of a person at a particular moment in time. For example, "John F. Kennedy at 9:03 am on 1962 Aug 15" was one person-moment. This individual was in many ways quite different from "John F. Kennedy at 4:36 pm on 1919 Feb 08".) If we don't aggregate over time within an individual, then we might, for example, prefer a 100-year lifetime of unbearable agony at intensity 99 over one minute of unbearable agony at intensity 100 followed by immediate death, because the 100 intensity is worse on a per-moment basis. We could rewrite C. S. Lewis's argument:

There is no such thing as a sum of suffering over a lifespan, for no person-moment suffers it. When we have reached the maximum that a single person-moment can suffer, we have, no doubt, reached something very horrible, but we have reached all the suffering there ever can be in the universe. The addition of a million other suffering person-moments at other points in the person's life adds no more pain.
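The intensity-99 vs. intensity-100 example can be made concrete with a toy calculation. The Python sketch below is purely illustrative: it assumes, as a hypothetical simplification, that suffering can be scored as a single intensity number per minute, and the names `summed_suffering` and `worst_moment` are made up for this example. Summing over person-moments ranks the brief agony as far less bad, while scoring only the worst moment ranks the century of agony as preferable, which is the counterintuitive verdict that refusing to aggregate over time leads to.

```python
# Toy contrast between two ways of scoring a stretch of suffering.
# Hypothetical simplification: suffering is one intensity number per
# person-moment (here, per minute), stored as (intensity, minutes) runs.

MINUTES_PER_YEAR = 365 * 24 * 60  # ignores leap years


def summed_suffering(segments):
    """Aggregate across person-moments: intensity times duration, summed."""
    return sum(intensity * minutes for intensity, minutes in segments)


def worst_moment(segments):
    """Anti-aggregationist score: only the single worst moment counts."""
    return max(intensity for intensity, _ in segments)


# Scenario from the text: 100 years at intensity 99 vs. one minute at 100
# followed by immediate death (so no further moments are counted).
long_agony = [(99, 100 * MINUTES_PER_YEAR)]
brief_agony = [(100, 1)]

for name, score in [("sum", summed_suffering), ("max", worst_moment)]:
    # A lower score means the scenario is judged less bad.
    less_bad = "long_agony" if score(long_agony) < score(brief_agony) else "brief_agony"
    print(f"{name}: long={score(long_agony)}, brief={score(brief_agony)}, "
          f"judged less bad: {less_bad}")
```

Running it prints "sum: long=5203440000, brief=100, judged less bad: brief_agony" followed by "max: long=99, brief=100, judged less bad: long_agony". The run-length (intensity, minutes) representation is only a convenience to avoid storing tens of millions of per-minute values.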

Finally, I would challenge the anti-aggregationist's confidence in the ontological concreteness of an "individual". Anti-aggregationists claim that the suffering of an individual is real, while aggregate suffering is a fictional construct. But this isn't the case. The only "real" things that exist are fundamental physical primitives: quarks, leptons, bosons, etc., or perhaps, more fundamentally, strings and branes. Anything else we may describe is a conceptual construct built from these basic components. Molecules are helpful conceptualizations of more fundamental particles behaving in coherent ways together. Likewise with cells, organs, organisms, societies, planets, galaxies, and so on. When we talk about the suffering of an individual, we're referring to an abstraction: the collection of a person's neurons firing in certain patterns that we consider to constitute "suffering" in some classification scheme. I don't see why we couldn't apply a similar abstraction to describe the collective suffering of many organisms, viewed as a coherent group. Maybe one difference is that neurons in a brain are more integrated and interdependent than people in a society, but in that case, couldn't we at least aggregate the collective suffering of a close-knit human community when they, for example, experience a common loss? Like a collection of neurons, they are all responding to an input stimulus in ways that trigger aversion, and these responses interact to form a whole that can be seen as larger than its parts.

To pump this intuition further, we could imagine hooking together the brains of all the community members, until even C. S. Lewis admits they form a single individual. Then slowly degrade the connections between community members. At what point do they stop being a single individual? Why can't their verbal and physical contact still count as communication within the collective brain?

For more on viewing consciousness at many layers of abstraction, see the next section.

Individuation vs. entitativity

The different components of a video-game character may be diffusely distributed. The NPC may have one set of functions controlling behavior, another set computing physics, another set performing graphics rendering, and so on. And perhaps some of these functions refer to shared libraries or DLLs, which are common to many different characters or objects in the game. So is each character really a separate individual, or are they all part of one big program?

Questions like these are not unique to video games. This section of another piece explores similar puzzles in biological contexts. Ultimately, there is just one multiverse, one unified reality. The way we slice it up when interpreting and describing it is up to us. Of course, some divisions seem more natural, like separating the United States from Russia at the Bering Strait. Many biological organisms can be separated based on physical localization, although slime molds, and even the bacteria in our guts, provide challenges to an absolutist approach of that type.

How do we count moral value if lower systems and higher systems can both be considered conscious? For instance, in the case of a China brain, both the individual Chinese citizens and the collective brain that they play out are conscious at the same time. We could further imagine the China brain as being one member of a larger Milky Way brain. And the brains of each Chinese citizen are themselves composed of many subcomponents that we may consider conscious in their own rights. How do we quantify the total amount of consciousness in such a system? The problem is analogous to quantifying the amount of "leaf-ness" in a fractal fern image. Do we just count the lowest layers, and let the big parts matter more because they have more smaller parts? But this ignores the way in which the big parts themselves form holistic units; a bunch of smaller parts arranged in a more random fashion would be less important. Do we just count the big brain as one more brain like the smaller brains? But presumably the China brain is more significant (or less significant??) than any individual Chinese citizen. I don't have a good proposal for how to proceed here. I do have an intuition that the lower-level components account for much of the total value, but this view might change.

These questions seem like something out of postmodernism or Hindu metaphysics,d but they have ethical significance. Our prevailing ethical paradigm for individuation focuses on humans and human-like animals as its central units of value because it's humans who have the most power and make claims about their consciousness and self-unity, not because humans are somehow ontologically special as a level of organization compared with lower and higher levels. We do also see some higher levels of organization assert their power and self-unity, including nation-states and corporations, though none of these has the machinery to organically generate the stance of claiming that "there's something it's like" to be itself, the way humans do.

Susan Blackmore describes the sense of self as an "illusion" that our brains construct. Elsewhere she clarifies that by "illusion" she doesn't mean to say that consciousness doesn't exist but only that it's not what we initially thought it was. Blackmore continues:

"Everything is one" claim mystics; "realising non-duality" is said to be the aim of Zen; "dropping the illusion of a separate self" is the outcome for many meditators. These claims, unlike paranormal ones, do not conflict with science, for the universe is indeed one, and the separate self is indeed an illusion.

See also ego death, egolessness, and extended cognition.

Dennett's description of the "Cartesian theater" can help us see the constructed nature of self-other distinctions. The Cartesian theater is the idea of "a crucial finish line or boundary somewhere in the brain" where formerly unconscious contents become conscious. The "lights turn on," so to speak. Neuroscientists may reject this explicitly. Yet many of them still talk as though the brain is conscious, while the external world is not. But in this case, the brain itself is "a crucial finish line or boundary" where formerly unconscious contents (e.g., photons or sound waves from the external environment) become conscious. How is this any different? Where in the environment-organism-environment feedback loop does consciousness "start"? It doesn't start anywhere; there's just a big loop of stuff happening. We call some parts more conscious than others because of constructions we're developing in our heads, not because of a discontinuity in the underlying physics of the world.

Case study: SimCity

In SimCity (2013), the player acts as the mayor of a city, working to build it and address needs as they arise. The game includes individual people, who move around using sidewalks, cars, and buses. The 2013 SimCity is an agent-driven simulation, so the dynamics of how the city evolves are complex and not computable from simple spreadsheet formulas, unlike in previous versions. That said, any given individual is relatively simple, perhaps not much more complicated than water molecules moving through the capillaries of a tree.