Summary

The perspective of machine ethics can be used to make a case for preference utilitarianism. Other ethical systems are probably very hard to formalize and much more likely to bring about intuitively bad consequences.

Preliminary Remarks

I originally wrote the following as part of a paper, in which I formalize preference utilitarianism using Bayesian inference. However, I took it out of the original paper to save some pages.

Due to its original format, the style of references differs from what is usual on this site. However, I have tried to insert hyperlinks to my sources, whenever they are available online. For full bibliographic information, you can always scroll down to the reference section.

Feel free to send me any kind of comments at my e-mail address!

Introduction

Artificial systems increasingly make morally relevant decisions. (Wallach and Allen 2009, pp.13-23) Many scientists even predict that an intelligence explosion is possible (Hutter 2012) and could take place sometime this century. (Schmidhuber 2012; Kurzweil 2005; Vinge 1993) This intelligence explosion is assumed to have an extremely high impact (Hawking et al. 2014; Yudkowsky 2008), and the scenario is so frightening that it is a common theme in literature, film, etc. Equipped with the “right” values, however, intelligent machines could solve many of mankind’s problems and positively reshape the ethical condition in our universe. (Muehlhauser 2012; Yudkowsky 2008; Hall 2011, pp.522-523; Dietrich 2011) But what are the right values? Most value systems are not formulated with sufficient precision to be applied in machine ethics. (McLaren 2011, p.297; Gips 2011, p.9f.) Also, a large part of machine ethics research has concentrated on negative results, for example that human values are too complex for simple formalizations. (Muehlhauser and Helm 2012)

In this article, however, I will argue that the perspective of machine ethics helps us to choose an ethical system. The need for formality forces us to discard many intuitions that make it difficult to develop a coherent system of ethics. And when we are not the agent that has to make the decisions, we do not reject ethical systems merely because they are difficult or uncomfortable to apply. It has been noted that through this change of perspective machine ethics can help the general field of ethics. (Gips 2011, p.9f.; Dennett 2006) As Anderson (2011, p.527) notes, “immoral behavior is immoral behavior, whether perpetrated by a machine or human being.” I therefore write “(machine) ethics” to express that I use the concept of machines as moral agents, but that the argument is valid for ethics in general, though it is not necessarily meant as practical advice to people on how to behave.

Specifically, I argue that consequentialist ethical systems with a continuous scale of moral standing rather than a classification are a good foundation for machine ethics. I then examine some options for defining the moral good and identify the fulfillment of preferences as the most plausible one.

Consequentialism

Ethical systems are usually divided into three classes: deontological, consequentialist (or teleological) and virtue ethics. For machine ethics, deontological ethical systems have often been considered. (Bringsjord et al. 2006) One reason appears to be that they seem relatively easy to apply, because the rules an agent should adhere to do not require deep calculation of outcomes. This also makes the behavior of such an agent predictable for humans and thus seemingly safer. (Agrawal 2010) Even Kant’s categorical imperative has been discussed in machine ethics. (Powers 2006; Tonkens 2009) Both rule-based and Kantian deontological approaches run into problems, and in the following I will try to point out the insurmountable danger of non-consequentialist ethics being applied by arbitrary intelligent systems.

Some typical ethical questions that separate consequentialist from non-consequentialist ethics are the following:

- Is it wrong in the same sense not to donate 3000 dollars to save one person as it is to kill a person?
- Is it wrong to donate inefficiently? When we donate 100 dollars and we can save either 0.0001 people or 10 people, do we have to choose the latter? Is it wrong to choose the first one?
- Are we obliged, or are we forbidden, to kill one person to save three?

In the non-consequentialist approaches to these questions, the following notions are commonly found (see for example Hill 2010, pp.161f.; Zimmerman 2010; Uniacke 2010; Williams 1973):

- There is a fundamental difference between direct and indirect consequences.
- There is a fundamental difference between consequences that one supports actively and consequences that one does not prevent and therefore supports passively (through inaction).
- An agent is mainly responsible for the intended consequences of its actions, and these constitute only a subset of the foreseen consequences.

Without these distinctions, there is no difference of ethical importance between, for example, killing somebody, (knowingly) causing somebody’s death and letting somebody die. Only consequentialism does not need this differentiation.

Formalizing deontological ethical systems

My first main argument against the applicability of non-consequentialist approaches in machine ethics is that these notions cannot be formalized universally and coherently, because even non-consequentialists define an action by its consequences: non-consequentialists do not argue that it is intrinsically bad to lift your arm and pull your forefinger. The action is considered bad if you have a gun in your hand and aim at a person, because the consequence of this action is that the person will be hurt or even die.

The distinction between “direct” and “indirect” consequences seems to be deeply rooted in a specific kind of (human) thinking that allows one to ignore some of these consequences. For example, we tend to neglect consequences that are far away in time, space or causal chains. But it is unclear how these non-consequentialist approaches could be implemented if the given AI does not think in, e.g., causal chains at all or uses them differently.
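To make the worry concrete, here is a minimal Python sketch under loudly stated assumptions: if “directness” were operationalized as the number of links in a causal chain (a hypothetical criterion, not one proposed in the cited literature), the verdict would depend on how finely the world model happens to be carved up. The event names, both graphs and the `DIRECT_THRESHOLD` value are made up for illustration.

```python
# Hypothetical illustration: "directness" measured as causal path length is model-relative.
from __future__ import annotations

from collections import deque


def hops(graph: dict[str, list[str]], start: str, goal: str) -> int | None:
    """Breadth-first search: number of causal links from start to goal, or None if unreachable."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        node, dist = queue.popleft()
        if node == goal:
            return dist
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None


# Coarse world model: pulling the trigger "directly" kills.
coarse = {"pull_trigger": ["victim_dies"]}

# Finer model of the very same physical events.
fine = {
    "pull_trigger": ["hammer_strikes"],
    "hammer_strikes": ["powder_ignites"],
    "powder_ignites": ["bullet_flies"],
    "bullet_flies": ["tissue_damaged"],
    "tissue_damaged": ["victim_dies"],
}

DIRECT_THRESHOLD = 1  # hypothetical rule: only 1-link consequences count as "direct"

for name, model in [("coarse", coarse), ("fine", fine)]:
    d = hops(model, "pull_trigger", "victim_dies")
    verdict = "direct" if d is not None and d <= DIRECT_THRESHOLD else "indirect"
    print(name, d, verdict)
```

The same physical event comes out “direct” in the coarse model and “indirect” in the fine one, although nothing about the consequences has changed.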

In Artificial Intelligence research, the inability to reasonably formalize non-consequentialist ethical systems is mirrored by the choice of consequentialist (world-state-based) utility and reward functions for agents. (Russell and Norvig 2010, pp.9,53) The agent itself may, however, assign values to pairs of actions and states (based on the value of the consequences of the action in that situation). (Russell and Norvig 2010, p.824) This illustrates the idea that deontological ethical systems are only simplified, practice-oriented versions of consequentialist ethical systems.
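The following sketch illustrates the point in the simplest possible form. It assumes a hypothetical utility function defined only over world states and a hypothetical transition model; the value of an action in a state is then just the expected utility of its consequences, a one-step simplification of the action-value idea rather than the full formulation in Russell and Norvig. All state names, probabilities and utilities are invented. Note how the very same movement (`pull_finger`) is bad only in the state where its consequences are bad, as in the gun example above.

```python
# Minimal sketch: action values derived from a utility function over world states.

# Hypothetical utility over world states (only outcomes are scored).
UTILITY = {"person_unharmed": 1.0, "person_hurt": -10.0}

# Hypothetical transition model: P(next_state | state, action).
TRANSITIONS = {
    ("holding_gun_aimed", "pull_finger"): {"person_hurt": 0.9, "person_unharmed": 0.1},
    ("holding_gun_aimed", "keep_still"): {"person_unharmed": 1.0},
    ("empty_hand", "pull_finger"): {"person_unharmed": 1.0},
}


def action_value(state: str, action: str) -> float:
    """Expected utility of the consequences of `action` taken in `state`."""
    outcomes = TRANSITIONS[(state, action)]
    return sum(p * UTILITY[s] for s, p in outcomes.items())


for (state, action) in TRANSITIONS:
    print(state, action, round(action_value(state, action), 2))
```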

I believe that the unclear, intuition-based boundary between “direct” and “indirect” actions is actually one reason why most people probably feel more comfortable with non-consequentialist imperatives. A deontological statement leaves so much open to interpretation that it is very hard to reject it outright. For example, consider the imperative not to kill. As a consequentialist, I may not reject this imperative but instead have a different understanding of killing than most other people. If, however, someone gave the imperative a well-defined, specific meaning, it would certainly no longer meet with universal agreement.

Ethical considerations

Even more important than the question of whether these non-consequentialist notions can be implemented or formalized is the question of whether it is good to implement them on a machine at all. I think the answer is clearly no, because for us only the consequences of the machine’s actions are morally interesting. This argument is a variation of a classic defense of consequentialism, namely that the victims do not care about the agent’s inner thoughts, its evolution towards “being good”, its possible qualms about pulling the trigger of a gun, its indifference about letting people die on another continent, etc. The only ones who could (intrinsically) care about these are the agents themselves. This argument is even stronger for an agent that we deliberately create to act morally, since all of us are potential victims, and it does not help us if an AI has a good will or behaves according to certain rules if this leads to suffering. A sufficiently powerful artificial intelligence is like a mechanism or a force of nature, and we do not care whether a thunderstorm has good intentions or behaves according to some rules as long as it does not harm us.

Even if one adopted a deontological position as the human engineer, that would not necessarily mean that one had to build machines that behave according to a deontological ethical system. Most deontological ethical systems may not help us decide which ethical system should govern the behavior of the machines we construct; they simply do not contain rules for this particular scenario. Therefore, even non-consequentialist engineers might agree that whether a potentially very powerful machine obeys some human-oriented rules is of little relevance compared to the consequences of building such an agent. Similarly, it is questionable whether creating a Kantian machine is actually Kantian. (Tonkens 2009) Consequentialist ethics do not seem to suffer from such value propagation problems.

So, our argument gained persuasive power from talking about machines that are built to improve the ethical situation. For human minds, consequentialism has several practical problems. Due to inherent selfishness, psychological effects such as alienation (Railton 1984) and the desire to sustain a good conscience might make it more satisfying to adhere to a good (by the standards of a consequentialist ethical system) rule-based system of ethics. In any case, I would never propose trying to act in a consequentialist way by computing all consequences of one’s actions, because this is impractical, even for a superintelligent robot. Humans’ deontology-like rules can be seen as simplifications of some deeper consequentialist ethical system that is very difficult to apply directly. These simplifications work most of the time, meaning that they usually help in doing good in the sense defined by the original ethical system. For example, the consequences of “killing directly” are, most of the time, not good in any workable consequentialist system of ethics and even more often too complicated for a human being to evaluate. Therefore, any sufficiently intelligent consequentialist agent will set up rules and intuitions that simplify decision-making, as it will be impossible in most cases to forecast all relevant consequences. (Compare Hooker 2010, pp. 451f.)

A continuous scale of moral standing

Our intuition tells us that there should at least be some difference in ethical value between the life of a human and, for example, the life of a single cell. Therefore, several discrete classifications with very few degrees of moral standing have been proposed, for example that only living creatures with the ability to suffer (Bentham 1823, ch. 17 note 122), personhood (Gruen 2012), free will, (self-)consciousness (Singer 1993, pp.101ff.) or the ability of moral judgment (Rawls 1971, pp.504-512) have moral standing. But these constraints suffer from several problems.

First of all, none of them has yet been defined rigorously. For example, the word “life” is probably very difficult to define universally (Emmeche 1997; Wolfram 2002, pp.823-825,1178-1180), and it is very unclear what consciousness is supposed to mean in a binary sense.

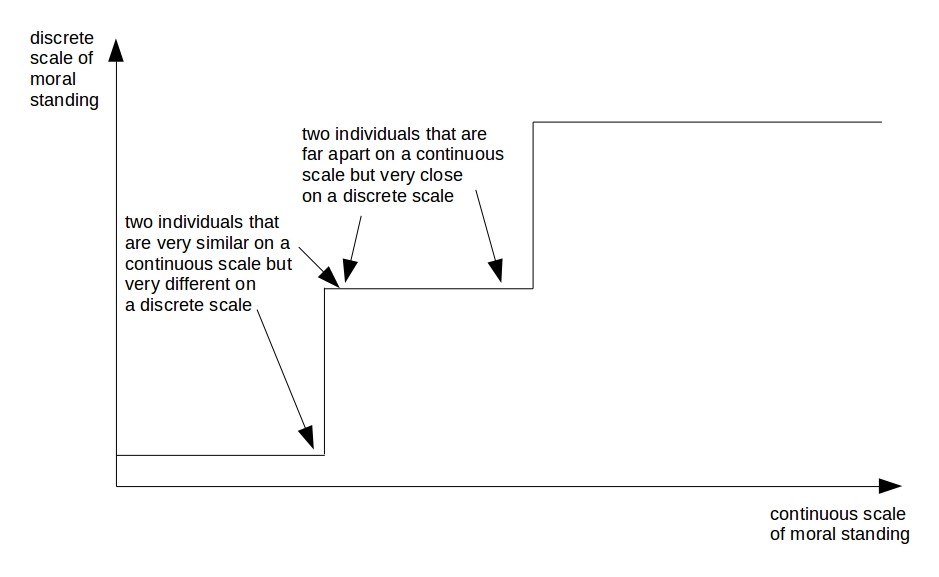

Also, such constraints always seem problematic whenever there is a relevant measure that is (more) continuous. For example, intelligence and complexity seem relevant for judging the moral standing of a creature. It then seems as if there were either some threshold on this continuous scale at which a sudden jump in ethical relevance takes place (see figure 1), or the moral standing is more or less independent of the continuous scale. In both cases, it is possible that two creatures with very different amounts of intelligence (or of whatever continuous measure one considers relevant) have the same moral value, whereas two very similar creatures (one below and one above the threshold) differ significantly in it.
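A toy comparison may help. The sketch below assumes, purely for illustration, a single continuous measure scaled to [0, 1], a hypothetical threshold of 0.5 and made-up scores for three creatures; the identity function merely stands in for any continuous, increasing assignment of moral standing.

```python
# Hypothetical comparison: threshold-based vs. continuous moral standing.


def standing_threshold(x: float, threshold: float = 0.5) -> float:
    """Binary classification: full standing at or above the threshold, none below."""
    return 1.0 if x >= threshold else 0.0


def standing_continuous(x: float) -> float:
    """Continuous alternative: standing grows smoothly with the underlying measure."""
    return x  # identity chosen only for simplicity


# Made-up scores on some continuous measure (e.g. intelligence), scaled to [0, 1].
creatures = {"A": 0.49, "B": 0.51, "C": 0.95}

for name, x in creatures.items():
    print(name, standing_threshold(x), standing_continuous(x))
```

Under the threshold rule, A and B differ maximally although their scores are almost identical, while B and C come out equal although their scores are far apart; the continuous version avoids both effects.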

So, thresholds and classifications for evaluating moral standing seem to be fraught with problems. Nevertheless, many of the proposed criteria do seem ethically relevant. A solution may be that seemingly binary concepts like consciousness, the ability to suffer, intentionality, free will and moral personhood have been argued to be rather continuous. (Hofstadter 2007, pp.9-24,51-54; Tomasik 2014b; Wolfram 2002, pp.750-753; Arneson 1998, p.5) So maybe all the proposed qualitative differences are in fact only quantitative ones.

Discarding consequentialist candidates

In the following I briefly discuss two different non-egoist consequentialist systems and argue that they are not suitable to be formalized and implemented on a machine.

Hedonism

Many ethical theories (for example the basic form of utilitarianism) are based on good and bad feelings or pleasure and pain. The imperative proposed by these ethical systems is to maximize overall pleasure and minimize overall pain. However, to me it seems impossible to define pleasure or pain for arbitrary agents without somehow basing it on a preferentist view. Because this is a common criticism of hedonistic ethical principles (Weijers n.d., 5c), I will not discuss it further.

Rule consequentialism?

Rule consequentialism is often conceived of as a distinct form of consequentialism. But it suffers from serious problems. If rule consequentialism dictated following the rules that have the best consequences when followed, then the only necessary rule would be act consequentialism or an alternative and potentially superior implementation of it (compare Gensler 1998, pp.147-152), because acting according to act consequentialism by definition has the best consequences. In this case, rule consequentialists and act consequentialists do not seem to disagree about normative ethics.
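The collapse argument can be checked mechanically in a toy setting. The sketch below assumes two hypothetical situations with made-up values for the consequences of two actions, enumerates every possible rule (one action per situation), and compares the rule with the best consequences to the policy of choosing the best action separately in each situation. It deliberately ignores the cost of computing consequences, which is exactly where the practical case for rules comes from.

```python
# Toy check: the rule with the best consequences coincides with act consequentialism.
from __future__ import annotations

from itertools import product

# Hypothetical values of the consequences of each action in each situation.
VALUES = {
    "lake": {"intervene": 10.0, "abstain": -10.0},
    "hospital": {"intervene": -50.0, "abstain": 0.0},
}
SITUATIONS = list(VALUES)
ACTIONS = ["intervene", "abstain"]


def total_value(rule: dict[str, str]) -> float:
    """Sum of the consequences when `rule` (situation -> action) is followed."""
    return sum(VALUES[s][rule[s]] for s in SITUATIONS)


# Every possible deterministic rule: one action per situation.
all_rules = [dict(zip(SITUATIONS, choice)) for choice in product(ACTIONS, repeat=len(SITUATIONS))]
best_rule = max(all_rules, key=total_value)

# Act consequentialism: pick the best action separately in each situation.
act_rule = {s: max(VALUES[s], key=VALUES[s].get) for s in SITUATIONS}

print(best_rule, act_rule, best_rule == act_rule)
```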

Of course, one could define another mechanism to dictate which rules to follow. For example, one could follow all rules that would have a positive impact if obeyed by all agents, or rules with a positive impact that are “easily applicable”, e.g. easily computable. Once the initial process of generating the set of rules to follow is over, however, one essentially obeys a deontological ethical system and acts towards suboptimal consequences. Therefore, rule consequentialism falls prey to the arguments presented in section 4.2 (“Ethical considerations”).

Also, rule consequentialism is not necessary to avoid computing all consequences of one’s actions: As mentioned above, an (act) consequentialist agent will set up such rules without rule consequentialism because computing all consequences of an action is impossible and therefore not a good way to bring about good consequences. (Hooker 2010, pp.451f.)

Preference utilitarianism

Preference utilitarianism is a common theory of ethics, and it seems relatively amenable to formalization. It is based on the conception of agents as objects acting towards some interests, preferences or desires. For example, one could think of ensuring their survival and reproduction or, more complexly, producing art, owning a house or having friends. The main imperative of preference utilitarianism is to fulfill these preferences. A direct consequence of a scale of unequal value (as introduced in section 5, “A continuous scale of moral standing”) is that having ethically relevant preferences is not binary: Some objects have stronger preferences than others. For example, humans have stronger preferences than mice, which in turn have stronger preferences than bacteria or “inanimate” objects, for which we often use the word purpose instead of preference. Good introductions to this specific panpsychist form of preference utilitarianism were given by Tomasik (2014a, 2014b).
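One simple way to write down graded preferences, offered only as an illustrative assumption and not as the formalization developed in the companion paper, is a weighted sum. Here w_i ≥ 0 is a hypothetical weight for the strength of object i’s preferences, and s_i(x), between 0 and 1, is the degree to which outcome x fulfills them:

```latex
V(x) = \sum_{i} w_i \, s_i(x), \qquad
x^{*} = \arg\max_{x} V(x), \qquad
w_{\text{human}} > w_{\text{mouse}} > w_{\text{bacterium}} > 0
```

Because the weights vary continuously, no threshold decides who counts; objects with weak preferences simply contribute less to V.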

An important advantage of preference utilitarianism in machine ethics

Usually, when we talk about an ethical system or principle, it is in practice rather irrelevant what would happen if everybody adhered to it precisely. Most principles are applied in consideration of other ones and leave some room for interpretation.

In machine ethics, and especially in Friendly Artificial Intelligence, the situation is certainly different. A given principle is interpreted in one precisely defined way by a possibly very powerful machine or group of machines. This usually leads to one typical kind of counter-argument against a system of ethics implemented on machines: “The strictly consistent application of the principle leads to X, and nobody wants that.” Typical examples for X are dictatorship, the realization of “evil” preferences, killing people for their organs to save others, or too much happiness and thus boredom.

In preference utilitarianism, all of these objections can be answered easily: If “nobody wants that”, then a preference utilitarian Friendly Artificial Intelligence will not put it into practice. If people do not want to be placed in a “perfect” world, it will not happen by means of a truly preference utilitarian agent. If people want to rule themselves, then the Friendly AI will let them, unless other preferences (having a good government) or the preferences of others (for example those of people from another country, children and non-human animals) are more important. If no one wants the preference utilitarian AI to take organs from single humans to help many others, or if society would be endangered by such behavior, then it will not operate this way. This last example is interesting: If people want the agent not to act in a consequentialist way, then preference utilitarianism could demand that it (seemingly) act according to deontological ethics.
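A minimal Python sketch of this mechanism, assuming hypothetical preference weights and made-up satisfaction scores for two candidate outcomes (none of these numbers come from the text): the agent simply picks the outcome with the highest weighted preference satisfaction.

```python
# Minimal sketch of a preference-utilitarian choice between candidate outcomes.

# Hypothetical preference strengths (weights); graded, not binary.
WEIGHTS = {"alice": 1.0, "bob": 1.0, "mouse": 0.1}

# Hypothetical satisfaction of each party's preferences (0 = frustrated, 1 = fulfilled).
OUTCOMES = {
    "impose_perfect_world": {"alice": 0.2, "bob": 0.1, "mouse": 0.9},
    "let_people_self_govern": {"alice": 0.8, "bob": 0.7, "mouse": 0.5},
}


def preference_value(outcome: str) -> float:
    """Weighted sum of preference satisfaction across all parties."""
    return sum(WEIGHTS[p] * sat for p, sat in OUTCOMES[outcome].items())


best = max(OUTCOMES, key=preference_value)
print(best)
```

No rule forbids imposing a “perfect” world here; that outcome loses only because it frustrates the parties’ preferences, which is the structure of the replies above.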

So, the trick in preference utilitarianism is to avoid the problem of the complexity of (human) values by defining what the value (or goal, aim etc., in our case preference) of a creature means in general, and that it is good to protect these values. This trick of defining preferences and goals in general rather than putting them into a system directly is commonly proposed in machine ethics, but mostly as a one-time process to learn ethics from humanity. (Yudkowsky 2004; Honarvar and Ghasem-Aghaee 2009; Muehlhauser and Helm 2012, pp.13-17; Wallach and Allen 2009, pp.99-115) The only problem left is that we somehow have to define, in a simple way, what the preferences of an object are. In Formalizing Preference Utilitarianism in Physical World Models, I attempt to introduce a framework that allows for exactly that.

Concluding Remarks

An alternative to this process of choosing an ethical system seems to be letting a machine make up or find an ethical system itself. This corresponds to the philosophical position of moral realism, which holds that there are moral facts that are true, and whose goal is to define, or rather actually find out, what in the world makes moral facts true. (Sayre-McCord 2011; Shafer-Landau 2003) Machine ethics’ goal would then be to implement a mechanism for deriving these moral facts. I do not believe in moral realism, simply because it is impossible to make up any kind of facts out of nothing. A similar problem occurred in the foundational crisis of mathematics: Many mathematical statements were simply assumed implicitly because they appeared to be undoubtedly true, and this caused problems. Therefore, different sets of axioms were assumed explicitly, for example Zermelo-Fraenkel set theory. Coupled with a logical system, these axioms were able to imply virtually all statements of classical mathematics. But whereas the more complicated theorems of mathematics cannot simply be assumed, axioms of Zermelo-Fraenkel set theory like the existence of the empty set ∅ seem to be necessary for any useful set theory and are therefore not really open to debate.

Similarly, ethics needs some basic assumptions. Instead of searching for something that somehow makes moral statements true, one could try to find axioms that define moral truth, similarly to the Zermelo-Fraenkel axiom system, in a way everybody can agree on, or at least informal principles that make it possible to discuss ethical questions on common ground. Many of our existing intuitions about ethics can help us here. At the same time, an ethical system should be precise, consistent, universal, impartial and not based on false assumptions. So, an artificial moral agent’s morality should not be based only on the subjective preferences of its developers.

Acknowledgements

I am grateful to Brian Tomasik for important comments and the opportunity to put this on his site. I also thank Alina Mendt, Duncan Murray, Henry Heinemann, Juliane Kraft and Nils Weller for reading and commenting on earlier versions of this text, when it was still part of a paper.

References

Agrawal, K. (2010): The Ethics of Robotics. http://arxiv.org/pdf/1012.5594v1.pdf

Anderson, S. L. (2011): How Machines Might Help Us Achieve Breakthroughs in Ethical Theory and Inspire Us to Behave Better. In: Machine Ethics. ed. Anderson, M., Anderson, S.L.. Cambridge: Cambridge University Press. pp.524-530.

Arneson, R. (1998): What, if anything, renders all humans morally equal? http://philosophyfaculty.ucsd.edu/faculty/rarneson/singer.pdf

Bentham, J. (1823): Introduction to the Principles of Morals and Legislation, 2nd. edn. http://www.econlib.org/library/Bentham/bnthPMLCover.html

Bringsjord, S., Arkoudas, K., and Bello, P. (2006): Toward a General Logicist Methodology for Engineering Ethically Correct Robots. In: Intelligent Systems, IEEE, Volume 21, Issue 4, pp. 38-44. http://kryten.mm.rpi.edu/bringsjord_inference_robot_ethics_preprint.pdf

Dennett, D. (2006): Computers as Prostheses for the Imagination. Talk at The International Computers and Philosophy Conference, Laval, France.

Emmeche, C. (1997): Defining Life, Explaining Emergence. http://www.nbi.dk/~emmeche/cePubl/97e.defLife.v3f.html

Gensler, H. (1998): Ethics. A contemporary introduction. London: Routledge.

Gips, J. (2011): Towards the Ethical Robot. In: Machine Ethics. ed. Anderson, M., Anderson, S.L.. Cambridge: Cambridge University Press. pp.244-253. http://www.cs.bc.edu/gips/EthicalRobot.pdf

Gruen, L. (2012): The Moral Status of Animals. In: The Stanford Encyclopedia of Philosophy (Winter 2012 Edition), Edward N. Zalta (ed.). http://plato.stanford.edu/archives/win2012/entries/moral-animal

Hall, J.S. (2011): Ethics for Self-Improving Machines. In: Machine Ethics. ed. Anderson, M., Anderson, S.L.. Cambridge: Cambridge University Press. pp.512-523.

Hawking, S., Tegmark, M., Russell, S., Wilczek, F. (2014): Transcending Complacency on Superintelligent Machines. http://www.huffingtonpost.com/stephen-hawking/artificial-intelligence_b_5174265.html

Hill, T. (2010): Kant. In: The Routledge Companion to Ethics. ed. John Skorupski. Abingdon: Routledge. pp.156-167

Hofstadter, D. (2007): I Am a Strange Loop. New York: Basic Books.

Honarvar, A.R., Ghasem-Aghaee, N. (2009): An Artificial Neural Network Approach for Creating an Ethical Artificial Agent. In: 2009 IEEE International Symposium Computational Intelligence in Robotics and Automation (CIRA) on 15-18 Dec. 2009, pp.290-295.

Hooker, B. (2010): Consequentialism. In: The Routledge Companion to Ethics. ed. John Skorupski. Abingdon: Routledge. pp.444-455.

Hutter, M. (2012): Can Intelligence Explode? http://www.hutter1.net/publ/singularity.pdf

Kurzweil, R. (2005): The Singularity Is Near. When Humans Transcend Biology. New York: Viking Books.

McLaren, B. (2011): Computational Models of Ethical Reasoning. Challenges, Initial Steps, and Future Directions. In: Machine Ethics. ed. Anderson, M., Anderson, S.L.. Cambridge: Cambridge University Press. pp.297-315. http://www.cs.cmu.edu/bmclaren/pubs/McLaren-CompModelsEthicalReasoning-MachEthics2011.pdf

Muehlhauser, L. (2012): Engineering Utopia. http://intelligenceexplosion.com/2012/engineering-utopia/

Muehlhauser, L., Helm, L. (2012): Intelligence Explosion and Machine Ethics. http://intelligence.org/files/IE-ME.pdf

Powers, T. (2006): Prospects for a Kantian Machine. In: Intelligent Systems, IEEE, Volume 21, Issue 4, pp. 46-51. http://udel.edu/tpowers/papers/Prospects2.pdf

Railton, P. (1984): Alienation, Consequentialism, and the Demands of Morality. In: Philosophy and Public Affairs 13 (2), pp.134-171. http://philosophyfaculty.ucsd.edu/faculty/rarneson/Courses/railtonalienationconsequentialism.pdf

Russell, S., Norvig, P. (2010): Artificial Intelligence. A modern approach. Third Edition. Upper Saddle River: Pearson.

Sayre-McCord, G. (2011): Moral Realism. From The Stanford Encyclopedia of Philosophy (Summer 2011 Edition). http://plato.stanford.edu/archives/sum2011/entries/moral-realism

Scanlon, T.M. (1998): What We Owe to Each Other. Cambridge, MA: Harvard University Press.

Schmidhuber, J. (2012): New Millennium AI and the Convergence of History. http://www.idsia.ch/juergen/newmillenniumai2012.pdf

Shafer-Landau, R. (2003): Moral Realism. A Defence. Oxford: Oxford University Press.

Singer, P. (1993): Practical Ethics. 2nd edn. Cambridge: Cambridge University Press.

Tomasik, B. (2014a): Hedonistic vs. Preference Utilitarianism. http://foundational-research.org/publications/hedonistic-vs-preference-utilitarianism/

Tomasik, B. (2014b): Do Video-Game Characters Matter Morally? http://www.utilitarian-essays.com/video-games.html

Tonkens, R. (2009): A Challenge for Machine Ethics. In: Minds & Machines (2009) 19, pp. 421–438.

Uniacke, S. (2010): Responsibility. Intention and Consequences. In: The Routledge Companion to Ethics. ed. John Skorupski. Abingdon: Routledge. pp.596-606.

Vinge, V. (1993): The Coming Technological Singularity. How to Survive in the Post-Human Era. http://groen.li/sites/default/files/the_coming_technological_singularity.pdf

Wallach, W., Allen, C. (2009): Moral machines. Teaching Robots Right from Wrong. Oxford: Oxford University Press.

Weijers, D. (n.d.): Hedonism. Retrieved from http://www.iep.utm.edu/hedonism/ on 16 May 2015.

Williams, B. (1973): A critique of utilitarianism. Taken from: Ethics. Essential Readings in Moral Theory. ed. George Sher (2012), Abingdon: Routledge. pp.253-261.

Wolfram, S. (2002): A New Kind of Science. https://www.wolframscience.com/nksonline/toc.html

Yudkowsky, E. (2004): Coherent Extrapolated Volition. http://intelligence.org/files/CEV.pdf

Yudkowsky, E. (2008): Artificial Intelligence as a Positive and Negative Factor in Global Risk. In Global Catastrophic Risks, edited by Nick Bostrom and Milan M. Ćirković, 308–345. New York: Oxford University Press. http://intelligence.org/files/AIPosNegFactor.pdf

Zimmerman, M. (2010): Responsibility. Act and omission. In: The Routledge Companion to Ethics. ed. John Skorupski. Abingdon: Routledge. pp.607-616.