Summary

The Fermi paradox—the puzzle of why we ostensibly haven't been visited by extraterrestrials—represents an important focal point for challenging and refining our beliefs about life in the universe. I review some of the main hypotheses to explain the Fermi paradox, evaluating their intrinsic plausibilities, how they're affected by anthropic updates, and how much impact we could make toward reducing suffering if they were true. The hypothesis of an early filter, such as that abiogenesis is very hard or that human-level intelligence rarely evolves, seems very intrinsically plausible and implies high altruistic import if true. It's anthropically disfavored by the more plausible anthropic views, but it's possible that the relative importance of this scenario remains high compared with other scenarios. We should maintain significant uncertainty, and the expected value of further study seems large.

Update, Feb. 2015: The final section of this piece contains an analysis based on Stuart Armstrong's Anthropic Decision Theory, which in my opinion provides the best answer to this question. I think it suggests that we should roughly act as if Earth is pretty rare but not extremely rare.

Contents

- Summary

- Introduction

- Scenarios should focus on space colonization and optimization

- Evaluating explanations of the Fermi paradox

- A filter lies before humans (Rare Earth)

- Most nascent ETs are killed; humans have not been

- Gamma rays killed life before now (neocatastrophism)

- Small universe

- Baby universes

- A filter lies ahead of humans (doomsday)

- Space-faring civilizations live only a short time

- ETs are too alien for us to recognize that they're already here

- Advanced superintelligences colonize "inner space" (transcension hypothesis)

- ETs prefer to keep the universe unoptimized

- ETs are hidden and watching us

- Something else?

- Early vs. late filters

- Relationship to the sign of human space colonization

- Conclusion

- Update, Feb. 2015: Doing away with anthropics

- Would aliens arise eventually?

- Footnotes

Introduction

Science fiction does a reasonably good job of focusing on some of the big non-fiction ideas of the universe that are relevant to reducing suffering. One of those big ideas is whether aliens exist and what this question has to say about ourselves. Extraterrestrials (ETs), if any, represent a sort of reference class for our own civilization and can help in calibrating our expectations about the future.

One of the most striking puzzles when thinking about ETs is the Fermi paradox: Since there appear to be so many Earth-like planets in our region of the universe where life could have arisen, why don't we see evidence of other civilizations? Why are we not members of a gigantic ET civilization rather than the apparently first technological life in our region of the cosmos?

It's often helpful to focus on paradoxes because they represent areas of our epistemology where we must be making at least one error and so are ripe grounds for changing our minds about potentially crucial considerations. The Fermi paradox in particular may affect what we think about the existence of ETs and humanity's own probability of becoming a galactic civilization.

Scenarios should focus on space colonization and optimization

There are many possible definitions for what might be considered alien life. For instance, do archaea-like organisms deep inside other planets count? Might self-replicating chemical reactions of a simpler sort count? From the perspective of reducing suffering, an important distinction seems to be whether there are ETs that have achieved technological civilization and are colonizing and optimizing their regions of the universe. This is because space colonization would probably produce astronomically more suffering than life on a single planet or small set of planets.

Moreover, space colonization appears to be feasible for a moderately advanced artificial general intelligence (AGI) using von Neumann probes. If technological ETs exist at all, it seems likely they would have colonized far and wide via probes rather than sticking around in a local region and communicating via radio signals.a Probes could probably travel at a nontrivial fraction of the speed of light, and combined with the billion-year lead times that some exoplanets had ahead of Earth, the Fermi paradox becomes a question of why our region of the universe hasn't been overtaken by colonizing probes. Katja Grace put it like this: "If anyone else had been in [a] position [to create colonizing AGI], our part of the universe would already be optimized, which it arguably doesn’t appear to be."

I'm nearly certain that SETI will never find radio signals from ETs, because it just doesn't make sense that ETs, if they existed at all, would remain at the technological level of present-day Earth rather than either going extinct or optimizing the galaxy and beyond. In general, human visions of ETs have been way too limited. Some thinkers historically proposed creating signals on Earth, such as a giant triangle, to show possible ETs on Mars or Venus that we exist. But within a few decades, it became possible for humans to determine whether Mars or Venus contained life without needing to look for signals. So unless the hypothetical Martians were at exactly the same level of technological development as Earthlings, the signaling would have been useless. A similar point probably applies, to a lesser extent, to present-day proposals to send signals to ETs. If there are ETs, either they're way too primitive to detect anything, or they're so advanced that they don't need our signals, for instance because they have hidden Bracewell probes nearby. As Nick Bostrom has said, the middle ground where a civilization is comparably intelligent to humans is not a stable state and should quickly yield either superintelligence or extinction.

Evaluating explanations of the Fermi paradox

There seem to be three general ways to answer the Fermi paradox:

- It's unlikely for ETs to evolve and develop spacefaring ability. There can be many reasons for this, but the whole collection of factors that might preclude the ability for galactic colonization has been called the Great Filter.

- ETs have spacefaring ability but don't exercise it.

- ETs have exercised spacefaring ability to our region of the universe, but they remain hidden.

Wikipedia's article on the Fermi paradox lists many possible explanations. In the following I'll review some of these hypotheses and evaluate how they score on three dimensions:

- Are they intrinsically plausible? That is, do they make sense in light of what we know about physics, biology, sociology, etc.? Are they non-conjunctive?

- Are they anthropically plausible? That is, are they consistent with prevailing theories of anthropic reasoning? The theories I'll consider are SSA (the Self-Sampling Assumption), SSA+SIA (SSA combined with the Self-Indication Assumption), and PSA. SSA+SIA and PSA mostly agree for the scenarios described here. I personally think regular SSA is pretty clearly wrong, but it's also the most standard anthropic approach. Specifically, the version of SSA I consider uses a reference class that includes both evolved creatures and post-biological minds.

- How much impact could our efforts make to reduce suffering if the hypothesis were true? We should focus our altruistic efforts on hypotheses where our impact is big, so in most cases, we can "act as if" hypotheses that imply bigger impact are true.

In theory, the relevance of a hypothesis to our actions is roughly the product of the three factors above. In practice, there's enough model uncertainty, including about anthropic theories, that we also need to maintain ample modesty in such calculations.

A filter lies before humans (Rare Earth)

Intrinsic plausibility: high

This hypothesis suggests that most of the barrier to space colonization comes from some stage of development prior to human-like intelligence, such as planets having the right conditions for life, getting life off the ground, evolution of complex organisms, and evolution of technologically adept organisms. The Rare Earth hypothesis paints a picture of this type. A view like this follows naturally from what we know about the world. Creationists and intelligent-design proponents like to emphasize how hard it seems to be for life to get started and to evolve exquisitely complex structures like neurons and hearts. Even though such arguments are biased, it remains pretty astonishing that life arose from chemicals and developed seemingly irreducibly complex structures.

Anthropic plausibility: SSA: high, SSA+SIA or PSA: low

Absent anthropic considerations, I would probably find the Rare Earth explanation to be by far the most probable. However, certain kinds of anthropic reasoning can make it somewhat less likely. In particular, consider either the SSA+SIA or PSA approaches to anthropics. These favor hypotheses with more copies of your experiences. If life were easier to get started, there would be more copies of minds like yours, so we should update somewhat away from Rare Earth views even if the non-anthropic evidence favors them. This anthropic penalty might be small or might be many orders of magnitude.

Regular SSA doesn't have a problem with Rare Earth, since although there aren't many copies of you, there also aren't many other organisms. The probability that a random pre-colonization mind would be you is the same whether ETs are rare or common. However, SSA with a non-gerrymandered reference class also implies the doomsday argument, which means that a colonizing future is exceedingly unlikely to happen. Thus, our ability to reduce future suffering resulting from such colonization is exceedingly small. Hence, we should mostly ignore the (Rare Earth + SSA) branch of possibilities on prudential grounds.
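To make the contrast between the two anthropic views concrete, here is a toy Python sketch. The priors and observer counts are hypothetical numbers chosen only for illustration. It assumes, as the text does, that under SSA the share of the reference class that is "like us" is the same whether life is rare or common, while SSA+SIA weights each hypothesis by the absolute number of minds like ours. PSA is not modeled here.

```python
def normalize(weights):
    """Rescale nonnegative weights so they sum to 1."""
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}

def ssa_posterior(priors, share_like_us):
    # SSA: weight each hypothesis by the fraction of its reference class
    # (here, pre-colonization minds) whose experiences match ours.
    return normalize({h: priors[h] * share_like_us[h] for h in priors})

def ssa_sia_posterior(priors, count_like_us):
    # SSA+SIA: weight each hypothesis by the absolute number of minds like ours.
    return normalize({h: priors[h] * count_like_us[h] for h in priors})

# Hypothetical numbers, for illustration only.
priors = {"RareEarth": 0.9, "CommonLife": 0.1}
# Assumption: every civilization has the same share of minds like ours,
# so that share is equal under both hypotheses.
share_like_us = {"RareEarth": 1e-10, "CommonLife": 1e-10}
# Assumption: CommonLife has a million times as many civilizations like ours.
count_like_us = {"RareEarth": 1.0, "CommonLife": 1e6}

ssa = ssa_posterior(priors, share_like_us)
ssa_sia = ssa_sia_posterior(priors, count_like_us)
print(ssa)      # prior unchanged: RareEarth stays near 0.9
print(ssa_sia)  # RareEarth drops below 1e-5
```

The magnitude of the SSA+SIA penalty is set entirely by the assumed ratio of observer counts, which is why the text says the penalty "might be small or might be many orders of magnitude".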

Our impact if true: high

As Rob Wiblin has observed, if Rare Earth is true, we may actually have much bigger counterfactual impact than otherwise. If ETs are common, then if humans don't colonize, someone else might. In contrast, if ETs are rare, then whether humans colonize space could be the difference between an empty, mostly suffering-free universe versus a colonized, suffering-dense universe. Hence, suffering-reduction efforts would make more difference in Rare Earth scenarios.

On the flip side, if there are ETs and if they're less compassionate than Earth-based intelligence would be, then once we come into contact with them, we might be able to make a big impact by stopping them—hopefully through diplomacy but maybe also through force—from causing suffering. This suggests that we could also have some impact in scenarios where other ETs do exist.

Most nascent ETs are killed; humans have not been

Intrinsic plausibility: low

According to this hypothesis, life is reasonably common in the universe, but the first colonizing civilization uses berserker probes to kill life that begins on other planets before it reaches the human level. This would yield a Rare Earth kind of effect without it being intrinsically difficult for life to begin. Of course, the question remains why Earth hasn't been destroyed. Presumably it's because Earth happens to be far away from the berserkers—in one of the rare regions of the universe where life is actually sparse rather than dense.

Anthropic plausibility: SSA: low, SSA+SIA or PSA: low

SSA doesn't like this hypothesis if the berserker civilization has lots of observers, because SSA says we should have been one of them rather than the exceptional evolved civilization that wasn't preempted by berserkers. SSA+SIA and PSA also don't like the way this hypothesis predicts that there are only a few human-level evolved creatures like us.

Our impact if true: medium

If the hypothesis were true, we might have significant possibility for impact if humans develop advanced AGI before the berserkers arrive. Even if so, it's not clear whether human-built AGI or the original civilization would win the ensuing battle, which could be ugly.

Thus, there does seem reasonable potential for impact in this scenario, but like Rare Earth, it's anthropically disfavored. Moreover, it seems intrinsically less probable than the supposition that life is just hard to get started, since it requires a conjunction of many speculations together (ETs, berserkers, etc.).

Gamma rays killed life before now (neocatastrophism)

Intrinsic plausibility: medium

"An Astrophysical Explanation for the Great Silence" suggests that gamma-ray bursts tend to sterilize life periodically, before it can colonize space. Hence, all planets with life were "reset" at the time of the last burst, and now organisms on possibly many planets are evolving toward colonization.

Anthropic plausibility: SSA: high, SSA+SIA or PSA: low

SSA likes its doomsday implications, as long as colonization doesn't end up succeeding. SSA+SIA and PSA don't like the fact that gamma sterilization makes human-like intelligent organisms relatively rare.

Our impact if true: medium

If there are many civilizations competing to colonize, humans would in expectation control only a portion of the cosmic commons. If humans are first, then our impact might be greater.

Small universe

Robin Hanson suggests an example of how wacky physics could help:

Another possibility is that the universe is very much smaller than it looks, perhaps because of some non-trivial topology, so that our past light cone contains much less than it seems.

Intrinsic plausibility: low

I'm not aware of independent reasons for suspecting this possibility.

Anthropic plausibility: SSA: high, SSA+SIA: low, PSA: high

SSA and PSA are happy to have a high density of minds like ours. SSA+SIA wants a high absolute number of minds like ours and so demands a huge universe.

Our impact if true: low

A small universe means there's less suffering to be prevented.

Baby universes

Robin Hanson mentions another weird-physics scenario:

[Possibly] it is relatively easy to create local "baby universes" with unlimited mass and negentro[p]y, and that the process for doing this very consistently prevents ordinary space colonists from escaping the area, perhaps via a local supernovae-scale explosion.

Intrinsic plausibility: low

I'm not aware of independent reasons for suspecting this possibility.

Anthropic plausibility: SSA: low, SSA+SIA or PSA: high

SSA dislikes the way this hypothesis allows for vast numbers of post-human minds to exist. SSA+SIA and PSA appreciate how the hypothesis allows human-level intelligence to be common on planets.

Our impact if true: high

If baby universes are possible, humanity might produce immense numbers of minds (some of which would suffer), which implies enormous potential impact for our suffering-reduction efforts.

A filter lies ahead of humans (doomsday)

Intrinsic plausibility: low/medium

Anthropic plausibility: SSA: high, SSA+SIA or PSA: high

Our impact if true: low

Ignoring Fermi and anthropic considerations, I don't find this filter particularly plausible because humanity seems pretty robust, and there aren't obvious barriers against space colonization by digital minds. As Robin Hanson observes, we understand present-day social science better than we understand Earth's biology of billions of years ago, so most of the fudge room to increase our filter estimates would seem to be in pre-human steps. Even a pessimistic estimate of the probability that humans will survive and colonize space might be something like 10%, while I think the probability of, say, abiogenesis alone may be orders of magnitude smaller, especially given that we don't yet fully understand how life started. In addition, the evolution of human-like intelligence seems fairly contingent. Had the dinosaurs not gone extinct, for instance, plausibly no technological civilization would have emerged on Earth. Dolphins are smart but can't write books or build cities.

Systemic late filters cannot take the form of random natural events like asteroids or supervolcanoes, because these should operate roughly independently from planet to planet, meaning it wouldn't make sense for all technological civilizations to be destroyed by them. (Or, on planets where they do happen often enough to surely extinguish a civilization, they should also extinguish pre-civilization life and hence count as early filters.) Moreover, late filters cannot take the form of rogue AGI, because this should in general colonize space. Late filters would have to be non-AGI civilization-caused disasters, perhaps due to some massively destructive technology that necessarily precedes AGI.

Alternatively, if there is some reason why colonization probes are impossible in principle, this would be a stronger explanation of the Fermi paradox than a prediction that intelligent civilizations generally tend to destroy themselves. The impossibility of space probes would apply to all civilizations at once, while technological self-destruction should operate somewhat independently from planet to planet, so that, e.g., even if the probability of survival were 1%, out of 100 such planets we should expect one of them to have colonized. That said, I'm not aware of any plausible arguments why colonization should be off limits to advanced AGI. Moreover, if colonization is off limits, then there will be less suffering to be reduced in the far future, so we can mostly ignore this possibility on prudential grounds.
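The contrast between independent self-destruction and a universal barrier can be checked with a few lines of Python. This is a sketch assuming each civilization survives to colonize independently and with the same probability; the function names are illustrative.

```python
def expected_colonizers(p_survive, n_planets):
    """Expected number of civilizations that survive to colonize."""
    return p_survive * n_planets

def prob_at_least_one(p_survive, n_planets):
    """Chance that at least one of n independent civilizations colonizes."""
    # If each survives independently with probability p, none survive
    # with probability (1 - p) ** n.
    return 1.0 - (1.0 - p_survive) ** n_planets

# The text's example: 1% survival across 100 technological civilizations.
for n in (1, 10, 100, 1000):
    print(n, expected_colonizers(0.01, n), round(prob_at_least_one(0.01, n), 3))

# A universal barrier (colonization impossible for everyone) behaves
# differently: with p = 0, no number of planets yields a colonizer.
print(prob_at_least_one(0.0, 10**13))
```

With 100 planets the expected number of colonizers is 1 and the chance that at least one succeeds is about 63%, so independent failures still leave room for someone to colonize, while an in-principle impossibility does not.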

SSA+SIA and PSA predict that the Great Filter mostly lies in our future, and if that's true, then our altruistic efforts make comparatively little difference. SSA also likes a big future filter.

Space-faring civilizations live only a short time

Intrinsic plausibility: low

This hypothesis looks unlikely to me. It seems to extrapolate historical trends of species extinction to the future without consideration of how the future would be different. For one thing, computer minds can resist evolution. They can be fully programmed to want to obey orders, can be surveilled to arbitrary precision, and can employ digital error correction to avoid "mutations". These facts make it plausible that the future of intelligence within a single civilization will be unified and coordinated, avoiding the kinds of warfare and technological self-destruction that befall humans.

In any case, even if the trend of evolution is for species to go extinct, there's also a trend for some life to survive and spread to fill all available niches. The galaxy is a big niche that no one has yet filled. Moreover, once colonization began, it would be harder for a civilization to self-destruct because members of the civilization would be widely distributed. Presumably the civilization would only be fully destroyed by a major galaxy-wide natural disaster or by intentional berserker probes. We don't observe signs of either of these.

Anthropic plausibility: SSA: high, SSA+SIA or PSA: high

Our impact if true: low

An anthropic analysis of this hypothesis is similar to that for a filter after humans. SSA is fine with such a filter, but SSA implies a doomsday update. SSA+SIA and PSA favor a late filter like this, but that also implies doom soon. Assuming that the future filter applies just as strongly to ourselves as to others, these considerations suggest less total impact for our altruistic efforts. Unless for some reason we think our position is uniquely immune to such a filter, the scenario that spacefaring civilizations soon go extinct doesn't seem to be one of maximal impact.

ETs are too alien for us to recognize that they're already here

Intrinsic plausibility: medium

Anthropic plausibility: SSA: low, SSA+SIA or PSA: high

Our impact if true: scenario is not very relevant to impact

This hypothesis doesn't seem out of the question, and indeed, depending how one defines "aliens", it may be trivially true that aliens are already here. For instance, if photons and neutrinos count, then aliens have indeed spread throughout the cosmos. But in this essay I'm focused on the kind of alien life that optimizes matter for colonization and computation, and we can see that our region of the universe is not optimized.

Advanced superintelligences colonize "inner space" (transcension hypothesis)

Intrinsic plausibility: low

John M. Smart's Transcension Hypothesis suggests that advanced intelligences may cluster closer together into black-hole-like regions for greater efficiency.

This may be, but it doesn't explain why they'd leave on the table perfectly good stars and planets elsewhere. For a superintelligent civilization, the cost of building probes to utilize these resources would be trivial and would pay itself back astronomically by the gains from such colonization—even if the gains from harnessing regular stars via Dyson swarms comprised, say, just 0.0001% of the civilization's total resources. As Hanson says:

even if the most valuable resources are between the stars or at galactic centers, we expect some of our descendants to make use of most all the matter and energy resources they can economically reach, including those in "backwater" solar systems like ours and those near us.

So the Transcension Hypothesis doesn't explain why the rest of the universe is unoptimized, unless the ETs want it that way, which I'll cover in the next section.

In addition, even transcendent ETs may worry about competitors trying to steal their black holes. They would thus have motive to quash fledgling civilizations like ours or at least build strong defensive measures, although potentially such defenses could be hidden.

Anthropic plausibility: SSA: low, SSA+SIA or PSA: high

SSA doesn't like the big technological civilization predicted by this hypothesis. SSA+SIA and PSA are happy with this hypothesis if it allows for the existence of many human-level civilizations, some of which become transcendent.

Our impact if true: medium

If ETs really aren't taking over the whole cosmos, there may be room for humans to expand, increasing consciousness and suffering. So we can make some impact in such a scenario. Of course, humans wouldn't have all resources of the cosmos to themselves.

ETs prefer to keep the universe unoptimized

Intrinsic plausibility: low

Evolutionary pressure on Earth implies that those organisms with most desire and capability to spread themselves will become numerically dominant. Likewise, if ETs are common, we should expect that even if most ET civilizations don't care about spreading, the few that do will come to dominate the cosmos and should reach Earth. A hypothesis that ETs want to preserve the universe untouched in a manner analogous to environmental conservation thus seems unlikely, since it requires that all ETs share this desire. Alternatively, maybe the first ET civilization subscribed to "deep cosmology" (analogous to "deep ecology"), didn't want the beauty of the cosmos tarnished, and built invisible probes set to pounce on any other civilization bent on destructive space colonization. Needless to say, this seems conjunctive and far-fetched. We don't observe many deep-cosmological sentiments today, so the presumption that all advanced civilizations will share this axiology seems implausible.

Anthropic plausibility: SSA: high, SSA+SIA or PSA: medium

SSA appreciates the fact that even though this scenario contains at least one advanced ET civilization, the total number of post-biological observers is small because most stars and planets are kept in their pristine states rather than harvested to produce computational resources.

SSA+SIA and PSA appreciate that this hypothesis allows many ETs to reach Earth-like intelligence before forswearing colonization or getting killed by the deep cosmologists. Getting killed by deep cosmologists is a special case of a late filter.

Our impact if true: low

Like other late-filter cases, these scenarios imply that our efforts have low expected impact because either humanity won't colonize much, or else humanity will be quashed if it attempts to colonize space to "spread its iniquity elsewhere".

ETs are hidden and watching us

Intrinsic plausibility: low

This might take the form of the zoo hypothesis (ETs are hiding and avoiding making contact) or the planetarium hypothesis (ETs are falsifying our cosmological data to make us think we're alone). ETs might send imperceptible nanobots to Earth to monitor our actions and transmit information to some hidden data-collection probe.

Motivations for silence might include

- avoiding provocation of an attack by humans against ETs

- maintaining an ethical injunction like the Prime Directive against interference

- wanting to study humans in their natural habitat for scientific or military reasons.

I find the first motivation implausible because any civilization advanced enough to colonize space is probably advanced enough to avoid attack by humans and even to cause human extinction. The second is possible but seems overly anthropomorphic; is it really plausible that most aliens would converge on a value like this? The third seems most sensible. The data provided by humans in their natural habitat might indeed be valuable, analogous to genes of rare plants in the rainforest, and once destroyed it might be impossible to recover. On the other hand, in order to collect these data, ETs would have to refrain from colonizing a good chunk of the visible universe, thereby forgoing astronomical amounts of computing power. While ETs might be harnessing energy from some stars in ways that are hard for humans to detect, there are clearly lots of stars and planets that aren't being exploited. This opportunity cost seems to far exceed whatever value studying humans would provide to the ETs.

Anthropic plausibility: SSA: low, SSA+SIA or PSA: high

SSA doesn't like these hypotheses because they entail many minds that aren't evolved technological creatures on their native planets. SSA+SIA and PSA like the fact that these hypotheses allow for life to be relatively common.

Our impact if true: low

If these hypotheses are true, our altruistic impact is limited, because there's already at least one other advanced ET civilization that is either hostile or, if friendly, would force us to maintain the Prime Directive. In either case, humans wouldn't exert astronomical counterfactual impact on the direction of the cosmos.

Something else?

There are probably many hypotheses not imagined yet.

Early vs. late filters

Ignoring anthropic considerations, I expect early (pre-human) filters to be orders of magnitude stronger than late filters (between humans and galactic colonization). There are many more pre-human than post-human steps, and the difficulty of generating life from no life or creating specialized organs seems much greater than the remaining challenge of building AGI and replicating spacecraft.

Here are some sample numbers. Say the probability that humans colonize space from where we are now is 10% (which seems low, but maybe it should be lowered in view of the Great Filter). Out of ~200 billion stars in the Milky Way, there appear to be about "11 billion potentially habitable Earth-sized planets" using a conservative definition. The Virgo Supercluster contains 200 trillion stars, or 1000 times the number in the Milky Way. So let's assume 11 trillion potentially habitable Earth-sized planets in our neighborhood. Of course, ETs might have been able to reach us from beyond the Virgo Supercluster (which is only 110 million light-years in diameter), but how much farther away they could have originated isn't clear.b If the ETs developed, say, 1 billion years before us and traveled at 0.1 times the speed of light, they couldn't have started much beyond the Virgo Supercluster.
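The two back-of-the-envelope estimates in this paragraph can be written out as follows. This is a sketch that, as an added simplification, ignores cosmic expansion and the time needed to build and launch probes.

```latex
\begin{aligned}
N &\approx \underbrace{11\times10^{9}}_{\text{Milky Way}} \times \underbrace{1000}_{\text{Virgo/Milky Way stars}} = 1.1\times10^{13}\ \text{planets},\\
d_{\max} &\approx v\,t = (0.1\,c)\,(10^{9}\ \text{yr}) = 10^{8}\ \text{ly} = 100\ \text{Mly}.
\end{aligned}
```

A reach of about 100 million light-years is on the order of the Virgo Supercluster's 110-million-light-year diameter, which is why ETs with this head start and speed couldn't have started much beyond it.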

11 trillion potentially habitable Earth-sized planets within a reachable distance means the filter strength should be at least ~1/(11 trillion), or roughly 10^-13. A 10% filter for colonization relative to where we are now implies at most a 10^-12 chance that a given Earth-sized exoplanet develops a technological civilization. These numbers sound reasonable to me.
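The arithmetic in the last two paragraphs can be reproduced with the sketch below. It assumes, as the text does, that the total filter per planet factors into a pre-human part and a post-now part, and that it must be small enough that we expect at most about one colonizer among the reachable planets. The constant names are illustrative.

```python
MILKY_WAY_HABITABLE = 11e9      # "11 billion potentially habitable Earth-sized planets"
VIRGO_TO_MILKY_WAY_STARS = 1000  # 200 trillion stars vs. ~200 billion
P_COLONIZE_FROM_NOW = 0.1        # assumed 10% chance from where we are now

n_planets = MILKY_WAY_HABITABLE * VIRGO_TO_MILKY_WAY_STARS  # about 1.1e13

# Expecting at most ~1 colonizer means the per-planet probability of
# passing the whole filter is at most ~1 / n_planets (roughly 1e-13).
max_total_filter = 1.0 / n_planets

# Total filter = (pre-human filter) * (post-now filter), so the pre-human
# part is at most max_total_filter / P_COLONIZE_FROM_NOW (roughly 1e-12).
max_prehuman_filter = max_total_filter / P_COLONIZE_FROM_NOW

print(f"planets: {n_planets:.2e}")
print(f"max total filter: {max_total_filter:.1e}")
print(f"max pre-human filter: {max_prehuman_filter:.1e}")
```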

However, as noted previously, SSA+SIA or PSA anthropics favor late filters because weaker pre-human filters allow more human-level minds to exist, making our own existence more likely. What does the epistemic situation look like after updating on anthropics?

Let's consider two hypotheses:

- EarlyFilter: pre-human filter = 10^-12, after-now filter = 0.1.

- LateFilter: galactic space colonization is impossible for some unknown reason. Hence, the pre-human filter can be any reasonable value (though still low enough to account for the absence of human-like life on the other planets and moons of the solar system); say it's 10^-4.

Ignoring anthropics, I'd rate LateFilter as having a probability of, say, 10^-3 because we don't have plausible candidate explanations for why space colonization should be impossible, and if it's not impossible, it seems that someone or other should succeed at it even if most technological civilizations fail.

But now considering anthropics, we see that LateFilter allows for (10^-4)/(10^-12) = 10^8 times the chance that we would exist because the filter against our having come into existence is 8 orders of magnitude smaller. Even in the face of a 10^-3 prior bias against LateFilter, the hypothesis ends up 10^5 times more probable after the anthropic update. This is the "SIA doomsday argument". Note that unlike the SSA doomsday argument, this doomsday argument doesn't scale linearly with the possible number of minds in our future but instead derives its magnitude from Great Filter probabilities. A 10^5-fold greater probability of doom than non-doom is less dramatic than the SSA doomsday argument though still quite substantial.
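The anthropic update above is Bayes' rule in odds form. The following sketch reproduces it under the text's assumptions: under SSA+SIA the probability of our existing is proportional to the pre-human filter, and the non-anthropic prior probability of LateFilter is about 10^-3.

```python
import math

def posterior_odds(prior_odds, likelihood_ratio):
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio

# Pre-human filter under each hypothesis, as given in the text.
PREHUMAN_EARLY = 1e-12  # EarlyFilter
PREHUMAN_LATE = 1e-4    # LateFilter

# Assumption (SSA+SIA): the chance that beings like us exist is proportional
# to the pre-human filter, so the likelihood ratio is the ratio of filters.
likelihood_ratio = PREHUMAN_LATE / PREHUMAN_EARLY  # about 1e8

# Prior P(LateFilter) = 1e-3, converted to odds against EarlyFilter.
p_prior_late = 1e-3
prior_odds = p_prior_late / (1 - p_prior_late)  # about 1e-3

odds = posterior_odds(prior_odds, likelihood_ratio)  # about 1e5
p_late = odds / (1 + odds)  # posterior P(LateFilter), about 0.99999

print(f"likelihood ratio ~ 10^{round(math.log10(likelihood_ratio))}")
print(f"posterior odds   ~ 10^{round(math.log10(odds))}")
print(f"P(LateFilter)    = {p_late:.5f}")
```

Because the update multiplies odds, the result depends only on how many orders of magnitude separate the two pre-human filters and on the prior, not on how many minds might exist in the future.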

Of course, when considering our potential impact, it seems we should still act as if EarlyFilter is true, since our actions can prevent much more than 10^5 times as much expected suffering if EarlyFilter is correct, given the difference in size between populations on Earth versus populations resulting from widespread colonization.
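The "act as if" reasoning is an expected-value comparison: probability times stakes. In this sketch the stakes are hypothetical placeholders in arbitrary units. The factor of 10^10 between colonization-scale and Earth-bound populations is an illustrative assumption, not a number from the text.

```python
# Posterior odds of LateFilter vs. EarlyFilter after the anthropic update.
POSTERIOR_ODDS_LATE = 1e5
p_late = POSTERIOR_ODDS_LATE / (1 + POSTERIOR_ODDS_LATE)
p_early = 1 / (1 + POSTERIOR_ODDS_LATE)  # about 1e-5

def act_as_if(p_early, p_late, stakes_early, stakes_late):
    """Return the hypothesis under which our actions matter most in expectation."""
    ev_early = p_early * stakes_early
    ev_late = p_late * stakes_late
    return "EarlyFilter" if ev_early > ev_late else "LateFilter"

# Hypothetical stakes, in arbitrary units of preventable suffering.
STAKES_IF_LATE = 1.0    # Earth-bound populations only
STAKES_IF_EARLY = 1e10  # assumed scale of colonization-level populations

# Acting as if EarlyFilter pays off whenever the stakes ratio exceeds the odds.
break_even_ratio = p_late / p_early  # equals POSTERIOR_ODDS_LATE

print(act_as_if(p_early, p_late, STAKES_IF_EARLY, STAKES_IF_LATE))  # EarlyFilter
print(act_as_if(p_early, p_late, 1e4, STAKES_IF_LATE))              # LateFilter
```

The second call shows the flip side: if colonization multiplied the stakes by less than the posterior odds (here 10^5), the same calculation would recommend acting as if LateFilter were true.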

In other words, we should epistemically believe the world will end but should act as if it won't. Of course, these conclusions only apply on the assumptions of the Great Filter and SSA+SIA or PSA anthropic frameworks, so obviously model uncertainty should dramatically temper conclusions like these.

Relationship to the sign of human space colonization

In "Risks of Astronomical Future Suffering", I argue that colonization of space by humanity is more likely to increase total suffering than to decrease it. While I think this may be true whether or not ETs exist, its degree may depend on which answer to the Fermi paradox is correct.

If humans are exceptional, and no other civilization would colonize the galaxy in their place, then human space colonization is most clearly negative, because colonization would turn otherwise dead matter into consciousness, some of which would suffer terribly in expectation.

In contrast, if there are ETs that would colonize in our place, they might convert energy and resources into computation even if humans don't. This means human space colonization would yield less of a counterfactual increase in total computation. In addition, human colonization might be more humane than that of a random alien civilization, which would be good, although human colonization might also contain more of what we recognize as sentience, which would be bad. In addition, if humans are not alone, there's potential for nasty conflict between humans and ETs, which could also be bad.

Conclusion

The overwhelmingly most straightforward explanation of the Fermi paradox is that life is rare, and if this is true, our efforts can have significant impact. The Rare Earth explanation is the only one in the list above that scored green on at least 2 out of 3 dimensions. It's unclear how severe the anthropic penalty on this hypothesis is, but given that anthropic reasoning is itself wildly uncertain, this may not be a devastating problem. For instance, SSA with a reference class narrow enough to avoid the doomsday argument doesn't have a problem with Rare Earth and yet retains the possibility for high altruistic impact on the far future.

Bostrom himself finds a Rare Earth view most likely. He suspects that many people who think intelligent life is easy to evolve may be unwittingly forgetting the observation-selection fact that if we're asking this question, intelligent life must have evolved on Earth, but this says nothing about how common life is in general. However, as I noted in this piece, there is an anthropic case against Rare Earth because it's less likely we would exist at all if that were true.

Hanson's "Great Filter" paper also seems to come down largely in favor of a Rare Earth view: "we can find a number of plausible candidates for groups of hard trial-and-error biological steps". However, Hanson wrote this before discussions of SIA even existed, and upon learning of the SIA argument, his views shifted somewhat toward future filters.

Personally, I think the specific filter step that's probably hardest to overcome is abiogenesis. Other planets and even comets have organic compounds, so steps prior to abiogenesis seem easy. And we saw that once life started on Earth, it progressed pretty rapidly relative to the total lifetime of Earth, which weakly argues against subsequent evolutionary steps being extremely difficult. Of course, abiogenesis also happened relatively rapidly, so why do I think that step is hard? I suspect the answer is that if conditions are right, then abiogenesis becomes relatively easy and can happen soon, while if the conditions are not right, then abiogenesis never happens. Evolution up to more intelligent organisms seems like the kind of thing that might eventually happen with enough time, whereas getting life started in the first place seems, intuitively, more like the kind of thing that's either possible or not in a more binary fashion given the initial conditions of a given planet.

If we accept a Rare Earth view, this suggests that ET life is probably less common than we might think and that ET scenarios may not play a huge role in our calculations about future trajectories. Of course, this view could be significantly disrupted if life were discovered on other planets.

Given the massive uncertainty that remains, none of these conclusions should be taken too seriously.

Update, Feb. 2015: Doing away with anthropics

In 2015 I finally internalized an idea that a friend had told me over a year ago: "You should think of yourself as all of your copies at once." That is, if the algorithm corresponding to your thoughts and choices is being implemented in many locations, you should act as if you are the set of all of those instances of your algorithm, because what your algorithm chooses, all of those instances choose. This leads to a view in which we no longer perform anthropic reasoning to explain why we are this particular person at this particular spatiotemporal location, because we are all people at all locations who implement our decision algorithm. For instance, in conventional anthropics, we could ask what the probability is that Sleeping Beauty is waking up on Tuesday. But assuming the coin lands tails, Sleeping Beauty is the set of her copies on both Monday and Tuesday, so this question is not well defined, because "she" extends over both days.

Stuart Armstrong has formalized this view as follows:

Anthropic Decision Theory (ADT): An agent should first find all the decisions linked with their own. Then they should maximise expected utility, acting as if they simultaneously controlled the outcomes of all linked decisions, and using the objective (non-anthropic) probabilities of the various worlds.

In hindsight, this seems to me the obvious approach. It suggests that we can disregard the "Anthropic plausibility" sections of the preceding analysis, so long as we accurately evaluate the "Our impact if true" sections to mean "The collective impact of all of our copies if true".

Armstrong typically deploys his ADT in thought experiments where all the relevant details of the universe are known, and the agent knows what his/her algorithm is. In practice we don't know either of those with precision and so need to apply probabilities over various possibilities.

For example, consider the debate between early filters (Rare Earth) and late filters (Doomsday). If we knew exactly which algorithm we are and exactly what the laws of physics were, then we should be able to logically infer whether Rare Earth or Doomsday was true because we could compute all of physics, look for the exact copies of our algorithm, and see whether they were Rare or Doomed. It's only because we lack this degree of omniscience that the Fermi paradox is a puzzle in the first place. What we do as an approximation is assign probabilities to Rare Earth and Doomsday and then combine this with how much impact we would have in either case.

The prudential comparison runs as follows:

- If the filter is early, then humans are vastly more likely to colonize an uncontested swath of the cosmos, which means any given planet's suffering-reduction efforts will have vastly more expected impact.

- However, if the filter is late, then any given planet is unlikely to colonize, but there are more total copies of us, so whatever impact we can have is multiplied by that number of copies.

Comparison assuming all Earths have a copy of you

To make things easier, assume for now that every pre-spacefaring planet that contains intelligent life contains one copy of you. This assumption will be discarded in the next subsection.

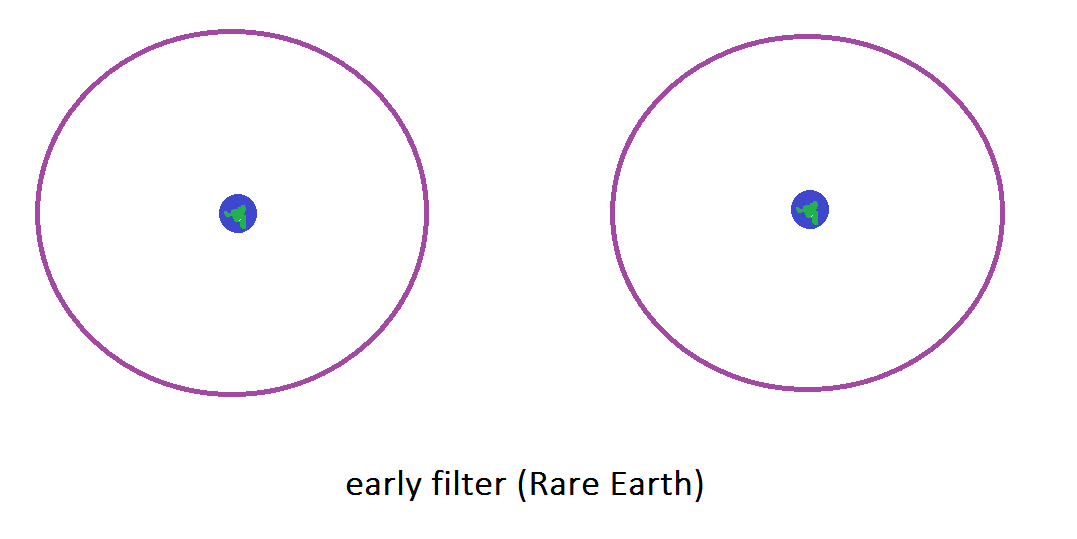

We can illustrate the comparison between Rare Earth and Doomsday in pictures. The following shows Rare Earth, with the purple boundary indicating that post-humans eventually colonize space throughout the circled region:

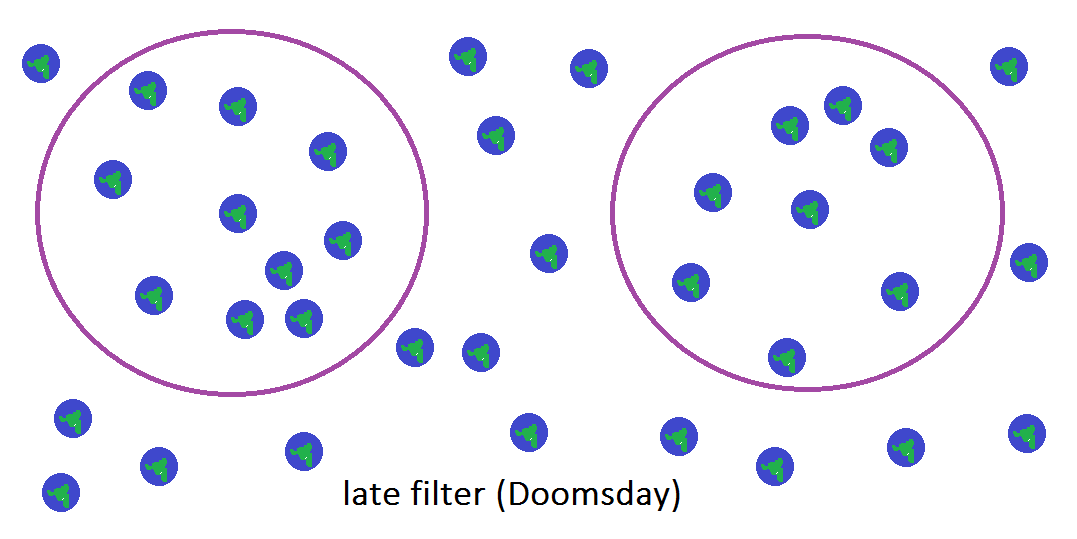

And here's a Doomsday scenario, with lots more Earths but a much smaller per-Earth chance of colonizing:

Because, by assumption, there's one copy of you on every Earth, the collective "you" consisting of all copies together achieves the same impact either way: in both pictures there are two Earths from which you do colonize, and on those two Earths your far-future suffering-reduction efforts make a difference.

Indeed, we can see that total impact is roughly determined by how much volume is covered by purple circles, regardless of the rarity of intelligent life. Whether the filter is early or late doesn't necessarily affect your total impact (where "your" means "the collection of copies of you").

However, the Great Filter's location may affect whether to prioritize far-future or short-term suffering reduction. That's because in the Doomsday case, there are many more humans on their own planets. So if, instead of betting on far-future impact, they turned their focus toward helping other humans and animals nearby, many more humans and animals would be helped. In this sense, we do get something right when we reduce our estimate of the value of far-future work in the Doomsday scenario: The value of far-future work relative to short-term work is lower (but still possibly much greater than 1:1).
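To make the comparison above concrete, here's a toy tally in Python. The numbers are hypothetical and chosen only to mirror the pictures:

- Under Rare Earth, there are 2 Earths that each colonize with certainty.
- Under Doomsday, there are 20 Earths that each colonize with probability 0.1.
- There is one copy of you per Earth, and the circles don't overlap.
- Far-future and short-term value are measured in arbitrary units, so only the comparison between scenarios means anything.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Toy model of one Great Filter hypothesis (all numbers hypothetical)."""
    name: str
    earths_with_you: int            # one copy of you per Earth, as assumed above
    p_colonize: float               # chance that a given Earth colonizes space
    circle_volume: float = 1.0      # volume of one purple circle (arbitrary units)
    short_term_value: float = 1.0   # value of helping beings nearby, per Earth

    def far_future_impact(self) -> float:
        # Expected volume of purple circles, assuming circles don't overlap
        return self.earths_with_you * self.p_colonize * self.circle_volume

    def short_term_impact(self) -> float:
        # Every copy can help humans and animals on its own planet
        return self.earths_with_you * self.short_term_value

    def far_to_short_ratio(self) -> float:
        return self.far_future_impact() / self.short_term_impact()


rare_earth = Scenario("Rare Earth", earths_with_you=2, p_colonize=1.0)
doomsday = Scenario("Doomsday", earths_with_you=20, p_colonize=0.1)

for s in (rare_earth, doomsday):
    print(f"{s.name}: far-future {s.far_future_impact():.1f}, "
          f"short-term {s.short_term_impact():.1f}, "
          f"ratio {s.far_to_short_ratio():.2f}")
```

Total far-future impact comes out the same (2.0 circles) in both scenarios. However, the ratio of far-future to short-term value is 10 times lower under Doomsday, which is the sense in which the filter's location still matters.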

Comparison not assuming all Earths have a copy of you

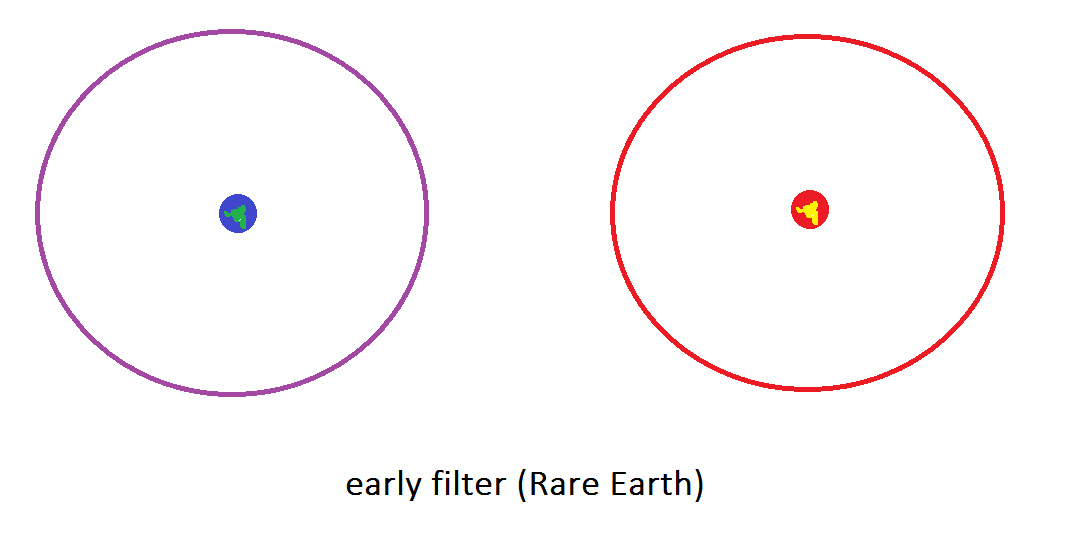

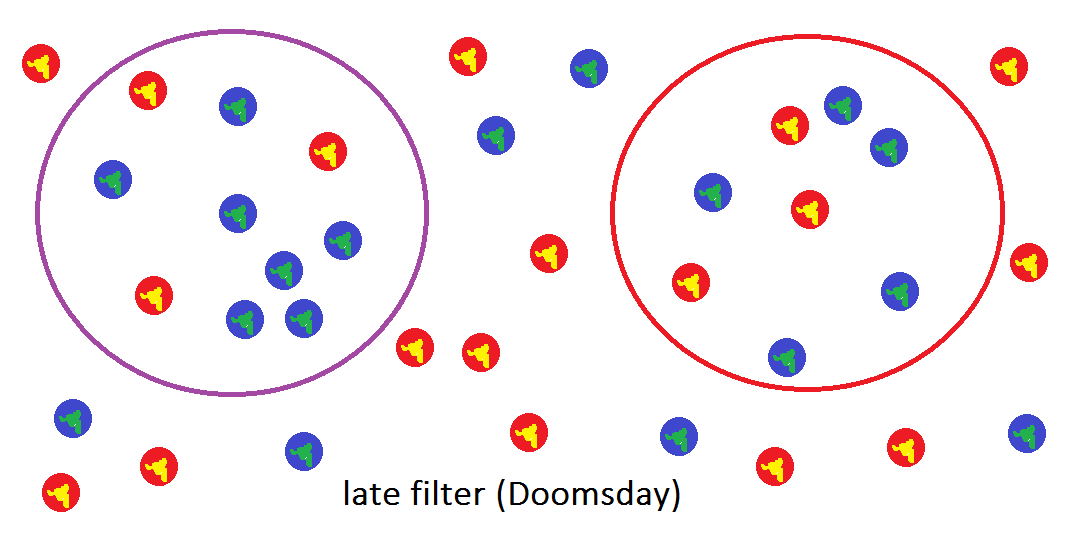

Now let's relax the assumption that all planets with intelligent life contain one copy of you. In fact, almost all planets with intelligent life contain zero copies of you. To make drawing easier, though, assume that 1/2 of planets with intelligent life contain a copy of you. In the following diagrams, a blue planet with green land contains a copy of you, and a red planet with yellow land doesn't. A purple circle still indicates regions of the cosmos colonized by a planet that contained you, while a red circle indicates regions colonized by a planet that didn't contain you.

So the same reasoning applies as before. All we've done is multiply every calculation by the tiny probability that a given planet contains a copy of you.

What should we conclude using ADT?

If you're optimizing for far-future impact, you should act as if true whichever hypothesis has the greatest product of (intrinsic probability)*(size of circles colonized by planets containing copies of you). The first factor in this product is the prior probability of the hypothesis, and the second indicates how much influence your copies have if the hypothesis is true.

Here's an example of what this formula tells us. If we thought that intelligent life was probably exceedingly uncommon—so much that most of the universe would always go uncolonized—then ADT prudentially disfavors actions based on this premise, since your copies would have such little impact in that case. However, once much of the universe is able to be colonized in some way or other, ADT doesn't favor hypotheses with late filters compared with early ones, in contrast with SSA+SIA. This is because the size of circles colonized by planets with you on them doesn't increase as more planets are added past the point where most of space is filled by circles. If we compare space with the "carrying capacity" of an ecosystem, then this point essentially translates to the idea that once organism densities are sufficiently high, the total population of the species won't actually increase even if parents have lots of children.
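The "carrying capacity" point can be sketched with a standard coverage formula. This sketch rests on two simplifications of mine that are not part of the argument above:

- Colonizing planets are scattered at random. The fraction of space inside at least one circle is then 1 - exp(-x), where x is the expected number of circles overlapping a point. That number is planets with intelligent life per unit volume, times each planet's colonization probability, times circle volume.
- Contested regions go to a randomly chosen colonizer. Planets containing copies of you therefore end up with a fraction p_you of colonized space.

The function names and parameters are hypothetical.

```python
import math


def colonized_fraction(planets_per_volume: float, p_colonize: float,
                       circle_volume: float) -> float:
    """Fraction of space inside at least one colonization circle, assuming
    colonizing planets are placed at random (a Poisson process)."""
    expected_circles_per_point = planets_per_volume * p_colonize * circle_volume
    return -math.expm1(-expected_circles_per_point)  # = 1 - exp(-x), accurate for small x


def your_share(planets_per_volume: float, p_colonize: float,
               circle_volume: float, p_you: float) -> float:
    """Space colonized by planets containing copies of you, assuming contested
    regions go to a randomly chosen colonizer."""
    return p_you * colonized_fraction(planets_per_volume, p_colonize, circle_volume)


for density in (0.01, 0.1, 1, 10, 100):
    share = your_share(density, p_colonize=1.0, circle_volume=1.0, p_you=0.5)
    print(f"planets per circle volume = {density:>6}: your share = {share:.4f}")
```

Below saturation, doubling the number of planets roughly doubles your copies' share. Past the point where circles fill space, the share stops growing. A late-filter hypothesis with the same product of planets and colonization probability gives exactly the same share as an early-filter one. For example, 10 planets each colonizing with probability 0.1 match 1 planet colonizing with certainty.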

I find Rare Earth a priori much more likely than Doomsday, i.e., I place higher intrinsic probability on early filters. This suggests that the optimum of (intrinsic probability)*(size of circles colonized by planets containing copies of you) is the hypothesis that Earth is rare within, say, the Virgo Supercluster but that there are probably Earth-like planets not very far outside the region that we can expect to colonize.

Note that correlations among copies of you are crucial to this argument. If you ignored correlations, then you would only consider the possible effects of space colonization by one particular planet that's home to a single clump of atoms running your algorithm. The only factors affecting the expected impact of this clump of atoms are the relative probabilities of an early vs. late filter. A prudential argument by this particular atom clump would optimize for hypotheses with high values of (intrinsic probability)*(probability that this particular planet colonizes). If the intrinsic probability were highest for extreme Rare Earth scenarios, which have high early filters and hence low late filters, then this decision theory would act as if extreme Rare Earth were true. In contrast, ADT acts as if only moderate Rare Earth is true, since moderate Rare Earth implies you have more total copies.
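Here's a toy comparison of the two decision rules. The priors are made up so that extreme Rare Earth is a priori most probable, as in the scenario just described. Every hypothesis here has an early filter, so a planet with intelligent life colonizes with certainty. The hypotheses differ only in the expected number of intelligent-life planets per colonizable region. The coverage term assumes random placement, giving 1 - exp(-x). The priors, `P_YOU`, and all names are hypothetical.

```python
import math

# Hypothetical hypotheses: expected number of planets with intelligent life per
# region that one colonizing civilization could fill, mapped to an assumed prior.
PRIORS = {1e-6: 0.30, 1e-3: 0.25, 0.1: 0.15, 1.0: 0.12, 3.0: 0.10, 10.0: 0.06, 100.0: 0.02}
P_COLONIZE = 1.0   # early filter: a planet that gets intelligent life colonizes
P_YOU = 1e-9       # hypothetical chance that such a planet contains a copy of you


def adt_weight(density: float) -> float:
    """Prior times the share of space colonized by planets containing copies of you."""
    coverage = -math.expm1(-density * P_COLONIZE)  # 1 - exp(-x)
    return PRIORS[density] * P_YOU * coverage


def single_clump_weight(density: float) -> float:
    """Prior times the chance that this one particular planet colonizes."""
    return PRIORS[density] * P_COLONIZE


adt_choice = max(PRIORS, key=adt_weight)
clump_choice = max(PRIORS, key=single_clump_weight)
print(f"ADT acts as if there are ~{adt_choice:g} intelligent planets per region")
print(f"Single-clump rule acts as if there are ~{clump_choice:g} per region")
```

With these numbers, the single-clump rule acts on the rarest hypothesis, because its prior is highest and colonization is certain under every hypothesis. ADT instead acts on a moderately rare hypothesis, with a few Earth-like planets per colonizable region. That's because a tiny density leaves almost all of space uncolonized by copies of you.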

What is "intrinsic probability"?

How should we interpret the "intrinsic probability" part of "(intrinsic probability)*(size of circles colonized by planets containing copies of you)"?

The most standard way is probably just to take it as a brute factor—the probability of that particular hypothesis being actual rather than another.

In a sufficiently big universe, that factor has a slightly different meaning, since in such a universe, all possible configurations are actualized somewhere, if only as "freak worlds" that materialize for a split second due to random particle fluctuations and then disappear soon thereafter. Those freak worlds do contain copies of "you", but they're not robust, so if "you" decide to take an action, the effects of that action in the freak worlds don't extend very far, since the worlds soon poof out of existence as the particles randomly reorganize further. For decision purposes, the copies of "you" that matter to outcomes are those where your algorithm is robustly implemented so that your choices have lasting impacts. Thus, in this case, the "intrinsic probability" of a hypothesis refers to the fraction of places in the universe where that hypothesis is robustly implemented in the sense that your choices will reliably have expected impacts on later events. For example, to say that Rare Earth is more intrinsically probable means that there are more places in the universe where Earth-like planets are rare, and the places where Earth-like planets are common are either less frequent or less robustly instantiated.

Note that, like ADT did with anthropics, this style of thinking replaces "probability" with "amount of impact", since all events happen (i.e., all events have probability 1), and the relevant question is how many times they happen. Naively we might interpret this "how many times they happen" as a probability statement about which instance you happen to be, i.e., you're more likely to be one of the more common instances than one of the more rare instances. But this is misleading, because "you" are all of your instances at once. We can thus only speak of "how big your collection of copies is" or "how much impact you have" rather than "which instance you are". (Wei Dai has discussed similar ideas.)

Would aliens arise eventually?

The Fermi paradox typically focuses on the question of whether ETs exist now. But maybe a more important question is: Will ETs ever exist?[c] Whether our part of the universe gets colonized depends on whether spacefaring ETs ever emerge, not just on whether they coexist with us at present.[d]

To make things concrete, consider the following framework. Say we're ~10 billion years past the point where life could have emerged in the universe. (I'm just using "10 billion" because it's a round number, not because it's precise.) Star formation will end within 1-100 trillion years. For simplicity, assume it's 10 trillion years, and suppose that technologically advanced civilizations can continue to emerge until then.[e] I'd like an expert in astrobiology to confirm that assumption, but it seems plausible, because "Planets form within a few tens of millions of years of their star forming", so as new stars get created, I assume that new planets (including a tiny number of potentially habitable planets) get created in short order. The Drake equation is based on rates of star formation.

Now the question is: If intelligence on Earth doesn't colonize our part of the universe, will someone else do so within the next 10 trillion years? If the probability of a spacefaring civilization emerging per 10 billion years is greater than 0.001, then the answer is probably "yes", while if that probability is much smaller than 0.001, the answer is probably "no". Assume that once a spacefaring civilization emerges, it permanently takes control of its part of the universe and prevents any future civilizations from developing.
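The 0.001 threshold comes from the number of 10-billion-year periods in 10 trillion years, which is 1000. Assume, as a simplification that ignores declining star formation, that each period is an independent chance with probability Q. Then the chance that someone emerges at some point is 1 - (1 - Q)^1000. Here's a sketch with a hypothetical helper name:

```python
def p_someone_emerges(q_per_period: float, periods: int = 1000) -> float:
    """Chance that at least one spacefaring civilization emerges within
    `periods` independent 10-billion-year periods, each with probability q."""
    return 1.0 - (1.0 - q_per_period) ** periods


for q in (0.01, 0.001, 1e-4, 1e-6):
    print(f"Q = {q:g}: P(someone emerges within 10 trillion years) = "
          f"{p_someone_emerges(q):.3f}")
```

At Q = 0.001 the chance is about 63%, so 0.001 is roughly where "probably yes" turns into "probably no". At Q = 0.0001 it drops to under 10%.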

What is the probability that a spacefaring civilization will emerge within a 10-billion-year period? Let's call this Q. Our prior probabilities for Q should be pretty broad, since the factors that make it hard to develop intelligent life could be minimal, fairly steep, or extremely steep, with lots of variations in between. Q could easily be any of 1, 0.001, or 0.000000000001. Then this prior probability distribution needs to be updated based on our evidence. Given that life which looks set to colonize space emerged (apparently) once in 10 billion years (rather than taking, say, 100 billion years to emerge), we should update toward Q being pretty high. For instance, the likelihood for Q = 1 is 1000 times higher than the likelihood for Q = 0.001. If Q = 1 had about the same prior probability as Q = 0.001,[f] then, ignoring other possible values of Q, our posterior probability for Q = 1 would be over 99.9%. This means we should indeed expect to see other spacefaring ETs if Earth-based intelligence doesn't colonize. Of course, this is a strong conclusion to assert, so it needs to be taken with many grains of salt. Given the uncertainty in my input parameters, probability distributions, and model, in practice I wouldn't put the probability that future ETs will colonize in our place higher than, say, ~70%, since it does look naively as though Earth is pretty alone and that the emergence of ET life is pretty difficult.
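The update in the preceding paragraph is a two-hypothesis Bayes calculation. This sketch uses the paragraph's own simplification: the likelihood of a civilization like ours appearing within the first 10-billion-year period is just Q. The function name is hypothetical.

```python
def posterior(priors: dict, likelihood) -> dict:
    """Discrete Bayes update: normalize prior * likelihood across hypotheses."""
    unnormalized = {h: p * likelihood(h) for h, p in priors.items()}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}


# Equal priors on the two values of Q compared in the text (other values ignored).
priors = {1.0: 0.5, 0.001: 0.5}
# Under this framing, the chance of a civilization like ours appearing within the
# first 10-billion-year period is just Q itself.
post = posterior(priors, likelihood=lambda q: q)
print(f"P(Q = 1 | evidence) = {post[1.0]:.4%}")
```

The conclusion is only as strong as the equal-prior assumption. With these likelihoods, Q = 1 would need a prior roughly 1000 times smaller than that of Q = 0.001 before the two came out even.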

The above paragraph relied on ordinary Bayesian updating, but the same point can be made in the language of ADT. Consider two scenarios:

- Spacefaring civilizations develop about once every 10 billion years.

- Spacefaring civilizations develop about once every 10 trillion years.

Suppose these possibilities have equal prior probability. We find ourselves (roughly) in the first ~10 billion years since intelligent life was possible. So across many Hubble volumes throughout the multiverse, a given Hubble volume is ~1000 times more likely to contain an exact copy of us if hypothesis 1 is true than if hypothesis 2 is true. That's because all (non-Boltzmann-brain and non-simulated[g]) copies of us must be in the first ~10 billion years, while 99.9% of initial spacefaring civilizations given hypothesis 2 don't emerge in the first ~10 billion years. So there are ~1000 times more copies of me if hypothesis 1 is true, and thus it deserves ~1000 times more prudential weight. This analysis has so far ignored correlations between the value of Q and other facts that determine how much impact we can have. For example, if Q is bigger, it's more likely that someone will eventually colonize any given Hubble volume, so our impact comes mainly from displacing someone else who would have arisen. In contrast, if Q is pretty small, it's less clear that anyone will eventually colonize our Hubble volume, in which case our impact comes from whether the Hubble volume gets colonized at all.

One objection to this framing of the question is the following: Rather than thinking about life forming with some rate per 10 billion years, maybe life either forms quickly or never forms at all. The hypothesis that "life either forms in the first 10 billion years or never forms at all" is as consistent with what we see (and implies as many copies of us) as the hypothesis that "life forms once every 10 billion years". But the former hypothesis implies that if we don't colonize, no one else will, while the latter hypothesis implies that someone else very likely will colonize if we don't. The idea that if life doesn't form soon, it never will seems reasonable on any single planet. As an analogy, consider a computer program designed to search for a solution to some problem. If the program doesn't output a solution in the first 10 billion years after it starts running, it seems reasonable to suppose that the program is just poorly written and will never produce the solution. However, it's harder to defend the hypothesis that "if life doesn't form in 10 billion years, it never will" for all planets collectively, since new planets are forming all the time, and a newly formed planet hasn't yet even begun to try to create life.

Expanding universe[h]

Given the metric expansion of space, the number of stars accessible to any given planet will decrease over time. This means that one instance of space colonization now will probably create more suffering than one instance of space colonization later. Of course, if the probability of ETs emerging eventually is close to 1 even within a small region of space, then we should expect most of our future light cone to be colonized anyway. But if the probability of ETs per small region of space is not close to 1, then once space expands more, we should expect less of our future light cone to be colonized if we don't do it.

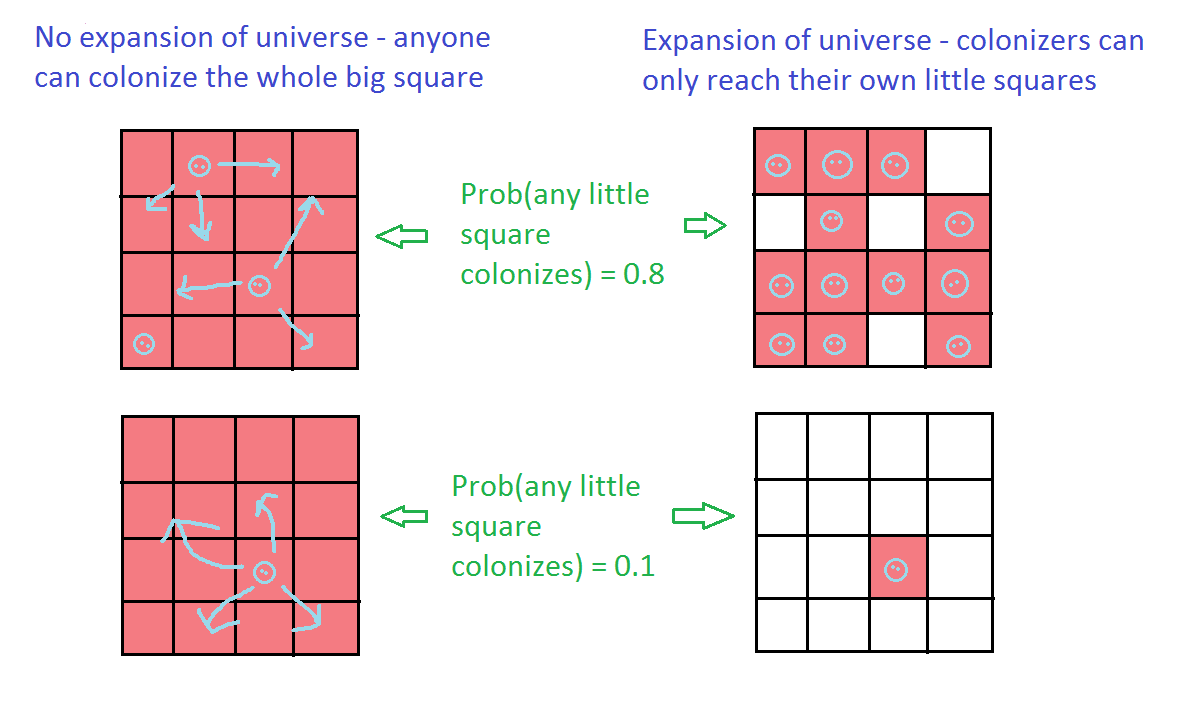

The following figure illustrates this point:

The big square, consisting of 16 smaller squares, represents our future light cone. Each smaller square represents a smaller region of space, such as a few galaxies. (In actuality, these squares should be spheres, since real space colonization takes place in three dimensions.) The small faces represent colonizing civilizations. Red squares represent regions that get colonized, with the color red representing the suffering that would be created as a result of space colonization.

The top row shows a scenario where the probability of a single small square containing a colonizing civilization is near 1, while for the bottom row, that probability is closer to 0. The fractions of red squares in the right column aren't exactly 0.8 and 0.1 because squares are filled in randomly, and a random sample of only 16 squares won't match those proportions exactly.

The left side of the figure illustrates what would be the case if space weren't expanding.

- The top left shows that if ETs are relatively common, then there might be a few different civilizations that colonize. But in any case, the whole big square gets colonized because the whole big square is accessible from any little square.

- The bottom left shows that even if colonizing civilizations are relatively rare, as long as at least one civilization colonizes, then the whole big square gets colonized because all other squares are accessible.

The right side shows what's actually the case for our expanding universe. Assuming a civilization colonizes relatively late, after space has already expanded somewhat, the civilization can only reach the galaxies within a small square.

- The top right shows that if civilizations are very common, then most of space gets colonized anyway, one small square at a time.

- The bottom right shows that if civilizations are rare, then most of space doesn't get colonized after all. This undercuts the argument that "even if there are no ETs now, there probably will be eventually, and they'll colonize our future light cone" because even if a few ETs do emerge eventually, they may only be able to colonize limited regions of space relative to what (post-)humans could colonize by embarking on space colonization now.

This figure is stylized but captures the main point. A slightly more accurate version of this picture would pick the planets that develop colonizing civilizations first and then draw circles around them to represent how far they could colonize. Those circles would sometimes overlap with one another rather than being disjoint squares.
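The stylized figure can be reproduced with a few lines of Python. Following the figure, each of the 16 small squares independently hosts a colonizing civilization with probability p. I've used 0.8 for the top row and 0.1 for the bottom row. Without expansion, a single colonizer reaches the whole big square. With expansion, each colonizer reaches only its own square. This keeps the figure's disjoint-squares simplification rather than the overlapping circles just mentioned, and the function names are made up.

```python
import random


def expected_fraction(p: float, n_squares: int = 16, expanding: bool = True) -> float:
    """Closed-form expected fraction of the big square that gets colonized."""
    if expanding:
        return p  # each small square is colonized only by its own civilization
    return 1.0 - (1.0 - p) ** n_squares  # one colonizer anywhere reaches everything


def simulate_fraction(p: float, n_squares: int = 16, expanding: bool = True,
                      trials: int = 10_000, seed: int = 0) -> float:
    """Monte Carlo version of the stylized figure: each small square independently
    hosts a colonizing civilization with probability p."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        hosts = sum(rng.random() < p for _ in range(n_squares))
        if expanding:
            total += hosts / n_squares
        else:
            total += 1.0 if hosts > 0 else 0.0
    return total / trials


for p in (0.8, 0.1):
    for expanding in (False, True):
        label = "expanding" if expanding else "static"
        print(f"p = {p}, {label}: exact {expected_fraction(p, expanding=expanding):.3f}, "
              f"simulated {simulate_fraction(p, expanding=expanding):.3f}")
```

The bottom row is the interesting case. Without expansion, a 10% per-square chance still gets about 81% of the big square colonized in expectation. With expansion, only about 10% is.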

Star formation declines exponentially?

thegreatatuin (2016) is a blog post by a molecular biologist who seems to have some acquaintance with cosmology. He says: "Numerous publications for decades at this point have performed similar measurements of star formation rate over time, and all those with sufficient detail reveal one, simple, important fact: the rate of star formation per unit mass in the universe as a whole is decaying exponentially, and has been for most of the history of the universe." After estimating a graph for star-formation rate over time, thegreatatuin (2016) says: "Star formation is a phenomenon of the early universe, a temporary phase not something that goes on stably." In fact, "at the present moment ~90% of all stars that will ever exist already exist." This seems to undercut the argument that ETs are highly likely to eventually colonize because we're so early in the lifetime of star formation. In fact, we're not early within the set of stars that will ever form.
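Here's a rough check on what "~90% of all stars that will ever exist already exist" implies. This sketch assumes, as a simplification of mine rather than thegreatatuin's model, that the total star-formation rate has declined as exp(-t/tau) since the Big Bang, about 13.8 billion years ago. It compares the resulting future:past ratio with the ~1000:1 ratio implied by steady star formation for 10 trillion more years in the earlier framework.

```python
import math

AGE_OF_UNIVERSE_GYR = 13.8       # approximate current age of the universe
FRACTION_ALREADY_FORMED = 0.9    # "~90% of all stars that will ever exist already exist"


def implied_decay_timescale(age_gyr: float, fraction_formed: float) -> float:
    """e-folding time tau (Gyr) such that 1 - exp(-age/tau) = fraction_formed,
    assuming the star-formation rate has declined as exp(-t/tau) since t = 0."""
    return age_gyr / -math.log1p(-fraction_formed)


tau = implied_decay_timescale(AGE_OF_UNIVERSE_GYR, FRACTION_ALREADY_FORMED)
future_to_past = (1 - FRACTION_ALREADY_FORMED) / FRACTION_ALREADY_FORMED
constant_rate_future_to_past = 10_000 / 10  # 10 trillion vs 10 billion years

print(f"implied decay timescale: {tau:.1f} Gyr")
print(f"future:past star formation, exponential decline: {future_to_past:.2f}")
print(f"future:past star formation, constant rate: {constant_rate_future_to_past:.0f}")
```

Under exponential decline, the future:past ratio is about 1:9 rather than 1000:1. That's why this finding undercuts the "we're early" argument.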

What about our position with respect to all planets that will ever form? thegreatatuin (2016): "I am utterly unprepared to do this rigorously since the astronomical community as a whole hasn’t got a good handle on planet formation – if there’s one incontrovertible takeaway from the Kepler mission, this is it. I can, however, pull up some numbers that are better than nothing and layer them on top of my star formation numbers and see what comes out." Based on some numbers from Behroozi and Peeples (2015), thegreatatuin (2016) finds:

- Under the power-law metallicity assumption, Earth shows up as younger than 72% of planetary systems that will exist.

- Under a sharp metallicity-cutoff assumption, Earth shows up as younger than 51% of planetary systems that will exist.

Behroozi and Peeples (2015) themselves concluded (p. 1815) that "Assuming that gas cooling and star formation continues, the Earth formed before 92 per cent of similar planets that the Universe will form". thegreatatuin (2016) objects to this conclusion "because it includes the assumption that ALL gas within galactic dark matter halos will eventually form stars." But even if it were correct, it wouldn't imply vastly more future planets than have already existed.

Behroozi and Peeples (2015) claim that if "Earth formed before 92 per cent of similar planets that the Universe will form", then "This implies a <8 per cent chance that we are the only civilization the Universe will ever have" (p. 1815). One intuitive argument for this is that if there will only ever be 1 civilization, then it'd be odd to find ourselves so early among planets, while if there will be, say, 10 civilizations, then it makes sense that the apparently first one (ourselves) is so early. Assuming the prior probability for there ever being ~1 civilization isn't that different from the prior for on the order of ~10 civilizations, we should update toward there being more like ~10 civilizations.

However, I suspect this argument ignores anthropic selection. The prior probability for there being 1 civilization is much higher than the prior for there being ~10 civilizations. That's because whenever it's very hard for intelligent life to emerge, in those places where it does emerge, there will almost always be only one emergence of intelligent life within that region of the universe. Whether we think the probability for intelligent life to form on a planet is 10^-1000, 10^-100, or 10^-21 (see Behroozi and Peeples 2015, p. 1814), in all of these cases we would expect there to be only one planet in the history of that region of the universe where intelligent life emerges. That's a huge range of possible values for the fraction of planets that develop intelligent life. Meanwhile, the range of probabilities for which intelligent life is likely enough to yield ~10 civilizations, but not so likely that we get hundreds, thousands, or billions of civilizations, is a much smaller slice. Hence, when viewed from the anthropically selected planet itself, the prior for ~10 planets eventually developing intelligent life is much smaller than the prior for 1 planet doing so.
(Note, however, that this anthropic argument ignores the prudential consideration that we should focus on scenarios where intelligent life is easier to get started because more total copies of us exist throughout the multiverse in that case. If we accept this prudential consideration and only focus on scenarios where a high fraction of Hubble volumes get colonized, such that the prior probability of on the order of ~1 civilization emerging is about the same as the prior for on the order of ~10 civilizations emerging, then I think the Behroozi and Peeples (2015) argument favoring ~10 civilizations over 1 civilization may still basically work?)
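The shape of the anthropic argument above can be checked numerically. This sketch makes three hypothetical assumptions:

- The region contains 10^20 candidate planets.
- The prior on the per-planet probability of intelligent life is log-uniform from 10^-1000 to 1.
- The number of civilizations follows a Poisson distribution.

For each value on the grid, the sketch conditions on at least one civilization existing (the anthropic selection). It computes the chance of exactly 1 civilization versus between 3 and 30, then averages over the prior. It implements only the main argument, not the copy-weighting from the parenthetical note.

```python
import math

LOG10_PLANETS = 20  # hypothetical number of candidate planets in the region: 10^20


def conditional_mass(log10_lam: float, k_lo: int, k_hi: int) -> float:
    """P(k_lo <= N <= k_hi | N >= 1) for N ~ Poisson(lam), with lam = 10**log10_lam."""
    if log10_lam < -8:
        # With so few expected civilizations, N >= 1 essentially means N == 1.
        return 1.0 if k_lo <= 1 <= k_hi else 0.0
    lam = 10.0 ** log10_lam
    p_at_least_one = -math.expm1(-lam)
    in_range = sum(math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))
                   for k in range(k_lo, k_hi + 1))
    return in_range / p_at_least_one


def prior_mass(k_lo: int, k_hi: int, log10_f_lo: float = -1000.0,
               log10_f_hi: float = 0.0, steps_per_decade: int = 20) -> float:
    """Average of conditional_mass over a log-uniform grid for the per-planet
    probability f of intelligent life."""
    n = int((log10_f_hi - log10_f_lo) * steps_per_decade)
    total = 0.0
    for i in range(n + 1):
        log10_f = log10_f_lo + i / steps_per_decade
        total += conditional_mass(log10_f + LOG10_PLANETS, k_lo, k_hi)
    return total / (n + 1)


about_one = prior_mass(1, 1)
about_ten = prior_mass(3, 30)
print(f"prior mass on exactly 1 civilization: {about_one:.3f}")
print(f"prior mass on 3-30 civilizations:     {about_ten:.4f}")
```

With these assumptions, exactly one civilization gets nearly all of the prior mass, and 3 to 30 civilizations get well under 1%. That's because every value of f below roughly 10^-21 yields, given that anyone exists, a single civilization. Weighting each grid point by the number of copies of us it implies, as the parenthetical note suggests, would instead shift weight toward larger f. That step is left out here.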

Footnotes

- [a] One of many reasons to doubt that we'd observe radio signals is that they aren't detectable beyond thousands of light-years. On the other hand, Robin Hanson reports the following conclusion by J. Richard Gott: "the energy of a single star might power an intermittent very narrow-band signal detectable to pre-explosive life like ours across the entire universe [Gott 82]."

- [b] An obviously absurd upper bound for how far away ETs could have originated is 46.6 billion light-years, the proper-distance radius of the observable universe. This corresponds to light from the beginning of the universe reaching us now. 46.6 billion light-years is about 10^3 times the radius of the Virgo Supercluster, at 55 million light-years. Assuming that volume is proportional to radius cubed, this would imply at most 10^9 times more planets from which aliens might have arisen, though I assume the actual multiplier is far less than 10^9. Also note that the region of space that could be reached from us by light-speed travel is at most our Hubble volume, which has a radius of only ~13.4 billion light-years, except in the extremely unlikely event that nontrivial amounts of matter can be stably transmitted through wormholes to regions beyond our light cone. (This topic is confusing, and I may have gotten it wrong. Let me know if so.)

- [c] This section was inspired by a short ebook by Magnus Vinding. I disagree with its conclusion that Earth-originating intelligence is more likely to reduce than increase suffering, but Vinding's arguments are worth debating.

- [d] Note that later-emerging civilizations matter less because they have less time to run computations, but this consideration can be ignored because it changes the analysis by at most a factor of ~2 or so: if a colonizing civilization emerges once, uniformly at random between 0 and T, its expected time to undertake astronomical computations is T/2.

- [e] That said, "old stars are dying faster than new ones are being born to replace them, which means that over the next few billion years the universe will lose its shine in what is known as universal dimming." This means we should expect fewer planets where life might originate in future years than at present, and hence the ratio of future potential for life to form to past potential for life to form is less than 1000 to 1.

Also note that in about 100 billion years, the expansion of the universe is expected to cause "all galaxies beyond the Milky Way's Local Group to disappear beyond the cosmic light horizon, removing them from the observable universe." The Local Group is just one galaxy group, containing "more than 54 galaxies, most of them dwarf galaxies." This is a tiny number of galaxies compared with how many are accessible right now. For instance, the Virgo Supercluster contains "At least 100 galaxy groups and clusters". So any given individual colonization wave after ~100 billion years from now is relatively trivial in altruistic terms. However, if Earth-based intelligence colonizes soon, it can spread throughout the Virgo Supercluster and remain in all of its galaxy groups at once, thereby preventing ETs from colonizing any of those galaxy groups.

- [f] Maybe the prior probability P(Q = 1) shouldn't be exactly equal to the prior probability P(Q = 0.001). One reason is that, since we haven't found any ET life at all yet, it's plausible that the portion of the Great Filter that makes it hard for life to emerge is pretty steep. Q = 1 means that the overall Great Filter is less strong than if Q = 0.001, so if Q = 1, there are fewer possible combinations of other filter sizes with the known-to-be-strong filter against life's getting started. Here's a simple but unrealistic example to illustrate the idea. Q = 1 says that the Great Filter is strong enough to yield a per-year probability of ~10^-10 for civilizations emerging, while Q = 0.001 says that the Great Filter gives a per-year probability of ~10^-13. Suppose the filter for life getting started implied, by itself, a probability of 10^-10 per year. If Q = 1, this would require that all other filter steps be very easy. In contrast, if Q = 0.001, the other filter steps have an additional 3 orders of magnitude of probability to distribute in many different ways among the remaining filter steps.

- [g] Note that we can indeed ignore Boltzmann-brain and simulated copies when thinking about far-future impact because more copies of us in those forms doesn't translate into more potential for far-future impact, unlike if there are more regular copies of us.

- [h] This section was partly inspired by a post by Andres Gomez Emilsson.