This page is probably not of interest to most readers. It contains some draft replies to The Lucas-Penrose Argument about Gödel's Theorem. After I wrote them, I discovered I was even more confused about how the argument was supposed to work, so I no longer think these points are apt.

Contents

My first reply

NOTE: I don't understand the following topic well, so what I say below may be mistaken. Read it at your own risk.

The Lucas-Penrose Argument about Gödel's Theorem may be the classic example of deceptive math that misleads on cognitive science. Many replies have been offered, which I won't review here. I'll present my personal take on the confusion.

Consider Penrose’s Gödelian Argument:

(1) suppose that “my reasoning powers are captured by some formal system F,” and, given this assumption, “consider the class of statements I can know to be true.” (2) Since I know that I am sound, F is sound, and so is F’, which is simply F plus the assumption (made in (1)) that I am F (incidentally, a sound formal system is one in which only valid arguments can be proven). But then (3) “I know that G(F’) is true, where this is the Gödel sentence of the system F’” (ibid). However, (4) Gödel’s first incompleteness theorem shows that F’ could not see that the Gödel sentence is true. Further, we can infer that (5) I am F’ (since F’ is merely F plus the assumption made in (1) that I am F), and we can also infer that I can see the truth of the Gödel sentence (and therefore given that we are F’, F’ can see the truth of the Gödel sentence). That is, (6) we have reached a contradiction (F’ can both see the truth of the Gödel sentence and cannot see the truth of the Gödel sentence). Therefore, (7) our initial assumption must be false, that is, F, or any formal system whatsoever, cannot capture my reasoning powers.

As a computationalist, I agree with (1)—at least if the "formal system" under consideration is sufficiently physics-oriented to potentially include manipulations of physical brain states based on physical laws. According to the physical Church-Turing thesis, these physical operations could in principle be simulated to sufficient precision on a Turing machine, i.e., a formal system.

Many critics object to (2), and I probably do as well, but this isn't where I think the fundamental error lies, so let's give Penrose (2) also.

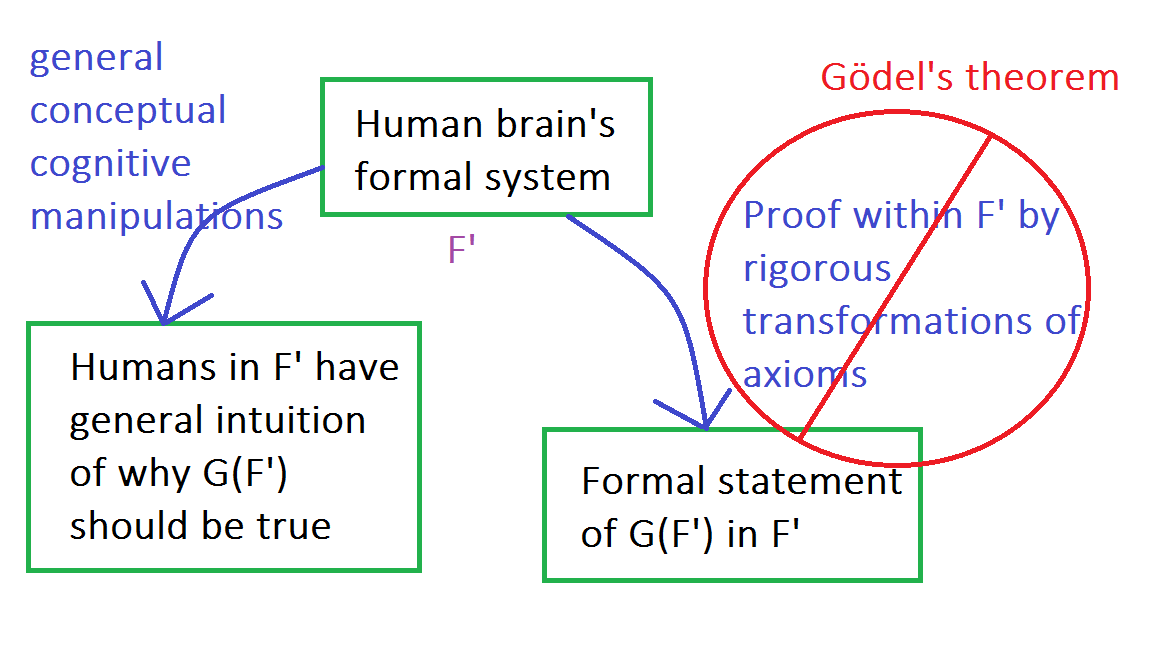

I object to (3). The state of my brain in which I "know" that G(F') is true is some state in which I'm engaging in abstract manipulation of some big conceptual cloud bubble for F', remembering how Gödel sentences work, and realizing that a similar trend applies here, which would make G(F') true. This is high-level, heuristic, pattern-matching reasoning. The state of my brain when doing this is some "theorem" of F', but it's not the theorem that corresponds to formally proving F'. That would be some vastly more complex physical state. The sense in which F' could not see the truth of G(F') is in this latter, rigorously formal sense, which my brain has not reached when it intuits the truth of G(F') in an informal manner. So Gödel’s first incompleteness theorem has nothing to say about my intuitive grasp of G(F').

John Baez explained the idea in a different context, which I'm co-opting to the Penrose case:

What’s happening now is that [Penrose is] using ‘provable’ in an intuitive sense, where any perfectly convincing argument counts as a proof. [...]

But in formal logic we have to write down a system of precise rules of proof; then we say something is ‘provable’ if it’s provable using those rules.

Basically, Penrose is conflating

- proving G(F') within F', which humans obviously cannot do by Gödel’s theorem

- reaching some state other than a full formal proof of G(F') that corresponds to our brains intuiting (perhaps correctly) the truth of G(F') using heuristic pattern-matching reasoning.

Proving G(F') within F', if it were possible, would involve going through some immensely intricate series of steps, which is not anything like what humans do when they see that G(F') is true. Of course, Penrose agrees with this, but he forgets that some other result of formal manipulations of F' can be humans' state of intuitive understanding that G(F') is true.

Penrose should ask: If humans don't prove G(F') formally, how do they do it? Whatever physical process humans use can, if the computationalist is right, be modeled as operations on a formal system. But those operations need have no resemblance to the sequence of operations that would prove the same theorem that they intuitively grasp.

To belabor the point once more, here's a summary diagram:

My second reply

In "Minds, Machines and Gödel" (1961), John R. Lucas explains how a machine can be converted into a formal system: The system's initial conditions can be seen as axioms, and rules for transitioning from one system state to another can be seen as if-then rules of inference. As the machine proceeds forward, its formal-system counterpart would be systematically "proving" new theorems, with each new state of the machine being a new theorem.

Lucas doesn't offer a practical example, but let me give it a shot. Let the initial state of a human be specified by all the physical variables describing him to sufficient precision. Then this physical system evolves with inference rules like "If the person is in state #02934234823472938439439 (atom #1 is at position (.2034, 2.342, 99.204), atom #2 is at position ..., atom #1's velocity is ..., etc.), then the person moves to state #82342398423492384392423 (atom #1 is at position ..., atom #1's velocity is ..., etc.)." Starting with just the axiom of the initial state of the person, this system would deduce a new "theorem" (state) for each next step of the system. If the system looped back to an earlier state, then the theorems it would be proving wouldn't be new, but it seems unlikely a single person could loop back to one of his previous physical states within a human lifespan.

In fact, the specification above seems inadequate, because rules of inference for the human system can't work without knowledge of environmental inputs. And the local environment's behavior can't be perfectly predicted without knowledge of the wider environment. So rather than modeling a discrete person as a machine, I think we should model the entire universe as a machine, or at least some isolated subsystem of the universe whose evolution is independent of outside events. In other words, Lucas's argument has moved from attempting to refute computationalism with respect to the mind to attempting to refute computationalism for the universe as a whole, since a mind can't be isolated from the universe.

Roger Penrose developed a variant of the remainder of Lucas's argument, and I'll focus on it here. I quote the summary of it from the Internet Encyclopedia of Philosophy entry:

(1) suppose that “my reasoning powers are captured by some formal system F,” and, given this assumption, “consider the class of statements I can know to be true.” (2) Since I know that I am sound, F is sound, and so is F’, which is simply F plus the assumption (made in (1)) that I am F (incidentally, a sound formal system is one in which only valid arguments can be proven). But then (3) “I know that G(F’) is true, where this is the Gödel sentence of the system F’” (ibid). However, (4) Gödel’s first incompleteness theorem shows that F’ could not see that the Gödel sentence is true. Further, we can infer that (5) I am F’ (since F’ is merely F plus the assumption made in (1) that I am F), and we can also infer that I can see the truth of the Gödel sentence (and therefore given that we are F’, F’ can see the truth of the Gödel sentence). That is, (6) we have reached a contradiction (F’ can both see the truth of the Gödel sentence and cannot see the truth of the Gödel sentence). Therefore, (7) our initial assumption must be false, that is, F, or any formal system whatsoever, cannot capture my reasoning powers.

As a computationalist, I agree with (1)—at least if the "formal system" under consideration is sufficiently physics-oriented to potentially include manipulations of physical brain states based on physical laws, as we saw with my example above.

Many critics object to (2), and I probably do as well if we're thinking of human verbal statements as being produced by a formal system. But when we're considering a physics-oriented formal system whose only axiom is the initial conditions of the universe and whose only update rules are to move to other states of the universe, I don't see how we can get contradictions. Indeed, I'm not sure what truth values mean in this context. How would one state of the universe be the negation of another?

But in this case, Gödel's theorem doesn't even apply, because the formal system can't do arithmetic? Indeed, this formal system is more like a finite-state automaton that just moves to the next state in succession.

My third reply

I became confused about how a Turing machine can be seen as a formal system. I started a Quora thread about this and proffered my own answer there, but I still don't understand how we get an arithmetical theorem prover from a generic Turing machine.

Judging from the attention that Lucas and Penrose have received, it seems there probably is a way (not currently transparent to me) in which any Turing machine can be seen as a formal system subject to the requirements of Gödel's theorem. Otherwise these authors would just be debunking the claim that the human brain is a narrow GOFAI theorem-proving system, which basically no cognitive scientist believes.