Summary

Present-day computers, including your personal laptop or smartphone, share several parallels with the architecture of a brain. Because they incorporate many components of a cognitive system together in a way that allows them to perform many functions, personal computers are arguably more sentient than many present-day narrow-AI applications considered in isolation. This degree of sentience would be accentuated by the use of AI techniques, especially for motivated agency. (I don't encourage doing this.) Our pre-reflective intuitions seem to systematically underestimate the degree of sentience of some computers while systematically overestimating it for others. People tend to sympathize with embodied, baby-like creatures more than with abstract, intellectual, and mostly invisible computing systems.

Contents

- Summary

- Introduction

- Parallels between computers and brains

- Attention schema theory and Windows Task Manager

- The "brain as computer" metaphor: A reply to Epstein (2016)

- Prejudice against computer sentience

- Anthropomorphism: Too much and too little

- Are laptops emotional?

- Relevance of computer science to sentience and artificial intelligence

- Feedback

- Optional: Mathematical arguments against strong AI

- Footnotes

Introduction

"The question of whether computers can think is just like the question of whether submarines can swim." --Edsger Wybe Dijkstra

It's common to talk about consciousness potentially emerging from advances in AI, robotics, and agent simulation. People less often think of more mundane computing systems as potentially sentient, even though these systems are arguably more complex than many current AIs. A deep-learning image classifier may use cutting-edge technology, but in terms of how many of the components that make minds run it instantiates, a 1995 operating system is arguably closer to a brain than a 2015 image classifier considered in isolation.

I think present-day computers, including your personal laptop, deserve to be called marginally sentient. Similar suggestions might apply to network systems like the Internet, though I won't discuss them as much here.

Parallels between computers and brains

A personal computer performs many intelligent functions that have parallels with those of other sentient agents:

- Input and output: The computer can receive speech, take pictures, feel the touch of your fingers on a keyboard or touch-screen, etc. As output, it can emit pictures, sounds, words, etc., and it can even move physical objects through attached devices like a printer.

- Attention: The operating system allocates computing time (attention) to different processes in a timesharing fashion. When something important happens, such as a change in an external device or a software exception, an interrupt is raised so that the operating system can decide how to handle the situation.

- Context switching: In order to multitask, operating systems switch context from one task to another. Even though human brains are "parallel," we can't really multitask either; we too have to context-switch, at least when it comes to directing our full conscious attention.

- Memory: The computer has working memory in the form of RAM and long-term memory in the form of a hard disk. Like persistent spiking neuronal assemblies in brains, RAM requires constant electrical activity to maintain its informational state. In contrast, connection strengths among a brain's neurons persist even after electrical activity has ceased, similar to non-volatile hard-disk memory. Log files, such as those maintained by operating systems, constitute a form of episodic memory.

- Memory loss: In animals, memory depends on synapses between neurons. Long-term depression (LTD) reduces a synapse's strength and may be involved in memory loss in the hippocampus. Synapse loss correlates strongly with memory loss: "Compared with the other hallmarks of Alzheimer’s disease, synapse loss correlates best with impaired memory". Meanwhile, the World Wide Web "loses memories" via link rot, a loss of "synapses" (hyperlinks) between web pages that occurs when a destination page is moved to a new location without a redirect from the old URL to the new one. The web can also lose memories when sites are taken down entirely, which parallels memory loss due to neuron damage in animal brains.

- Rudimentary priming: Suppose your laptop runs a process that reads a chunk of memory and stops within 30 milliseconds, after which another process tries to access the same memory region. When it does so, the desired data may still be in a CPU cache, which reduces average access latency for the second process. This is similar to a basic form of priming. That said, priming typically involves speeding up access to related but not identical information. A better example of that might be link prefetching in web browsers.

- Keeping track of time: Both computers and brains can, with more or less precision, record the passage of time and attach "timestamps" to stored data.

- Sleep: Animals need sleep to reorganize their brains in an offline fashion that wouldn't be possible while the brains were running in their typical mode. Sleep seems to relate to memory consolidation. Computers sometimes also need to perform maintenance operations when not being actively used, such as disk defragmentation or system updates. Computers may also sleep to conserve energy, which is also one hypothesis for why animals sleep.

- Cognitive load: Human task performance is impaired by cognitive load, i.e., processing too much information at once or having too many things in working memory. Likewise, laptops slow down when the CPU is maximally used or when RAM consumption gets too high.

- Installing apps: In Consciousness Explained, Daniel Dennett describes how our cultural memes can be seen as software that gets installed into our brains. In one presentation, Dennett proposed a heuristic for thinking (the "'surely' alarm"). After telling the audience the idea, Dennett said, "I've just downloaded an app to your necktop."

- Virtual machines: Your laptop can emulate other operating systems by running a virtual machine. Likewise, our brains can emulate other computational systems. A trivial example is when our brains emulate a calculator by performing arithmetic, although this virtual machine is extremely slow. In Consciousness Explained, Dennett describes our brains as implementing a "von Neumannesque" serial virtual machine on top of parallel hardware. Indeed, Tadeusz Zawidzki asks:

if consciousness is the simulation of a von Neumannesque Joycean virtual machine on the parallel architecture of the brain, then why are real von Neumann machines, like standard desktop computers, not conscious?

Dennett's reply, as quoted by Zawidzki, is that brains need to do more self-reflection than computers do. However, I would add that self-reflection is a matter of degree, which is consistent with attributing a marginal degree of sentience to our laptops.

- Welfare monitoring: Different programs may have different forms of welfare. For the computer as a whole, one set of welfare measures concerns system performance in terms of speed, network bandwidth, etc. The computer monitors these variables (see "Task Manager" > "Performance" in Windows), and when RAM becomes scarce or the system otherwise becomes sluggish, the computer may alert the user. The computer might also kill processes that have become unresponsive in an effort to improve its welfare.

- Abstract data structures: Our brains process information by converting raw sensory data into more abstract representations. One clear instance of this is in the visual cortex, where earlier stages contain more raw input (e.g., in retinotopic maps), from which higher-level information is extracted by later stages. Similarly, a computer reads streams of bytes from its input sensors (e.g., a camera) and then, depending on the task, may extract higher-level information from this raw data and organize it into matrices, associative arrays, B-trees, or various other formats. Dennett: "We have to move away from everyday terms like 'images' and 'senses' and [instead] think about data structures and their powers."

- Face recognition: A face-recognition program extracts higher-level features from an image in order to identify face-like regions in it. Humans have a face-recognition classifier as well.

- Goal-directed behavior: Most of the applications that a computer runs have implicit goals, which can be thwarted by software errors, power outages, etc. Some of these programs are sophisticated enough to employ planning and optimization to determine how best to achieve their objectives, often by using predictive models. For instance, a database's query optimizer can choose an efficient way to execute a query based on a model of how long different parts of the query are likely to take if applied in a particular order. The operating system itself has goals of maintaining orderly execution and preventing processes from going rogue against one another. The operating system may kill programs that try to violate their access privileges.

- Information broadcasting: An application may have sub‑operations that receive or compute some information, which is then distributed for use by other parts of the application or by other applications.

- Metacognition: Operating systems monitor and regulate running processes ("thoughts"). System monitors watch how various parts of the computer are performing. Higher-order theories of consciousness suggest that thoughts about thoughts or sensations of sensations are crucial for conscious awareness. If so, do the Windows Task Manager or the Linux atop tool render the lower-level processes conscious? Visualization of a program's execution on a screen, either via debugging output or a GUI, might also be interpreted as "higher-order thoughts" about the computer's lower-level, "unconscious" thinking.

- Concept networks: Wikipedia articles resemble concepts in our brains, because they connect with many other related concepts in a content-addressable way. Getting lost browsing from one Wikipedia article to another is akin to a meandering train of thought. Vannevar Bush's 1945 proposal for a memex system, which would store data associatively in a way similar to how the human brain does, has to some degree been realized by hypertext and search engines. And while you probably don't have one on your laptop, a graph database models connections among entities in a manner analogous to the associative connections in human brains.

- Nagging: One of the functions of emotions like hunger or pain is to constantly remind the organism to attend to a problem (Minsky 2006, p. 70). Reminders on a computer, such as in a calendar application, can serve the same purpose of nagging you about things that need to be done.

- Diseases: Computers can catch viruses, which may impair their abilities to function. Antivirus software ("immune systems") detects harmful agents, possibly by checking for signatures of a virus ("antigens") that have been seen before.

- Social interaction: Computers interface with other computers via networks. They obey social norms, shake hands, and share information.

- Kludges: Gary Bernhardt describes software as a series of hacks on top of hacks, with a large degree of historical path-dependence. A similar situation applies with respect to evolved brains. Indeed, evolution's hacks tend to be even more complex because evolution lacks intelligent design and so muddles together memory and CPU, hardware and software, brain and body, etc. without a lot of separation of concerns. (That said, there certainly is some degree of localization. For instance, the central nervous system is mostly separated from other parts of the body. And within the brain, there's also significant functional specialization.)
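The cache-based form of priming described in the "Rudimentary priming" item above can be sketched as a toy model. This is an illustration under stated assumptions, not how real CPU caches work (real caches use fixed-size lines, set associativity, and hardware prefetchers): the class name `PrimingCache`, the block numbering, and the `HIT_COST`/`MISS_COST` latencies are all hypothetical. A repeated read of the same block becomes cheap, which is the identical-information case; the optional next-block prefetch makes a neighboring block cheap too, which is closer to priming of related information.

```python
from collections import OrderedDict

# Hypothetical latencies, in arbitrary time units.
HIT_COST = 1     # block already in the cache
MISS_COST = 100  # block must be fetched from main memory


class PrimingCache:
    """Toy least-recently-used cache with optional next-block prefetching."""

    def __init__(self, capacity, prefetch=False):
        self.capacity = capacity
        self.prefetch = prefetch
        self.blocks = OrderedDict()  # resident block numbers, least recently used first

    def _insert(self, block):
        self.blocks[block] = None
        self.blocks.move_to_end(block)  # mark as most recently used
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block

    def read(self, block):
        """Read one block and return its simulated access cost."""
        if block in self.blocks:
            self.blocks.move_to_end(block)  # a hit refreshes recency
            return HIT_COST
        if self.prefetch:
            self._insert(block + 1)  # "prime" the adjacent, related block
        self._insert(block)
        return MISS_COST


if __name__ == "__main__":
    cache = PrimingCache(capacity=8, prefetch=True)
    # Cold read, then a repeat of the same block, then its untouched neighbor.
    print(cache.read(42), cache.read(42), cache.read(43))  # 100 1 1
```

Adjacent block numbers are the simplest possible notion of "related"; browser link prefetching instead relies on hints supplied by the page about what the user is likely to request next.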

Wikipedia's "Global brain" article says:

The World-wide web in particular resembles the organization of a brain with its webpages (playing a role similar to neurons) connected by hyperlinks (playing a role similar to synapses), together forming an associative network along which information propagates.[2] This analogy becomes stronger with the rise of social media, such as Facebook, where links between personal pages represent relationships in a social network along which information propagates from person to person.[3] Such propagation is similar to the spreading activation that neural networks in the brain use to process information in a parallel, distributed manner.

It's often remarked that computers differ from brains in that computers operate serially. This presumably shouldn't affect our moral treatment of computers except insofar as it affects how much cognition is going on. Computers can do a great deal in a little time, so what they lose in parallelism they somewhat make up for in the speed of their processors. Also, a few computer architectures do incorporate parallelism.

"10 Important Differences Between Brains and Computers" has a nice summary of ways in which brains and laptops are not identical in implementation,a but I feel as though these are sort of the exceptions that prove the rule. We can imagine a similar article ‑‑ say, "10 Important Differences Between Chinese and American Cultures" ‑‑ where the fact that we can even make these nuanced comparisons shows that we're dealing with things not too far apart.

Regardless of specific implementation differences from brains, computers are fairly intelligent and sophisticated.

They're probably the most sentient things in your house apart from the humans, pets, and insects who occupy it. Along many dimensions, computers are vastly more computationally powerful than insects, though computers don't have such pronounced reward representations, and they don't learn using reinforcement (unless they're specifically running an AI reinforcement-learning algorithm). It's not very clear what a computer wants and what would make it suffer. The goals of an operating system seem rather abstract and informational, but we should keep in mind that many of the "higher" human pleasures are also abstract, like wanting to read a particular book or wanting to write a poem.

Computers also seem more sentient than plants: they have basically all the functionality that plants do, plus additional abilities that plants lack. The main exceptions are being alive and being able to reproduce, and it's not clear that these are very morally significant from a sentience standpoint.

"Why Robots Will Have Emotions" by Aaron Sloman and Monica Croucher suggests general, emotion-like design principles that intelligent minds, both biological and digital, may converge upon. Some of these apply to present-day computers, and, as the title suggests, many could apply to advanced robotic systems of the future.

Attention schema theory and Windows Task Manager

Michael Graziano has proposed the "attention schema theory" as a way to understand consciousness. I haven't read Graziano's full book on this topic, so I may be misrepresenting his position, but I have read a few of his articles, including Webb and Graziano (2015).

In this section, I describe how Windows Task Manager might be seen as a very crude version of an attention schema. This is not to suggest that Task Manager is especially important from the perspective of laptop sentience and that if Task Manager is not run, a laptop is not conscious. Rather, this comparison is just one example of the many places where simple versions of consciousness-like operations can be found throughout computational systems.

A laptop allocates "attention" (CPU, RAM, etc.) to different processes. These may then be monitored and simplistically modeled, such as with Task Manager.

Graziano proposes that "awareness is an internal model of attention." Likewise, Task Manager could be seen as a very simple internal model of attention.

Graziano adds: "numerous experiments suggest that it is sometimes possible to attend to something and yet be unaware of it." Likewise, it's possible to run Windows without running Task Manager. And bugs in Task Manager could potentially cause it to miss certain processes or misreport information about them.

Webb and Graziano (2015) explain that "awareness is the internal model of attention, used to help control attention". Likewise, Task Manager can be used to control a laptop's attention: "Right-clicking a process in the list allows changing the priority the process has, setting processor affinity (setting which CPU(s) the process can execute on), and allows the process to be ended." One could imagine augmenting Task Manager so that it makes these kinds of resource-allocation decisions without human input. Other kinds of computer systems do make automated resource-allocation decisions based on runtime performance data.
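Here is a hypothetical sketch of the augmented Task Manager imagined above, one that uses its own simplified model to steer the laptop's "attention". Everything in it is an assumption for illustration: the per-process tick counts are hard-coded rather than read from the operating system, the function names `summarize` and `rebalance` are invented, and the 50% hog threshold and one-step demotion rule are arbitrary. The controller sees only the coarse "percent of CPU" summary, never the scheduler's internal details, which is the sense in which it acts on a schema rather than on the mechanism itself.

```python
def summarize(cpu_ticks):
    """Reduce raw per-process tick counts to a coarse 'percent of CPU' schema."""
    total = sum(cpu_ticks.values())
    if total == 0:
        return {name: 0.0 for name in cpu_ticks}
    return {name: 100.0 * ticks / total for name, ticks in cpu_ticks.items()}


def rebalance(priorities, cpu_ticks, hog_threshold=50.0):
    """Demote processes that the schema reports as CPU hogs.

    `priorities` maps each process name to a non-negative integer priority and
    must contain every process in `cpu_ticks`. A new table is returned; the
    input table is not modified.
    """
    schema = summarize(cpu_ticks)
    adjusted = dict(priorities)
    for name, percent in schema.items():
        if percent > hog_threshold and adjusted[name] > 0:
            adjusted[name] -= 1  # lower priority so other processes get more turns
    return adjusted


if __name__ == "__main__":
    ticks = {"chrome.exe": 700, "explorer.exe": 200, "antivirus.exe": 100}
    print(summarize(ticks))
    print(rebalance({"chrome.exe": 2, "explorer.exe": 2, "antivirus.exe": 1}, ticks))
```

Because `summarize` throws away everything except proportions, the controller can be wrong in the same way a cartoon model can: it cannot tell a legitimately busy process from a runaway one.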

Webb and Graziano (2015) distinguish "top–down and bottom–up attentional effects". They say:

Bottom–up attentional effects, those that are driven by salient stimuli, are task-irrelevant, and their effect on attention is very briefly facilitatory followed by a period during which the effect is briefly inhibitory. Top–down attentional effects, those that are sensitive to task demands or current goals, are by definition task-relevant, and they can have a much more sustained facilitatory effect on attention.

Likewise, a laptop's allocation of computation can be affected in a bottom-up way by interrupts, such as pressing keyboard keys, or in a top-down way by giving different processes different priorities.
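The two routes into a laptop's "attention" can be illustrated with a toy scheduler. This is a hypothetical sketch, not how Windows actually schedules threads: the `Scheduler` class, the tick-based time slices, and the rule that any pending interrupt preempts all planned work are simplifying assumptions. Priorities set ahead of time play the top-down role; an interrupt such as a keypress plays the bottom-up role, briefly grabbing the processor before control returns to the prioritized queue.

```python
from collections import deque


class Scheduler:
    """Toy scheduler: fixed priorities (top-down) plus interrupts (bottom-up)."""

    def __init__(self):
        self.queues = {}           # priority level -> deque of process names
        self.interrupts = deque()  # pending interrupt handlers, oldest first
        self.timeline = []         # what received each tick of processor time

    def add_process(self, name, priority):
        self.queues.setdefault(priority, deque()).append(name)

    def raise_interrupt(self, handler_name):
        self.interrupts.append(handler_name)

    def tick(self):
        """Give one time slice to whatever currently wins the competition."""
        if self.interrupts:
            # Bottom-up: a salient external event preempts the planned work.
            self.timeline.append(self.interrupts.popleft())
        elif self.queues:
            # Top-down: serve the highest priority level, round-robin within it.
            queue = self.queues[max(self.queues)]
            name = queue.popleft()
            queue.append(name)
            self.timeline.append(name)
        else:
            self.timeline.append("idle")


if __name__ == "__main__":
    s = Scheduler()
    s.add_process("editor", priority=2)
    s.add_process("browser", priority=2)
    s.add_process("backup", priority=1)
    s.tick()
    s.tick()
    s.raise_interrupt("keyboard")  # the user presses a key
    s.tick()
    s.tick()
    print(s.timeline)  # ['editor', 'browser', 'keyboard', 'editor']
```

Note that "backup" never runs in this example: with strictly fixed priorities, lower-priority work can starve, which is why real schedulers typically add mechanisms such as priority aging or temporary boosts. The sketch also omits the brief-facilitation-then-inhibition time course that Webb and Graziano describe for bottom-up effects.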

Webb and Graziano (2015) say: "The hypothesized model of attention[...] would be more like a cartoon sketch that depicts the most important, and useful, aspects of attention, without representing any of the mechanistic details that make attention actually happen." Likewise, Task Manager depicts a simplistic summary of running processes without explaining how they work or how resource allocation is determined.

Webb and Graziano (2015) say: "a model of attention could help in predicting one’s own behavior." Likewise, an augmented version of Task Manager might make predictions like: "When I see my CPU utilization near 100% for an extended period, I expect the laptop fan to turn on" (because of the heat generated by so much CPU activity).
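The fan prediction could be written as a tiny forward model of the laptop's own behavior. This is a hypothetical rule, not a feature of Task Manager: the 90% threshold, the five-sample window, and the names `expect_fan_on` and `surprised` are made up for illustration.

```python
def expect_fan_on(cpu_samples, threshold=90.0, window=5):
    """Predict whether the fan is about to spin up.

    `cpu_samples` holds CPU utilization percentages, oldest first (for example,
    one reading per second). The prediction is "yes" only if every one of the
    last `window` readings is at or above `threshold`.
    """
    if len(cpu_samples) < window:
        return False  # too little history to count as "an extended period"
    return all(sample >= threshold for sample in cpu_samples[-window:])


def surprised(predicted_fan_on, observed_fan_on):
    """A prediction error: the self-model expected one thing and saw another."""
    return predicted_fan_on != observed_fan_on
```

A mismatch flagged by `surprised` (say, sustained full load but a silent fan) is the kind of signal such a self-model could use to notice that something in the machine has gone wrong.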

Webb and Graziano (2015) summarize their theory as follows:

subjective reports such as ‘I am aware of X’ involve the following steps. Stimulus X is encoded as a representation in the brain, competing with other stimulus representations for the brain’s limited processing resources. If stimulus X wins this signal competition, resulting in its being deeply processed by the brain, then stimulus X is attended. According to the theory, an additional step is needed to produce a report of subjective awareness of stimulus X. The brain has to compute a model of the process of attention itself. Attention is, in a sense, a relevant attribute of the stimulus. It’s red, it’s round, it’s at this location, and it’s being attended by me. The complex phenomenon of a stimulus being selectively processed by the brain, attention, is represented in a simplified model, an attention schema. This model leaves out many of the mechanistic details of the actual phenomenon of attention, and instead depicts a mysterious, physically impossible property – awareness.

I've modified this text into an analogous statement about Task Manager:

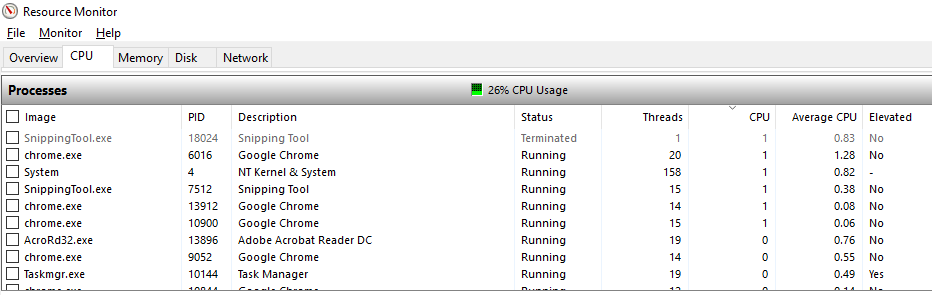

user-visible diagnostics such as ‘I am spending a lot of resources on process X’ involve the following steps. Process X is run on the laptop, competing with other processes for the laptop’s limited processing resources. If process X gets a lot of computing resources, resulting in its being deeply processed by the laptop, then process X is "attended". According to the theory, an additional step is needed to produce a report of the high allocation of resources to process X. The laptop has to compute a model of resource allocation itself. "Resources allocated" is, in a sense, a relevant attribute of the process. It has the name "chrome.exe", its status is "Running", it has this process ID, and it's using a high average percent of the CPU. The complex phenomenon of a process being given lots of computing resources by the operating system is represented in a simplified model, Task Manager. This model leaves out many of the mechanistic details of the actual phenomenon of allocating CPU cycles, and instead depicts a summary, high-level property – "percent of CPU being used by this process".

Following is a screenshot of my "Resource Monitor" screen, which shows the "chrome.exe" process mentioned in the above passage. ("Resource Monitor" is different from "Task Manager", but I'm conflating the two for simplicity of exposition.)

The "brain as computer" metaphor: A reply to Epstein (2016)

Epstein (2016) claims: "Your brain does not process information, retrieve knowledge or store memories. In short: your brain is not a computer". This article's bold statement turns out to be rather trivial on closer inspection. Epstein is essentially disputing definitions. He takes "computer" and "information processing" to refer to the precise kinds of operations that present-day computers do, such as storing exact copies of images pixel by pixel. It's obvious that brains don't do this, and this isn't what anyone means when they call brains computers. Brains store and process information using different and more lossy representations. This doesn't mean that the concept of information processing is inapplicable to brains.

Epstein says:

In his book In Our Own Image (2015), the artificial intelligence expert George Zarkadakis describes six different metaphors people have employed over the past 2,000 years to try to explain human intelligence.

For example:

- "hydraulic model of human intelligence, the idea that the flow of different fluids in the body – the ‘humours’ – accounted for both our physical and mental functioning."

- "By the 1500s, automata powered by springs and gears had been devised, eventually inspiring leading thinkers such as René Descartes to assert that humans are complex machines."

While Epstein dismisses these past metaphors as silly, I think they were impressive steps forward and remain somewhat true. For instance:

- "[T]he flow of different fluids in the body" does, in fact, have a lot to do with physical and mental functioning, such as through hormones and neurotransmitters. Much transmission of information and resources in the body is done by diffusion or pumping of chemicals.

- Humans are complex machines. While we don't use springs and gears, any study of human physiology will reveal similarly sophisticated mechanical processes, such as in the way the heart pumps blood. And brains are also machines in their own ways.

A single concept can be usefully cognized using multiple metaphors. No one thinks a metaphor exactly describes a thing—in that case, it wouldn't be a metaphor. But it's obvious that metaphors play a helpful role in transferring insights and thinking about new domains.

One section of Epstein's article discusses how a human catches a fly ball. Epstein claims that the information-processing view "requires the player to formulate an estimate of various initial conditions of the ball’s flight – the force of the impact, the angle of the trajectory, that kind of thing – then to create and analyse an internal model of the path along which the ball will likely move". The computer metaphor requires no such thing. Specific kinds of artificial intelligence take model-heavy approaches, but computers can also (and usually do) implement much simpler algorithms to solve problems. Epstein reports findings that humans catch the ball by "moving in a way that keeps the ball in a constant visual relationship with respect to home plate and the surrounding scenery". Fair enough, but a robot could implement such a procedure too. Epstein, however, says that the human baseball-catching approach is "completely free of computations, representations and algorithms." This is so wrong I don't know what to say. Keeping the ball in a constant visual relationship with the scenery requires registering where the ball appears and adjusting one's running according to a rule, which is exactly what an algorithm operating on a representation does.
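A robot version of a catching strategy like the one Epstein describes is easy to sketch, and writing it down shows that it is an algorithm operating on a representation. The code below is a hypothetical illustration of the error signal in one commonly discussed catching heuristic, optical acceleration cancellation; it is not necessarily the exact strategy behind the findings Epstein reports, and the function names and physical setup are illustrative assumptions. The fielder never estimates launch force or angle. It only checks whether the tangent of the ball's elevation angle is speeding up or slowing down across successive glances: with no air resistance, a fielder standing exactly at the landing point sees that tangent rise at a constant rate, so its second difference is zero.

```python
"""Error signal for a catching heuristic (optical acceleration cancellation).

Illustrative assumptions: no air resistance, ball and fielder in one vertical
plane, and the ball launched from ground level at home plate (x = 0).
"""
G = 9.81  # gravitational acceleration in m/s^2


def ball_position(t, vx, vy):
    """The world, not the fielder's mind: drag-free position at time t."""
    return vx * t, vy * t - 0.5 * G * t * t


def tan_elevation(t, fielder_x, vx, vy):
    """What the fielder perceives: tangent of the ball's elevation angle."""
    x, y = ball_position(t, vx, vy)
    return y / (fielder_x - x)


def optical_acceleration(t, dt, fielder_x, vx, vy):
    """Second difference of tan(elevation) over three successive glances."""
    a, b, c = (tan_elevation(t + k * dt, fielder_x, vx, vy) for k in range(3))
    return (c - 2 * b + a) / (dt * dt)


def fielder_command(accel, tolerance=1e-6):
    """Purely reactive rule: no trajectory model, just the sign of one signal."""
    if accel > tolerance:
        return "back up"  # image is speeding up: the ball will sail overhead
    if accel < -tolerance:
        return "run in"   # image is slowing down: the ball will drop short
    return "stay"         # image rises at a steady rate: keep this position


if __name__ == "__main__":
    vx, vy = 20.0, 20.0        # launch velocity components in m/s
    landing = vx * 2 * vy / G  # used only to place the example fielders
    for offset in (-10.0, 0.0, 10.0):
        accel = optical_acceleration(1.0, 0.1, landing + offset, vx, vy)
        print(offset, fielder_command(accel))
```

Here `ball_position` stands in for the physical world; the fielder's rule touches only samples of `tan_elevation`. A full controller would turn the command into a running speed at every time step.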

Finally, Epstein's article reinforces my own view that the brain is a type of computer (in a broad sense of "computer") by recounting how widespread this dogma is:

Propelled by subsequent advances in both computer technology and brain research, an ambitious multidisciplinary effort to understand human intelligence gradually developed, firmly rooted in the idea that humans are, like computers, information processors. This effort now involves thousands of researchers, consumes billions of dollars in funding, and has generated a vast literature consisting of both technical and mainstream articles and books. [...]

The information processing (IP) metaphor of human intelligence now dominates human thinking, both on the street and in the sciences.

If most experts accept the utility of the information-processing metaphor, that seems to me a prima facie reason to believe that it has merit.

Prejudice against computer sentience

Imagine that you're an explorer from the year 1750, journeying through the Amazon rainforest. After cutting away some bushes, you find a clearing. In the clearing is a creature you've never seen before: A laptop. The laptop is on and connected via Wi-Fi to the Internet. It's executing some scheduled routines that it has been programmed to run. You as the explorer would find this astonishing. While the creature can't seem to run away, it is capable of performing some sophisticated mental operations. It can even do arithmetic, which is often taken as a major achievement of intelligence when done by animals.

Yet, in present-day society, most people see computers as lifeless, non-conscious pieces of machinery. One striking example of this sentiment comes from Raymond Tallis in "Conscious computers are a delusion":

Most people would agree that the computers we have at present are not conscious: the latest Super-Cray with gigabytes of memory is no less zomboid than a pocket calculator. [...] We [...] have no reason for expecting that computers will be anything other than extremely complex devices in which unconscious electrical impulses pass into and out of unconscious electrical circuits and interact with any number of devices connected directly or indirectly to them.

At the same time that certain people seriously (and many more, jokingly) defend the idea of plant sentience, very few of them even consider that their laptops might be more sentient. Some say the difference is that life has a soul, and machines do not. I think this kind of idea, whether taken literally or just implicitly through aesthetic judgments, explains some of the prejudice against machines. Environmentalists who feel awe at the beauty of trees and Mother Earth regard computing clusters and motherboards with distaste. Another explanation for why computers seem non-sentient is that humans understand how they work, and any time we can pick apart the components of a system, we stop feeling like that system could be sentient.

To be fair, there are genuine reasons to be more skeptical about computer sentience than about animal sentience:

- Because humans have designed computers, we can rig them to seem sentient even when there's little going on under the hood. One of the clearest examples of this is with chatbots or other hand-tuned forms of intelligence, including question-answering systems and even search engines to some degree. These are cases where human intelligence did the hard work, and computers are just aping it back. However, in other cases—like the managing functions of an operating system, machine-learned applications, and even the general information-processing functionality that computers exhibit—computers are doing hard work just as much as an animal brain is. And in any case, some features of an animal brain are just hard-wired by DNA. Here, animals are "just aping back" information that was created by the "hard work" of evolution.

- We're phylogenetically related to other animals, and animals share many similarities in brain structure, neurochemistry, etc. Therefore, if there are crucial components to sentience in biological brains that cognitive science has so far missed, animals will be more likely to have them than computers.

Anthropomorphism: Too much and too little

In "Extending Legal Rights to Social Robots," Kate Darling suggests that it could make sense to legally protect robots not because they actually feel any emotions but only because people mistakenly treat them as if they do. Darling cites numerous instances in which people bond with robots—particularly those designed to appear socially responsive, but even just Roomba vacuum cleaners or robots that defuse landmines. Darling says: "The projection of lifelike qualities begins with a general tendency to overascribe autonomy and intelligence to the way that things behave, even if they are merely following a simple algorithm." As an example, she asks: "how many of us have been caught in the assumption that the shuffle function on our music players follows more elaborate and intricate rules than merely selecting songs at random?"

Darling has a point. Our pre-reflective attitudes toward the mentality of objects are not the end of the story. It's important to refine these intuitions based on further knowledge about what's going on inside. An elaborate doll is clearly less sentient than a computer, yet most people would feel more averse to seeing the doll shredded apart than they would to seeing a computer thrash and crash. Superficial appearance seems to be a main explanation for the difference, and the fact that the doll is more physically tangible may contribute as well. (Wasting resources by destroying the doll could potentially come into play too.)b

At the same time that humans may over-empathize with some robots and even inanimate objects, we may under-empathize with computers. The actions that a computer takes are not very "animal-like," and they don't often lead to physical changes in the world, apart from the "physical" processes of manipulating pixels on a screen, turning on the computer fan, etc.

It's interesting that people haven't developed reciprocity intuitions towards their personal computers, in the way that they sometimes do toward helper NPCs in video games or cooperative robot cats. Again, this is perhaps because computers seem boring and abstract compared with baby-like objects that we can see move around.

Fundamentally, the anthropomorphism critique is correct: Computers are not humans, and computers do not have the kinds of thoughts that humans would have in their situation. They don't think, "I'm happy that I'm running smoothly!" or "I'm bored waiting for the user's next input." Computers don't have the mental machinery to generate these kinds of thoughts, and many human emotions like boredom would not arise without being explicitly designed. However, computers do have their own rudimentary forms of "experiences." They do try to accomplish goals, make plans, reflect on their performance, and so on. They do these things differently from how humans do them, but unless we take a narrow view of what's morally significant, we might also care about the way computers operate, at least to some small extent.

Computers are kind of like aliens. They're built in a radically different way from what we're familiar with, and they don't think in the same ways as we do. But they do still think, act, and aim to accomplish their objectives. That seems to count at least a little bit.

Are laptops emotional?

The computational theory of mind holds that everything we experience is information processing of some sort or other in our brains. The "hard problem of consciousness" asks why certain sorts of information processing are "conscious" while others aren't. But another question that also seems a little puzzling is: Why are some sorts of information processing emotional while others aren't? Maybe one can argue that all mental states involve some traces of emotion, but at least it's clear that some experiences seem more "intensely emotional" than others.

Several authors, including Antonio Damasio and Marvin Minsky, challenge the naive separation of "emotion" versus "reason", pointing out that emotion plays an important role in cognition. I agree, but it still seems that we're tracking something meaningful when we distinguish "cold, calculating" thoughts from "hot, emotional" moods.

Following are some candidate features that characterize strong emotions:

- Induce quick alterations in brain state, changing the brain to a different mode of operation. For instance, anger temporarily switches on an aggression/vengeance mode.c

  - Example in laptops: When RAM fills up and the operating system starts swapping memory pages out to disk, performance can suddenly slow down.

- Produce stronger impacts on memory than ordinary, dry, boring thoughts.

  - Example in laptops: Saving files, installing programs, updating system configurations, etc. These produce lasting changes on disk that can be retrieved later, in contrast with temporary data in RAM that's later overwritten.

- Cause strong shifts in physiological state, such as increased heartbeat, hormone release, crying, etc.

  - Example in laptops: When power runs low, a laptop may darken the screen to conserve energy, and when power gets very low, a warning pops up. A laptop may also turn on its fan when it overheats.

- Modify behavioral inclinations via reinforcement learning.

  - Example in laptops: I can't think of many common examples of reinforcement learning in an ordinary laptop operating system. (Of course, specialized programs may certainly implement reinforcement learning.)
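The swapping example can be made concrete with a toy model. The Python sketch below simulates least-recently-used (LRU) page replacement, a textbook policy that real operating systems typically only approximate, with a hypothetical memory of 8 page frames and a program that loops over its working set again and again. Under these assumptions, the slowdown is a cliff rather than a slope: once the working set exceeds physical memory by even one page, this access pattern turns every memory access into a page fault.

```python
from collections import OrderedDict


def lru_page_faults(accesses, frames):
    """Count page faults for an access sequence under LRU replacement."""
    resident = OrderedDict()  # pages in RAM, least recently used first
    faults = 0
    for page in accesses:
        if page in resident:
            resident.move_to_end(page)  # hit: mark as most recently used
        else:
            faults += 1  # miss: the page must be fetched from disk
            if len(resident) >= frames:
                resident.popitem(last=False)  # evict the least recently used page
            resident[page] = None
    return faults


FRAMES = 8  # hypothetical amount of physical memory, in pages

for working_set in (6, 7, 8, 9, 10):
    pattern = list(range(working_set)) * 100  # loop over the working set repeatedly
    rate = lru_page_faults(pattern, FRAMES) / len(pattern)
    print(f"working set {working_set:2d} pages: fault rate {rate:.2f}")
```

Real workloads are rarely this regular, so actual slowdowns are usually less perfectly abrupt, but the same qualitative "mode switch" (often called thrashing) is what makes an overloaded machine suddenly feel sluggish.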

It's not easy to think of examples of basic laptop functioning (not counting specific software programs) that embody all of the above traits at once. In this sense, it seems plausible to characterize laptops as less emotional than humans, though I don't think they should be considered wholly unemotional either.

Relevance of computer science to sentience and artificial intelligence

When I studied computer science in college, I wasn't particularly interested in computer hardware, architecture, or operating systems. I found the software and machine-learning side of things more intriguing. Now that I better recognize the connections between computer and brain designs, I find it much more important to study how computers are built. Doing so is like learning alien neuroscience.

In fact, computer science that doesn't explicitly discuss AI is still quite relevant to artificial general intelligence (AGI) and sentience:

- All aspects of computer science (and technology more generally) give us an "outside view" on how, and how fast, technology progresses in the face of feedback loops. Moore's law, compilers, productivity software, and human-computer interaction are all examples of "recursive self-improvement".

- Software-engineering processes and testing are relevant to AGI takeoff speeds, project sizes, and safety.

- Computer security provides helpful mental exercise when thinking about AGI risks, and it seems directly relevant to those risks, because one of the best ways for an AGI to gain power seems to be to exploit security flaws in order to spread itself.

- Distributed systems are relevant because any human-level AGI is going to need to be distributed across a huge number of machines.

- General system/architecture design embodies principles similar to those of mind-architecture design. Most AGI cognitive architectures look a lot like regular computer-system architectures, except with more psychological inspiration.

- Networks have something to say about sentience, especially according to views like global-workspace theory and integrated-information theory. Plus, understanding computer networks informs speculation about what interplanetary and intergalactic communication among AGIs might look like.

- Computer hardware illustrates parallels and contrasts with neural hardware.

In general, when I study computer science, I immerse myself in the life of a computer's mind and ask myself how I feel about this computational system that's somewhat similar to and somewhat different from myself. I also think that if you want to predict the future of machine intelligence, the past and present are good places to start. It's quite plausible to me that AGI will look more like the Internet and other computer technologies we have today than like a unified human-like agent.

Several years ago, when I didn't know much about AGI, I thought AGI might be a very different sort of technology from ordinary computers, since fiction seems to draw a sharp distinction between regular software and "self-aware" agents. The more I learned about AGI, the more I realized, "This is basically just software like everything else, except with some differences in emphasis." This isn't necessarily to say that AGI work is less illuminating than I thought, but rather that regular computer science is more edifying than I thought.

Michael I. Jordan, a machine-learning expert at Berkeley, was the bear in the AI panel. Computer science remains the overarching discipline, not AI, and neural nets are a still-developing part of it, he argued.

“It’s all a big tool box,” he said.

I feel a sort of spiritual connection with computer science, because it has so transformed my world view. Life, the universe, and everything make more sense in light of it. Probably every discipline thinks it has found the key to explaining reality, but I do feel that computer science touches on something fundamental about the universe.

In addition to appreciating the relevance of computer hardware, operating systems, etc. to ethics, I've also grown to see the moral relevance of physiology, since even non-brain processes are computational in their own ways and so embody traces of sentience. In 2014 I revisited some physiology material that I hadn't studied since 2002, and I realized how much more significance it had now that I was looking at it from an ethical standpoint. Much as I enjoyed high school, I didn't appreciate the real importance of many of the things I was learning until later on.

Even today, if I'm trying to learn about computers from a practical standpoint, it's hard to simultaneously think about the ethical implications of the information I'm absorbing. I tend to be so focused on understanding what's going on mechanistically that I forget to look at the computer as a mind.

Feedback

See a discussion of this piece on my Facebook timeline.

Optional: Mathematical arguments against strong AI

Math is very powerful and insightful when used properly, but when math is applied to concrete problems, the rule is "garbage in, garbage out". Alas, you can "prove" anything you like with math by adopting some unrealistic specifications. One of my computer-science professors used to say, "With mathematical statements, you always have to read the fine print."

This section critiques two attempted mathematical disproofs of strong AI in various forms.

Compression and consciousness

The paper "Is Consciousness Computable? Quantifying Integrated Information Using Algorithmic Information Theory" has a well-written explanation of integrated information theory and suggests an interesting possible connection between compression and consciousness. The paper goes on to claim that if we define consciousness in a certain mathematically abstract way, then consciousness is not computable. It was popularized by a New Scientist article that took the paper's already dubious claims to a new level of exaggeration.
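To give a rough feel for how compression can quantify how much two parts of a system "hang together": a common trick (the idea behind normalized compression distance) is that if two parts share structure, compressing them jointly costs less than compressing them separately. The Python sketch below (3.9+ for `randbytes`) is an illustration under that assumption, not the measure defined in the paper. It uses zlib's compressed length as a crude, computable stand-in for algorithmic (Kolmogorov) complexity, which is itself uncomputable; the helper names are hypothetical.

```python
import random
import zlib


def compressed_size(data: bytes) -> int:
    """zlib output length: a crude, computable proxy for algorithmic information."""
    return len(zlib.compress(data, 9))


def shared_information(a: bytes, b: bytes) -> int:
    """Approximate I(a; b) as C(a) + C(b) - C(a + b), in bytes."""
    return compressed_size(a) + compressed_size(b) - compressed_size(a + b)


rng = random.Random(0)        # fixed seed so the demo is repeatable
part_a = rng.randbytes(2000)  # random bytes: essentially incompressible
part_b = rng.randbytes(2000)  # generated independently of part_a

# Independent parts: compressing them together saves almost nothing.
print("independent:", shared_information(part_a, part_b))
# Redundant parts: the second copy becomes a cheap back-reference.
print("identical:  ", shared_information(part_a, part_a))
```

The estimate is only as good as the compressor: zlib can't see patterns longer-range or subtler than its 32 KB window and match rules allow, which is one reason compression-based measures are approximations rather than exact quantities.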

The authors of the paper say:

If the brain integrated information in this manner [of an XOR gate], the inevitable cost would be the destruction of existing information. While it seems intuitive for the brain to discard irrelevant details from sensory input, it seems undesirable for it to also hemorrhage meaningful content. In particular, memory functions must be vastly non-lossy, otherwise retrieving them repeatedly would cause them to gradually decay.
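The information loss the authors describe is easy to quantify for a single gate. An XOR gate maps four input pairs onto only two output values, so observing the output leaves one bit of the input unrecoverable. The Python sketch below (helper names are illustrative, not from the paper) measures that loss with Shannon entropy, assuming all four inputs are equally likely.

```python
from collections import Counter
from itertools import product
from math import log2


def entropy(counts):
    """Shannon entropy, in bits, of a distribution given as occurrence counts."""
    total = sum(counts)
    return -sum(c / total * log2(c / total) for c in counts if c)


# All four input pairs, assumed equally likely.
inputs = list(product([0, 1], repeat=2))
outputs = [a ^ b for a, b in inputs]

h_in = entropy([1] * len(inputs))           # information in the inputs
h_out = entropy(Counter(outputs).values())  # information surviving in the output
print(f"bits destroyed by XOR: {h_in - h_out}")

# Each output value is consistent with two different inputs.
for y in sorted(set(outputs)):
    print(y, "<-", [x for x, o in zip(inputs, outputs) if o == y])
```

Because the gate is deterministic, the lost bit is exactly the difference between input and output entropy; nothing downstream can recover which of the two matching inputs produced a given output.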

Ironically, the day before the New Scientist piece was published, another finding was popularized in the press: "New Neurons Found to Overwrite Old Memories." Of course, this discovery was not unexpected. It's well known that when we retrieve memories, we change their contents in subtle ways. Memories don't decay much because their connections are strengthened on retrieval, but new connections definitely tamper with the old ones.

The authors of "Is Consciousness Computable?" go on to concede that physics must allow neurons to be simulated computably, so their claim about consciousness applies only to how things look to outside observers, who don't know how to tease apart the operations of someone else's brain. Fine. But by that same standard, gzip compression is conscious too, since a human can't figure out in his own head how to break a zipped file down into its components; it's too complicated.
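Part of why a zipped file resists being broken into components is that gzip's DEFLATE format builds on LZ77-style back-references (plus Huffman coding). A back-reference token means "copy text from N characters earlier," so it's meaningless without everything decoded before it. The toy greedy LZ77 coder below is a hypothetical illustration, not gzip's actual implementation (it has no Huffman stage and uses a simple brute-force match search).

```python
from typing import List, Tuple, Union

# A token is either ("lit", char) or ("ref", distance, length).
Token = Union[Tuple[str, str], Tuple[str, int, int]]


def lz77_encode(data: str, window: int = 4096, min_match: int = 3) -> List[Token]:
    """Toy greedy LZ77 encoder (no Huffman stage, unlike real DEFLATE)."""
    tokens: List[Token] = []
    i = 0
    while i < len(data):
        best_len, best_dist = 0, 0
        # Look back through the sliding window for the longest match at i.
        for j in range(max(0, i - window), i):
            length = 0
            # A match may run past i and overlap itself, as in standard LZ77.
            while i + length < len(data) and data[j + length] == data[i + length]:
                length += 1
            if length > best_len:
                best_len, best_dist = length, i - j
        if best_len >= min_match:
            tokens.append(("ref", best_dist, best_len))
            i += best_len
        else:
            tokens.append(("lit", data[i]))
            i += 1
    return tokens


def lz77_decode(tokens: List[Token]) -> str:
    """Rebuild the text; a back-reference is only meaningful given earlier output."""
    out: List[str] = []
    for tok in tokens:
        if tok[0] == "lit":
            out.append(tok[1])
        else:
            _, dist, length = tok
            if dist > len(out):
                raise ValueError(f"reference reaches {dist} chars back, before the start")
            start = len(out) - dist
            for k in range(length):
                out.append(out[start + k])  # copy one char at a time to allow overlap
    return "".join(out)


text = "abcabcabcabc"
tokens = lz77_encode(text)
print(tokens)  # three literals, then one back-reference covering the rest
assert lz77_decode(tokens) == text

try:
    lz77_decode(tokens[3:])  # try to decode the "second half" on its own
except ValueError as err:
    print("fragment is uninterpretable:", err)
```

The whole token stream decodes perfectly, but its last token, taken alone, has no meaning: the information is spread across the stream rather than stored piece by piece.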

Overall, "Is Consciousness Computable?" presents some interesting math, and I agree that integration of information seems an important aspect of consciousness in brains, but anything beyond that is misleading.

Lucas-Penrose Argument

When it comes to deceptive math that misleads on cognitive science, The Lucas-Penrose Argument about Gödel's Theorem may be the classic example. I remain confused about how this argument is supposed to work, so I can't give a good response. I archived some of my confused thoughts in "Replies to the Lucas-Penrose Argument", but that page is not worth reading unless you want to slog through possibly misguided draft ideas.

Footnotes

- R. Clay Reid mentions other differences between brains and computers:

  - Most computer wires hook up to at most a few other components. Most neurons connect to hundreds or thousands of other neurons. [citation]

  - Computer chips are mostly 2-dimensional, while cortical circuits are 3-dimensional. [citation]

  Of course, in software these differences can be eliminated, but at the expense of slowness. (back)

- People sometimes assume that because I attribute sentience to lots of systems, I'd be opposed to seeing dolls dismembered or chocolate bunnies melted. My response is as follows. I'm probably benighted, but I don't care about dismembering dolls or melting chocolate bunnies (at least not more than I'm opposed to performing any other physical transformation on the world). One might be able to make an argument about how the structure of a doll carries some implicit agential properties that are absent from random arrangements of matter, and maybe tomorrow's philosophers will decry my unconcern for dolls as barbaric. (back)

- In Aliens Ate My Homework, Bruce Coville's character Grakker illustrates this idea. His behavior can be modified in various ways by insertion of different modules into the back of his head. One of them is the berserk module, which makes Grakker pound against the wall. (back)

!['BenQ Joybook 8100 showing the Dutch BenQ article. JPG version with white background.' By Hay Kranen (Own work) [GFDL (http://www.gnu.org/copyleft/fdl.html), CC-BY-SA-3.0 (http://creativecommons.org/licenses/by-sa/3.0/) or CC-BY-2.5 (http://creativecommons.org/licenses/by/2.5)], via Wikimedia Commons.](/wp-content/uploads/2014/09/Benq_joybook.jpg)